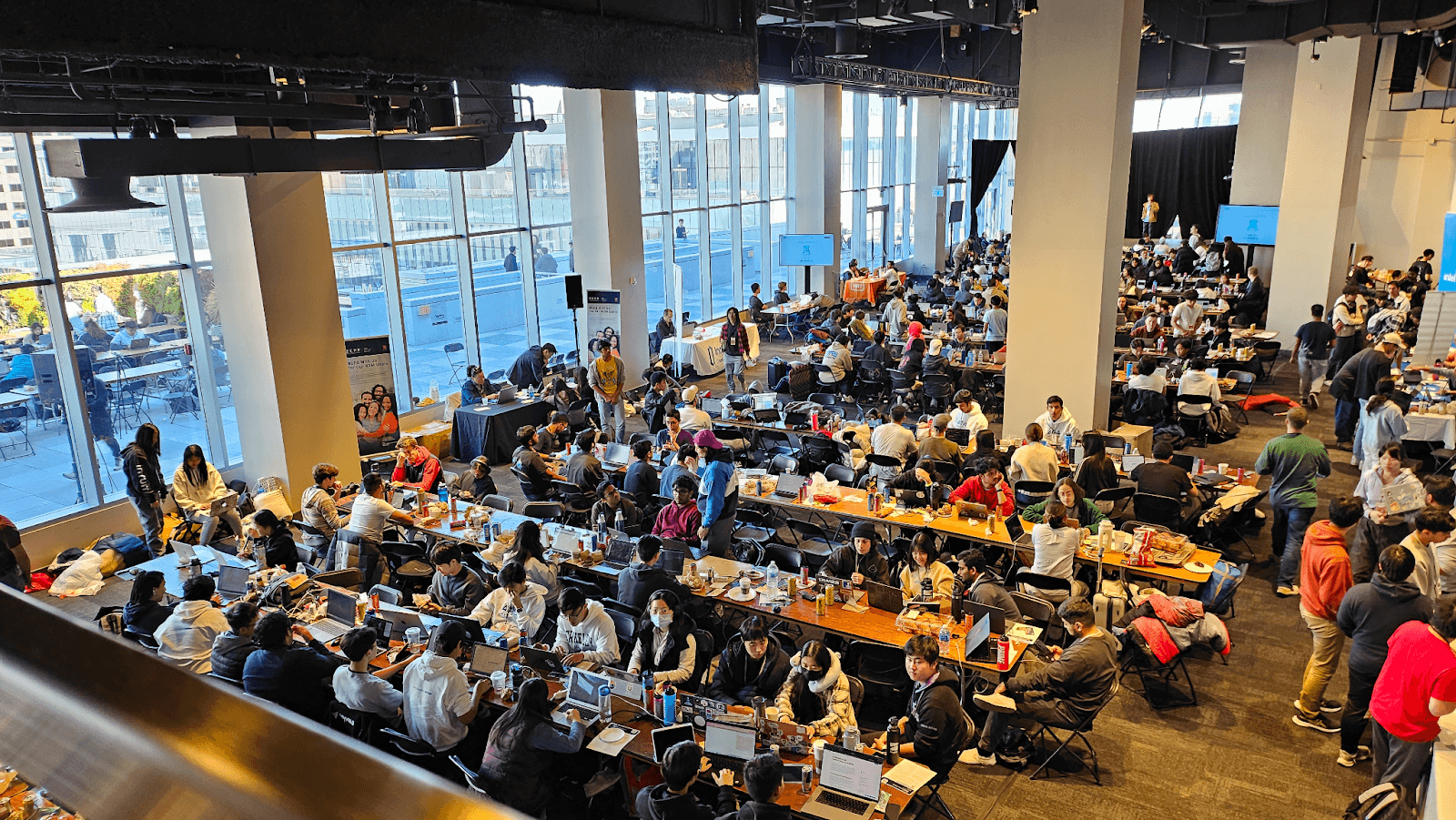

Zilliz at CalHacks 2023

I’m writing this from the heart of San Francisco’s Metreon on a sunny Sunday afternoon. CalHacks has been running here since Friday afternoon (Oct 27-29). With $137,650 in prize money, plus sponsor awards, there were:

- 1949 people on the CalHacks Slack chat during the event.
- Over 1000 students from around the world coming together in person to hack.
- 764 students officially competing in the event.
- 240 competition project submissions.
- 12 outstanding projects competing for the Zilliz sponsor prize, “Best Use of Milvus”.

Image source: CalHacks 2023.

We just awarded our Zilliz prizes and the projects were innovative and impressive!

Best Use of Milvus - First Place

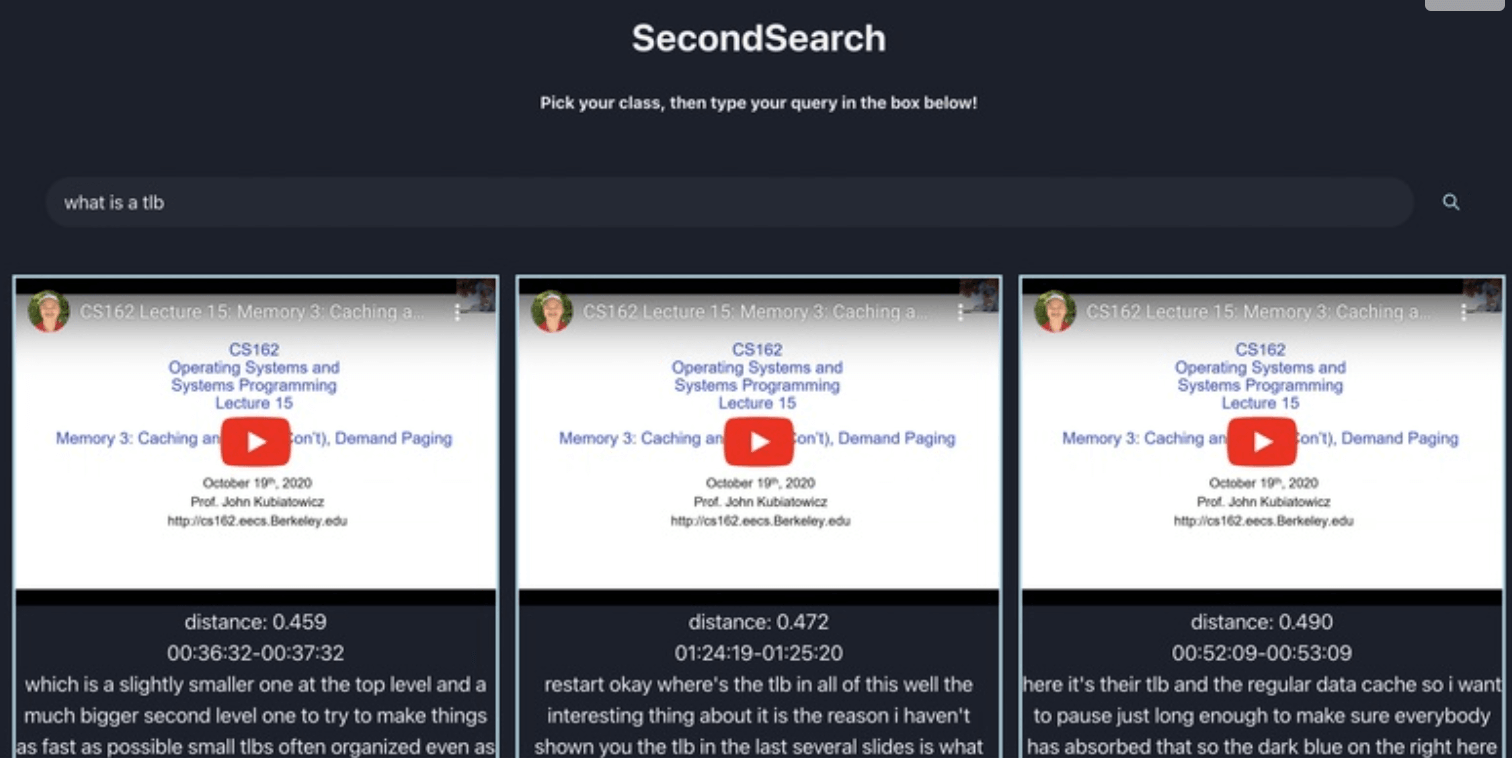

First place went to the project Second Search. By

Catherine Jin (BS EECS ‘25 UC Berkeley)

Kanishk Garg (BS CS ‘24 University of Texas at Dallas)

What they did: They took CS162 lecture videos that had been uploaded to YouTube, embedded the caption text of those videos, and saved the embedding vectors in Milvus.

Users could type a topic they wanted to learn about. I typed “Explain LRU cache”; my coworker typed “Explain Linux sockets”. The search returned three video segments, each linked to the exact second in the video where the professor explained the searched-for topic. All you had to do was click “Play” to hear that section of the video. Below each video, a text panel showed 1) the vector search distance metric, 2) the timestamp in the video being played, and 3) a text summary of what the professor was saying in that segment.

We thought the UI was simple to use and had a real-time feel (~1 second response time). It worked (it showed videos with relevant content), used Milvus in a creative way, and sounded very useful! We would love to see a product like this available on YouTube!

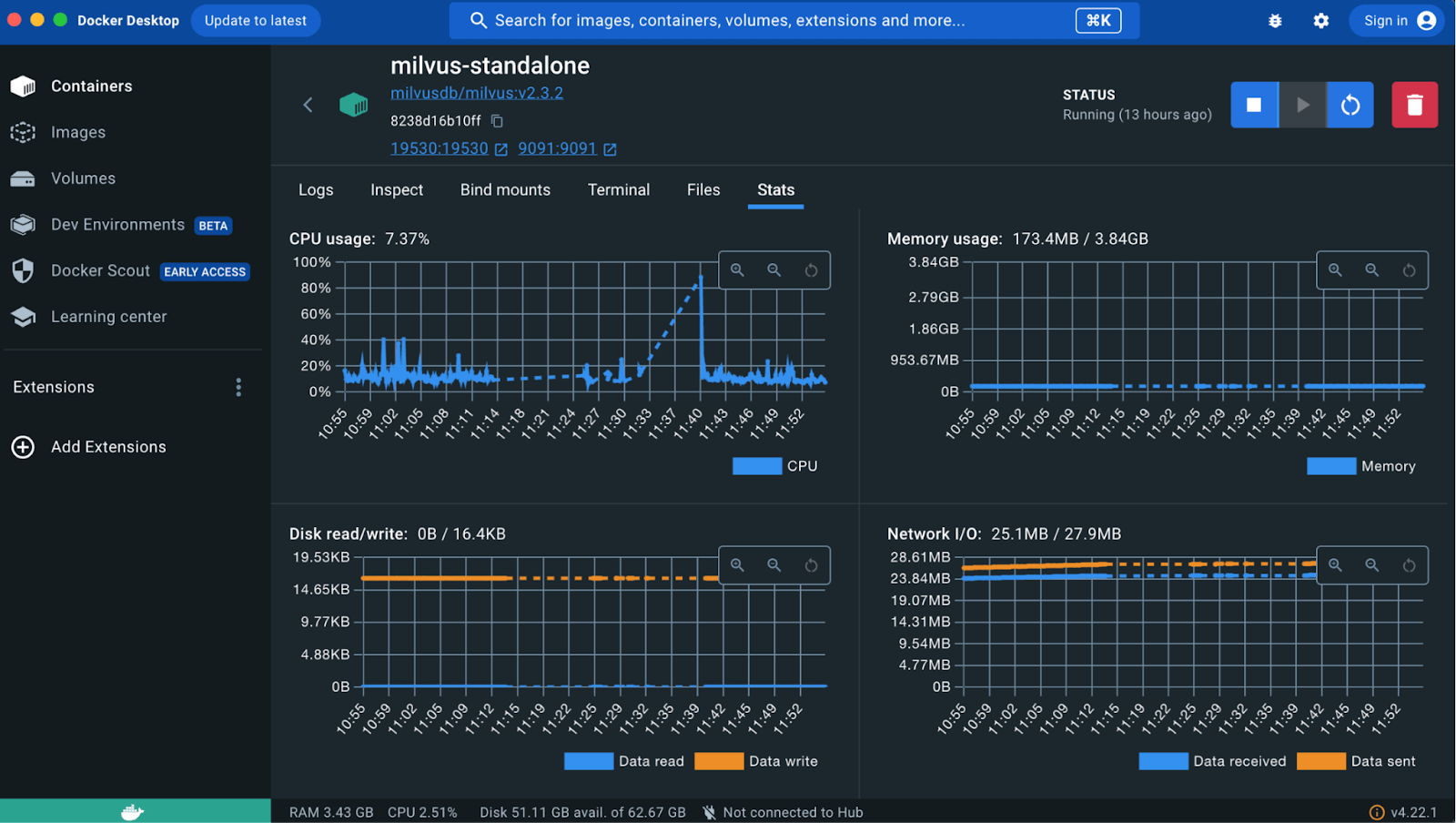

How they built it: They built SecondSearch on a locally running open-source Milvus vector database, using OpenAI to generate the embeddings, and completed the product with a companion React frontend built with the Chakra UI component library. The backend was built with FastAPI, and the Milvus Docker containers were populated from Jupyter notebooks. Milvus is even faster on Zilliz (cloud), but there were Wi-Fi difficulties in the room during the hackathon.
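To make the idea concrete, here is a minimal sketch of timestamped caption search. It is not the team's code: a toy bag-of-words vector stands in for the OpenAI embeddings, a plain Python list stands in for the Milvus collection, and the video IDs and caption segments are made up for illustration.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy bag-of-words 'embedding' (a stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


# Hypothetical caption segments: (video_id, start_second, caption_text).
SEGMENTS = [
    ("vid_a", 95, "an lru cache evicts the least recently used entry"),
    ("vid_a", 410, "page tables map virtual addresses to physical frames"),
    ("vid_b", 30, "a socket is an endpoint for network communication"),
    ("vid_b", 720, "the cache replacement policy decides which entry to evict"),
]

# In-memory "index" (a stand-in for a vector database collection).
INDEX = [(embed(text), vid, start, text) for vid, start, text in SEGMENTS]


def search(query, top_k=3):
    """Return the top_k segments, each with a link that starts at its timestamp."""
    q = embed(query)
    scored = sorted(
        ((cosine(q, vec), vid, start, text) for vec, vid, start, text in INDEX),
        key=lambda row: row[0],
        reverse=True,
    )
    return [
        {
            "score": round(score, 3),
            "start": start,
            "text": text,
            # YouTube's t= parameter starts playback at the given second.
            "url": f"https://www.youtube.com/watch?v={vid}&t={start}s",
        }
        for score, vid, start, text in scored[:top_k]
    ]


if __name__ == "__main__":
    for hit in search("Explain LRU cache"):
        print(hit["score"], hit["url"], hit["text"])
```

Storing the start second alongside each vector is the key design choice here: the similarity search finds the segment, and the stored metadata turns it into a link that opens the video at the right moment.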

Best Use of Milvus - Second Place

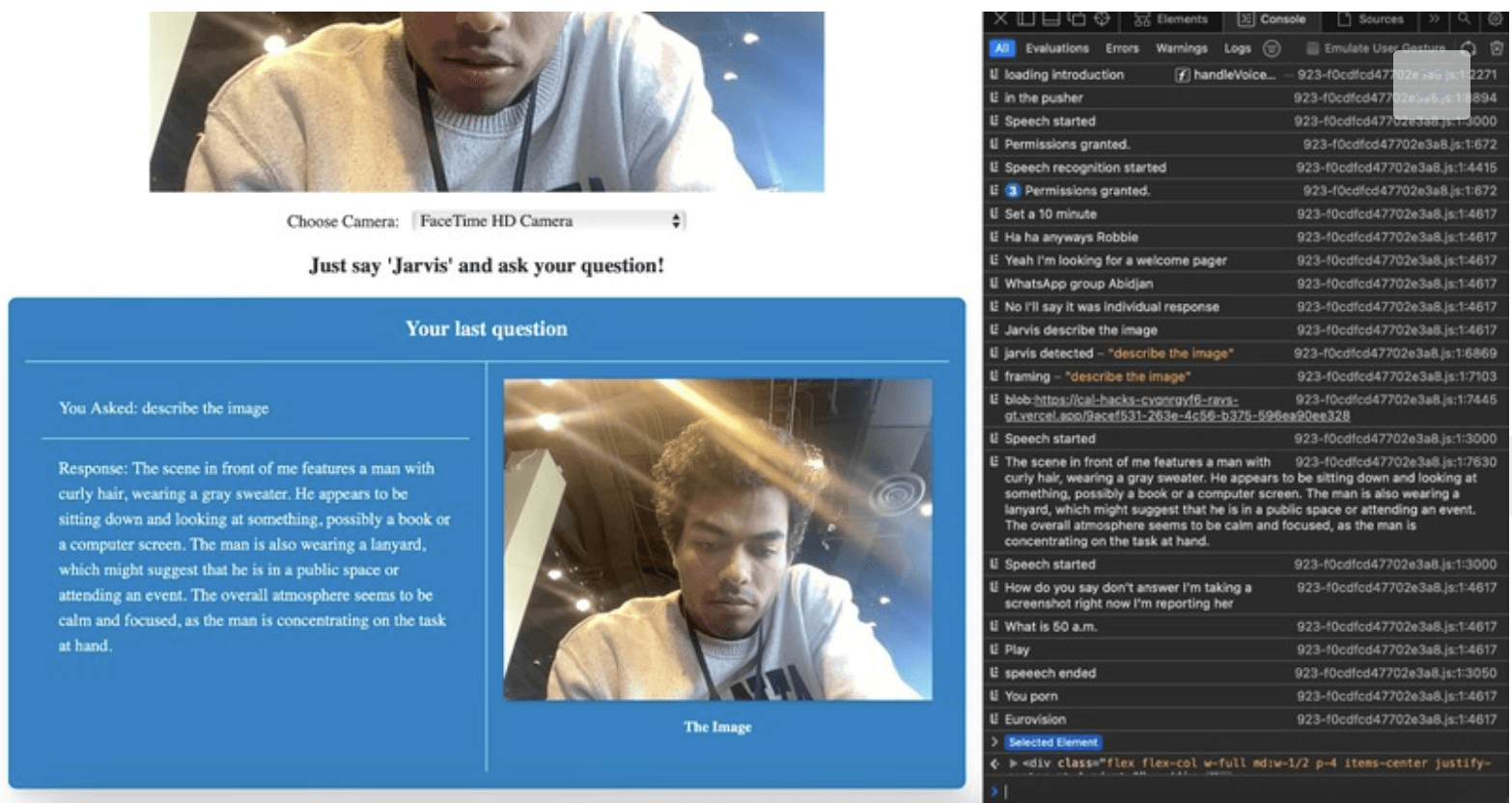

Second place went to the project Jarvis. By

Dhruv Roongta (BS CS ‘27 Georgia Institute of Technology)

Arnav Chintawar (BS CS ‘27 Georgia Institute of Technology)

Shanttanu Oberoi (BTech Engineering Physics ‘26 IIT Bombay)

Sahib Singh (BS CS ‘27 Georgia Institute of Technology)

What they did: They built a system that describes what a camera sees in response to a user’s request or question about what is going on visually. The user’s request is converted from speech to text (similar to Siri/Alexa). At the same time, the image from the camera is converted into text. Finally, text-to-speech describes what is going on to a visually impaired person.

The model could recognize facial expressions, providing blind people with social cues. Users could ask questions that may require critical reasoning, such as what to order from a menu or how to navigate complex public transport maps. The system was extended to Amazfit, enabling users to get a description of their surroundings or identify the people around them with a single press.

When I walked up to the camera, it was able to tell I was a woman with glasses, probably a judge.

How they built it: They used Hume, LLaVA, OpenCV, ResNet-50, and Next.js, with the frontend running on an Amazfit smartwatch via Zepp OS. They used Zilliz (cloud) to host Milvus online, and stored a dataset of images and their vector embeddings. Each image was labeled with a person's identity, which let them build an identity-classification tool on top of Zilliz's reverse image search. They set a minimum match threshold; below it, a person was treated as unrecognized, i.e. their data was not in Zilliz. The estimated accuracy of this model was around 95%.
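The thresholding step can be sketched as a nearest-neighbor lookup with a cutoff. This is only an illustration under stated assumptions: the three-dimensional vectors, the names, and the 0.9 cosine-similarity cutoff are all hypothetical, and the post does not say which metric or threshold value the team actually used.

```python
import math


def cosine(a, b):
    """Cosine similarity between two dense vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


# Hypothetical enrolled identities and their (tiny, made-up) face embeddings.
KNOWN = {
    "alice": [0.9, 0.1, 0.0],
    "bob": [0.0, 0.8, 0.6],
}


def identify(embedding, known=KNOWN, min_similarity=0.9):
    """Return (name, score) for the closest enrolled identity.

    If the best score is below min_similarity, the person is treated as
    unknown and name is None.
    """
    best_name, best_score = None, -1.0
    for name, vec in known.items():
        score = cosine(embedding, vec)
        if score > best_score:
            best_name, best_score = name, score
    if best_score < min_similarity:
        return None, best_score
    return best_name, best_score


if __name__ == "__main__":
    print(identify([0.88, 0.12, 0.01]))  # close to an enrolled vector
    print(identify([0.5, 0.5, 0.5]))     # not close to anyone enrolled
```

Without the cutoff, a nearest-neighbor search always returns someone, so a stranger would be labeled as whichever enrolled person happens to be closest.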

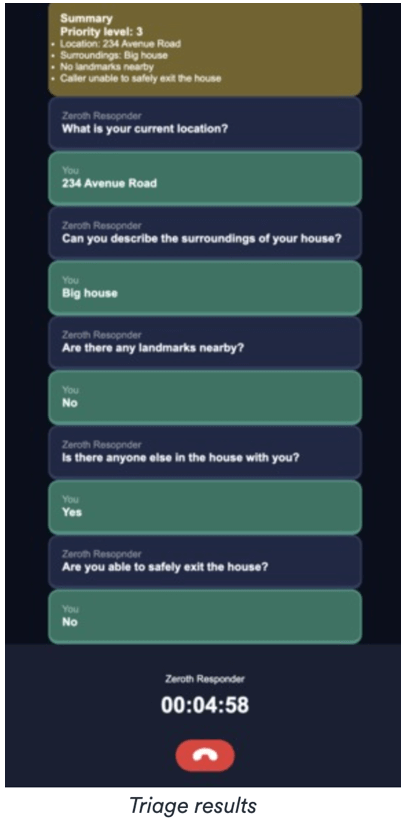

Best Use of Milvus - Honorable Mention One

Leo Liu (BS CS ‘26 U Michigan College of Engineering)

Brian Travis (BE CS ‘25 U Michigan College of Engineering)

What they did: Following 911 protocol, they built an AI 911 agent that listens to the caller, asks follow-up questions, finds out whether anyone is in immediate danger, and assesses and classifies the situation, assigning it an urgency. This urgency is used as the 0th step to prioritize resource allocation. The motivation was that, during the recent Lahaina, HI fires, it reportedly took 45 minutes from the first reported call to dispatch emergency services.

During the demo, which simulated a Lahaina caller trapped by fire, the system assessed the danger within about 15 seconds.

How they built it: They stored 911 training manual PDFs in a Milvus vector database, then queried it in conjunction with LLMs (OpenAI) to create responses to the caller and assess the situation: the classic RAG pattern. They ran a local Milvus server on Docker and used the Node.js SDK. The full stack (client, server, and Milvus DB) used FastAPI and Next.js, which is built on top of React.
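For readers new to RAG, the flow is: retrieve the most relevant passages, put them in the prompt, then call the LLM. The sketch below shows that shape only. The manual excerpts are invented, a keyword-overlap ranker stands in for the Milvus vector search, and `llm` is any callable you supply (a hypothetical stand-in for the OpenAI call), so nothing here reflects the team's actual prompts or code.

```python
import re

# Hypothetical protocol excerpts (stand-ins for chunks of the manual PDFs).
MANUAL_CHUNKS = [
    "Fire: ask whether the caller can safely exit the building or vehicle.",
    "Medical: ask whether the patient is conscious and breathing.",
    "Fire: if the caller is trapped, classify the call as highest urgency.",
]

STOPWORDS = {"a", "am", "and", "are", "as", "i", "if", "in", "is", "my", "or", "the", "we"}


def tokens(text):
    """Lowercased word set with common stopwords removed."""
    return set(re.findall(r"[a-z]+", text.lower())) - STOPWORDS


def retrieve(query, chunks, top_k=2):
    """Rank chunks by keyword overlap (a stand-in for vector similarity search)."""
    q = tokens(query)
    ranked = sorted(chunks, key=lambda c: len(q & tokens(c)), reverse=True)
    return ranked[:top_k]


def build_prompt(transcript, passages):
    """Assemble the LLM prompt from the retrieved passages and the transcript."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "You are assisting a 911 dispatcher. Use only the protocol excerpts below.\n"
        f"Protocol excerpts:\n{context}\n\n"
        f"Caller transcript: {transcript}\n"
        "Reply with one follow-up question and an urgency level (low, medium, high)."
    )


def answer(transcript, llm, chunks=MANUAL_CHUNKS):
    """Retrieve, build the prompt, then hand it to the caller-supplied LLM."""
    passages = retrieve(transcript, chunks)
    return llm(build_prompt(transcript, passages))


if __name__ == "__main__":
    # Echo the prompt instead of calling a real model.
    print(answer("There is a fire and I am trapped in my car", llm=lambda p: p))
```

Passing the model in as a function keeps the retrieval and prompt-building steps testable without network access.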

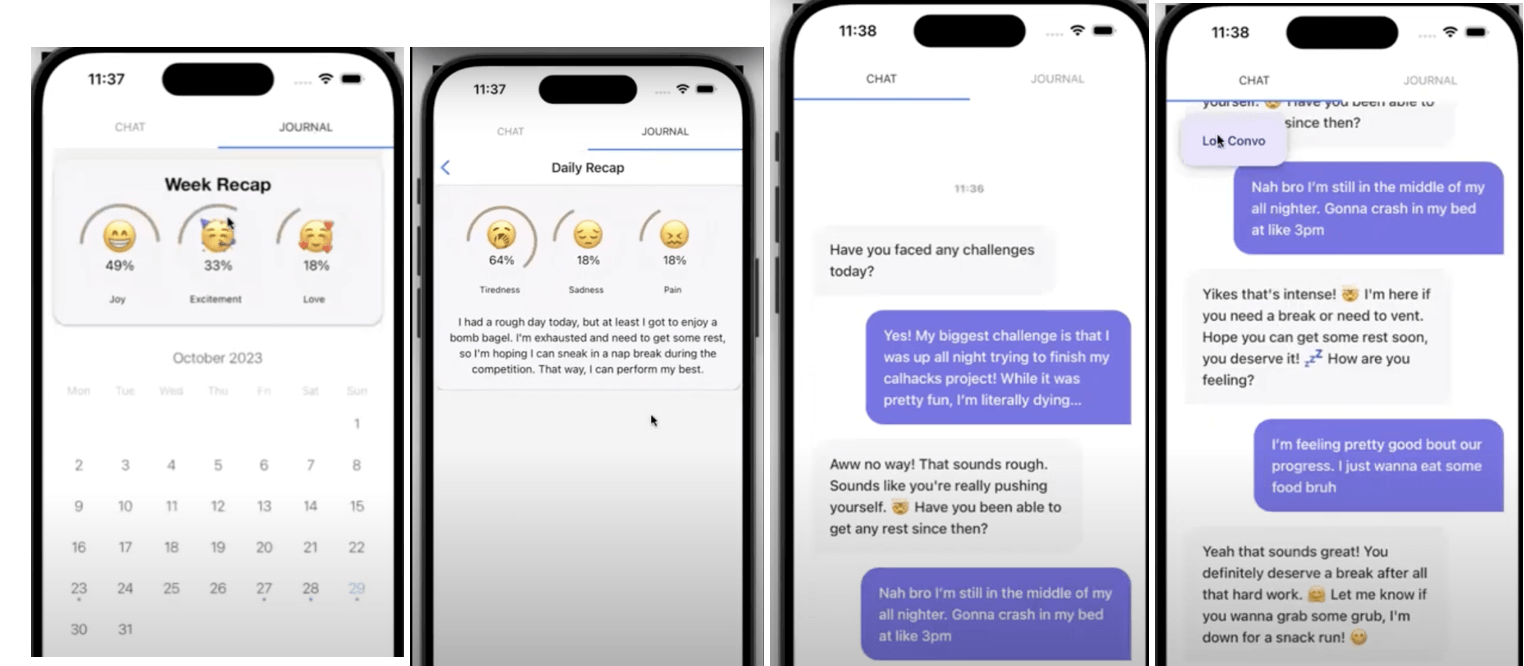

Best Use of Milvus - Honorable Mention Two

Mental Maps by

Anirudh Pai (BS EECS ‘26 UC Berkeley)

Kushal Kodnad (BS EECS ‘26 UC Berkeley)

Rushil Desai (BS EECS ‘26 UC Berkeley)

Raghav Punnam (BS EECS ‘25 UC Berkeley)

What they did: They created an AI chatbot that checks in on your feelings and experiences. Each interaction is subject to sentiment analysis, enabling the app to extract the user's prevailing mood. At the end of each week, the application analyzes your emotions, generates weekly summaries, and helps you gain a better understanding of your mental well-being, all in an effort to foster self-awareness and promote better mental health.

How they built it: They used the Hume API for text-based sentiment analysis to gauge the user's emotional responses in the chat interface each day. A BERT model from Hugging Face converted user responses into high-dimensional vector embeddings, which were stored in the Milvus vector database to support similarity searches between responses. For the frontend, they used React Native to build an iOS application. The frontend used "axios" to send HTTP requests to the Flask REST server. Application navigation was controlled with database navigation routers, which securely store user sessions.

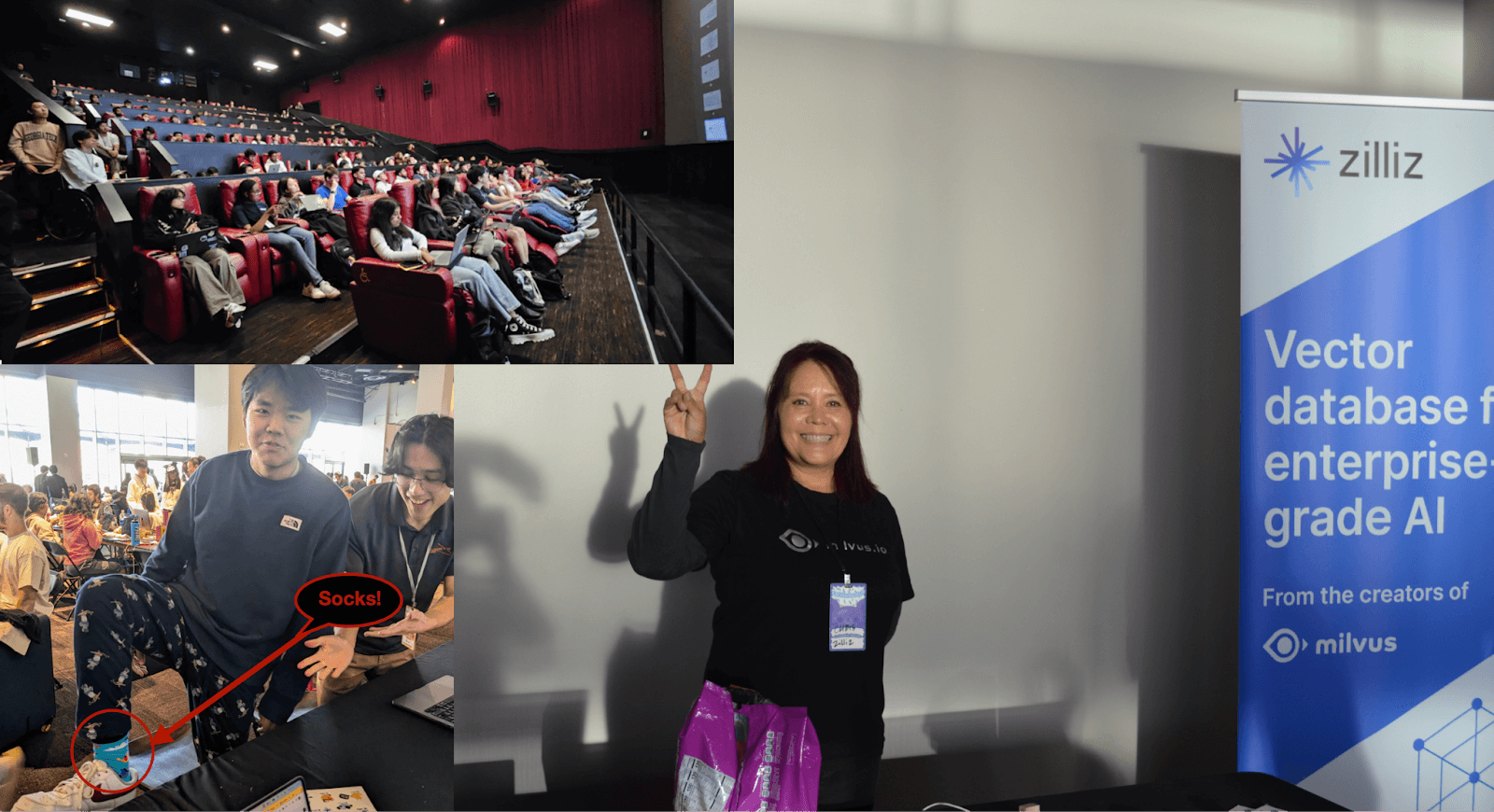

Thank you to all the CalHacks organizers! We were truly impressed by all the projects, and our sponsorship experience with Zilliz was fantastic (special thanks to Chris Churilo for getting us involved and to Chris Chou, the CalHacks sponsor organizer).

Top left: workshop in a movie theater. Right: Chris Churilo at the Zilliz sponsor table. Bottom left: Zilliz swag socks, image by author. All other image sources: CalHacks 2023.
