How to Build an Enterprise-Ready RAG Pipeline on AWS with Bedrock, Zilliz Cloud, and LangChain

Retrieval-Augmented Generation (RAG) has quickly become the backbone of enterprise-grade LLM solutions—nearly 90% of companies rely on it to ground LLMs with trusted, domain-specific knowledge. But the reality is more complicated: the RAG ecosystem has exploded with options. LLMs, embedding models, orchestration frameworks, and vector databases each come with their own integration patterns, leaving teams struggling to piece together solutions that work within enterprise constraints.

For organizations deeply invested in AWS, this challenge is even sharper. You can’t just rip out existing infrastructure or bypass established security and compliance policies. What you need is a RAG architecture that integrates seamlessly with your AWS environment, leverages enterprise-ready services, and remains future-proof.

In this tutorial, we’ll walk through building exactly that: an enterprise-ready RAG pipeline using AWS Bedrock (Nova + Titan models), Zilliz Cloud as the vector database, and LangChain for orchestration. By the end, you’ll have a practical, secure, and production-ready foundation you can deploy directly into your AWS stack—no compromises, no workarounds.

How We Design RAG for Scale

Before we dive into code, let's understand what we're building and why it solves real enterprise problems.

Traditional LLMs hit two major walls in enterprise settings. Their knowledge stops at training time—no access to your latest reports, customer data, or industry developments. Plus, they hallucinate frequently with no way to trace their reasoning. Not exactly what you want powering customer-facing applications.

RAG changes the game entirely. Instead of retraining massive models, you retrieve relevant information first, then generate responses based on that context. The benefits are immediate: 25-40% better accuracy, 60%+ fewer hallucinations, and complete response traceability. Your AI suddenly knows about last quarter's earnings and can cite its sources.

Our enterprise RAG system follows the proven MVC (Model-View-Controller) architecture pattern:

Model layer: Handles the heavy lifting—document processing, embeddings, vector storage, and LLM inference

View layer: Manages user interfaces and API responses

Controller layer: Orchestrates the workflow through Lambda functions and event handling

Five core engines power our implementation:

Query Processing Engine: Transforms user questions into optimized search queries

Vector Retrieval Engine: Finds relevant content using Zilliz Cloud's semantic search

Reranking Module: Prioritizes results using relevance and business rules

Generation Engine: Synthesizes context into accurate responses via AWS Bedrock

Event-Driven Backbone: Keeps everything loosely coupled through Amazon EventBridge

The Technology Stack We’ll Use

Building enterprise RAG isn’t just about picking the “best” individual tools—it’s about assembling technologies that integrate seamlessly into your AWS ecosystem. In this tutorial, we’ll combine AWS Lambda for compute, AWS Bedrock (Nova + Titan models) for embeddings and generation, Zilliz Cloud for vector search, and LangChain for orchestration.

AWS Lambda: Elastic Compute Foundation

Lambda gives us a serverless backbone: no servers to manage, instant scaling from zero to thousands of requests, and pay-per-execution pricing. Each RAG stage—document processing, vectorization, retrieval, and generation—runs as its own independent Lambda function. This design keeps the system modular, fault-tolerant, and cost-efficient.

AWS Bedrock: Flexible Model Hub

Bedrock provides access to 50+ serverless foundation models (plus 120+ marketplace options from Amazon, Anthropic, Meta, and more). Its killer feature? Model swapping without changing your application code. That means you can A/B test, optimize latency vs. cost, or adopt new models without re-architecting.

Zilliz Cloud: Most Performant Enterprise Vector Database

Built on open-source Milvus, Zilliz Cloud eliminates index tuning guesswork with AutoIndex, which dynamically adapts to your data. Its Cardinal search engine delivers up to 10x faster performance than traditional vector databases while scaling seamlessly to billions of vectors—critical for enterprise-scale deployments.

LangChain: Orchestration Layer

LangChain ties it all together—managing the flow between embedding, retrieval, and generation. With its AWS integrations and flexible abstractions, it keeps our architecture clean, modular, and production-ready.

With our stack in place, it’s time to get hands-on and start building.

Getting Started with Building an Enterprise-Ready RAG on AWS

Setting Up AWS CDK Infrastructure

We'll use AWS CDK (Cloud Development Kit) to define your entire stack—Lambda functions, API Gateway, S3 buckets, CloudFront—and deploy it consistently across environments. CDK lets you version and review your infrastructure just like application code.

# Core Lambda function configuration

lambda_function = lambda_.Function(

self, "RAGQueryFunction",

runtime=lambda_.Runtime.PYTHON_3_9,

memory_size=3008,

timeout=Duration.seconds(30),

reserved_concurrency=100,

environment={

"ZILLIZ_ENDPOINT": self.zilliz_endpoint,

"BEDROCK_MODEL_ID": "amazon.nova-pro-v1:0"

}

)

The CDK Bootstrap step creates foundational AWS resources: S3 buckets for deployment artifacts, IAM roles for permissions, and SSM parameters for configuration. You can then deploy separate stacks for development, staging, and production environments.

Connecting to Zilliz Cloud

Setting up Zilliz Cloud involves three steps: create a collection, optimize indexing, and establish connections. We're using 1024-dimensional vectors with HNSW indexing for the optimal balance between search accuracy and speed.

# Zilliz connection configuration

connections.connect(

alias="default",

uri=ZILLIZ_ENDPOINT,

token=ZILLIZ_TOKEN,

timeout=30

)

# Create optimized collection

collection = Collection("rag_collection")

index_params = {

"metric_type": "IP",

"index_type": "HNSW",

"params": {"M": 16, "efConstruction": 128}

}

Use partitions to organize data by document type or business area for better search performance when handling large document collections.

Streamlining Your Development Workflow

The Makefile provides unified commands for installing dependencies, running tests, deploying to different environments, and cleaning up resources.

# Standardized development process

install: # Install dependencies

test: # Run tests

lint: # Code checking

deploy: # Deploy application

clean: # Clean environment

CI/CD pipelines handle code quality checks, type validation, and automated testing.

Building the Core Features

Document Processing Pipeline

The pipeline processes documents through four stages: parsing, content cleaning, intelligent chunking, and metadata extraction.class DocumentProcessor:

class DocumentProcessor:

def process(self, document):

# Document Parsing

parsed_content = self.parse_document(document)

# Content cleaning and preprocessing

cleaned_text = self.clean_content(parsed_content)

# Intelligent chunking

chunks = self.chunk_text(cleaned_text,

chunk_size=1000,

overlap=100)

# Metadata extraction

metadata = self.extract_metadata(document)

return processed_chunks

Our chunking strategy uses semantic-aware segmentation that respects paragraph boundaries and keeps related information together. Chunk sizes automatically adjust based on document type.

Vectorization and Storage

AWS Bedrock's Titan Embeddings processes documents in batches for efficiency. Vector caching prevents re-computing embeddings for previously processed content.

class VectorProcessor:

def __init__(self):

self.embedding_model = TitanEmbeddings()

self.batch_size = 32

def vectorize_batch(self, texts):

# Batch vectorization

embeddings = self.embedding_model.embed_documents(texts)

# Vector normalization

normalized_embeddings = self.normalize_vectors(embeddings)

return normalized_embeddings

The tiered storage approach keeps frequently accessed vectors in high-speed storage while archiving older content to cost-optimized tiers.

Hybrid Retrieval Strategy

We combine vector similarity with keyword matching through a multi-stage process: initial broad retrieval, then precision ranking for the best results.

class HybridRetriever:

def retrieve(self, query, top_k=10):

# Vector retrieval

vector_results = self.vector_search(query, top_k*2)

# Keyword retrieval

keyword_results = self.keyword_search(query, top_k*2)

# Result fusion

merged_results = self.merge_results(

vector_results, keyword_results

)

# Reranking

reranked_results = self.rerank(query, merged_results)

return reranked_results[:top_k]

Cross-Encoder models handle reranking to identify the most relevant results. Context window optimization ensures you get the right amount of information for each query.

LangChain Integration

LangChain orchestrates the retrieval and generation process using proven RAG templates from LangChain Hub.

from langchain.chains import RetrievalQA

from langchain.retrievers import VectorStoreRetriever

# Build RAG chain

qa_chain = RetrievalQA.from_chain_type(

llm=BedrockLLM(model_id="amazon.nova-pro-v1:0"),

chain_type="stuff",

retriever=ZillizRetriever(

collection=collection,

search_params={"top_k": 5}

),

return_source_documents=True

)

# Execute query

result = qa_chain.invoke({"query": user_question})

Memory management maintains conversation context for multi-turn discussions. Streaming responses display results in real-time instead of waiting for complete answers. Error handling ensures graceful recovery when issues occur.

Serverless Architecture Deployment

Lambda Function Design Excellence

Each Lambda function follows single responsibility principles, focusing on specific business logic. Our core functions—document processing, vectorization, retrieval, and generation—deploy and scale independently for maximum flexibility.

# Query processing Lambda function

def lambda_handler(event, context):

try:

# Initialize connections (outside handler)

query = event['query']

# Vector retrieval

retriever = ZillizRetriever()

relevant_docs = retriever.search(query, top_k=5)

# LLM generation

llm = BedrockLLM()

response = llm.generate(query, relevant_docs)

return {

'statusCode': 200,

'body': json.dumps(response)

}

except Exception as e:

logger.error(f"Error: {str(e)}")

return error_response(e)

Memory allocation varies by function responsibility: query functions get 1GB, document processing functions receive 2GB for optimal cost-performance balance. Timeout configurations reflect operational needs: 30 seconds for queries, 300 seconds for document processing.

Reserved concurrency with 100 instances for query functions eliminates cold start impact on critical user-facing operations.

API Gateway Configuration

API Gateway serves as our unified system entry point, providing RESTful interfaces with comprehensive request routing. Security and stability come from carefully configured rate limiting, authentication, and CORS policies.

# API Gateway configuration

endpoints:

- path: /query

method: POST

integration: lambda

rate_limit: 1000/min

auth: IAM

- path: /documents

method: POST

integration: lambda

rate_limit: 100/min

auth: IAM

Intelligent caching at the API Gateway layer reduces backend load through strategic result caching. Request validation ensures input parameter integrity, protecting backend systems from invalid requests.

CloudFront CDN Optimization

CloudFront delivers global content distribution with sophisticated static resource caching, dramatically improving user access speeds worldwide. Our strategy incorporates dynamic-static separation, intelligent routing, and edge caching optimization.

# CloudFront cache configuration

cache_behaviors = [

{

'path_pattern': '/api/*',

'ttl': 300, # API response short-term cache

'headers': ['Authorization']

},

{

'path_pattern': '/static/*',

'ttl': 86400, # Static resources long-term cache

'compress': True

}

]

Edge location optimization ensures sub-100-millisecond response times for global users through strategic geographic distribution.

Performance Optimization

Cold Start Mitigation Strategies

Cold starts represent serverless architecture's primary challenge, addressed through our comprehensive multi-layer optimization approach. CloudWatch Events-based warm-up mechanisms maintain execution environment readiness for critical functions.

# Warm-up Lambda configuration

def warm_up_handler(event, context):

if event.get('source') == 'aws.events':

return {'statusCode': 200, 'body': 'warmed up'}

# Normal business logic

return business_logic(event, context)

Dependency optimization reduces cold start duration through minimized package sizes and lightweight library selection. Global scope connection pool initialization eliminates repeated connection overhead.

Provisioned Concurrency for critical functions completely eliminates cold start latency. Dynamic instance adjustment based on business patterns optimizes the performance-cost balance.

Intelligent Concurrent Processing

Our layered concurrency control applies different limits based on resource requirements. Lambda concurrency settings reflect function characteristics: lightweight query functions support high concurrency while resource-intensive document processing functions use controlled limits to prevent contention.

# Concurrency configuration example

functions_config:

query_function:

reserved_concurrency: 100

memory: 1024

document_processing:

reserved_concurrency: 10

memory: 2048

Asynchronous processing through SQS and SNS achieves task decoupling, preventing cascading synchronous call failures. Batch processing optimization aggregates similar tasks for enhanced resource utilization.

Multi-Tier Caching Architecture

Our three-tier caching system optimizes for different access patterns:

L1 Cache uses Lambda function memory (5-minute TTL, 100MB capacity), L2 Cache employs Redis cluster (1-hour TTL, 1GB capacity), and L3 Cache leverages S3 storage (1-day TTL, unlimited capacity).

class CacheManager:

def get(self, key):

# L1 cache query

if key in self.memory_cache:

return self.memory_cache[key]

# L2 cache query

value = self.redis_client.get(key)

if value:

self.memory_cache[key] = value

return value

# L3 cache query

return self.s3_cache.get(key)

Predictive cache warming leverages historical query patterns for proactive data loading. Intelligent cache invalidation maintains data consistency through both active updates and passive expiration strategies.

Strategic Cost Optimization

Fine-grained resource configuration drives on-demand billing optimization. Dynamic resource adjustment automatically tunes Lambda memory and timeout settings based on real-time load patterns.

Reserved Instances and Savings Plans for stable workloads deliver up to 72% compute cost savings. Spot Instances handle non-critical batch processing for additional cost reductions.

# Cost optimization configuration

cost_optimization = {

'lambda_memory_optimization': True,

'auto_scaling': True,

'reserved_capacity': {

'query_functions': 50,

'processing_functions': 5

}

}

Comprehensive Monitoring and Operations

Performance Metrics Monitoring

CloudWatch integration provides complete performance visibility across these critical metrics:

API Response Time maintains P50 < 1s, P95 < 3s, P99 < 5s, Success Rate stays > 99.9%, Concurrent Users receive real-time monitoring, Vector Retrieval Performance stays < 200ms, and LLM Generation Time remains < 2s.

# Custom metrics sending

def send_metrics(metric_name, value, unit='Count'):

cloudwatch = boto3.client('cloudwatch')

cloudwatch.put_metric_data(

Namespace='RAG/System',

MetricData=[{

'MetricName': metric_name,

'Value': value,

'Unit': unit,

'Timestamp': datetime.utcnow()

}]

)

Structured Log Analysis

JSON-formatted structured logging enables powerful querying and analysis capabilities. Comprehensive logs capture request IDs, timestamps, user context, performance metrics, and detailed error information.

Proactive Fault Management

AWS X-Ray provides end-to-end distributed tracing for rapid performance bottleneck identification. Automated alerting systems monitor key metrics with configurable thresholds and multi-channel notifications.

# Alert rule configuration

alerts = [

{

'metric': 'ResponseTime',

'threshold': 3000, # 3 seconds

'comparison': 'GreaterThanThreshold',

'action': 'sns_notification'

},

{

'metric': 'ErrorRate',

'threshold': 1, # 1%

'comparison': 'GreaterThanThreshold',

'action': 'auto_scaling'

}

]

Self-healing mechanisms leverage Lambda's automatic retry capabilities and Dead Letter Queue (DLQ) functionality for autonomous fault recovery. Capacity planning uses historical data and growth projections for proactive resource scaling.

From Tutorial to Production: Your RAG System is Ready

You've just built a complete enterprise RAG system that solves the integration headache stopping most RAG projects. This isn't another localhost demo—you have production-ready infrastructure running on AWS with document processing, vector storage through Zilliz Cloud, hybrid search, and LLM generation via Bedrock Nova models.

The modular design lets you deploy AI features immediately while iterating on components as needs evolve. You're building on proven enterprise patterns that scale with your business rather than experimental frameworks that break under load.

Ready to take this further? Deploy the system with your actual data and see what it can do. We'd love to hear about your experience and modifications.

Complete code repository: https://github.com/yincma/AWS-zilliz-RAG/tree/main

- How We Design RAG for Scale

- The Technology Stack We’ll Use

- Getting Started with Building an Enterprise-Ready RAG on AWS

- From Tutorial to Production: Your RAG System is Ready

Content

Start Free, Scale Easily

Try the fully-managed vector database built for your GenAI applications.

Try Zilliz Cloud for FreeKeep Reading

Why Teams Are Migrating from Weaviate to Zilliz Cloud — and How to Do It Seamlessly

Explore how Milvus scales for large datasets and complex queries with advanced features, and discover how to migrate from Weaviate to Zilliz Cloud.

Zilliz Cloud Update: Tiered Storage, Business Critical Plan, Cross-Region Backup, and Pricing Changes

This release offers a rebuilt tiered storage with lower costs, a new Business Critical plan for enhanced security, and pricing updates, among other features.

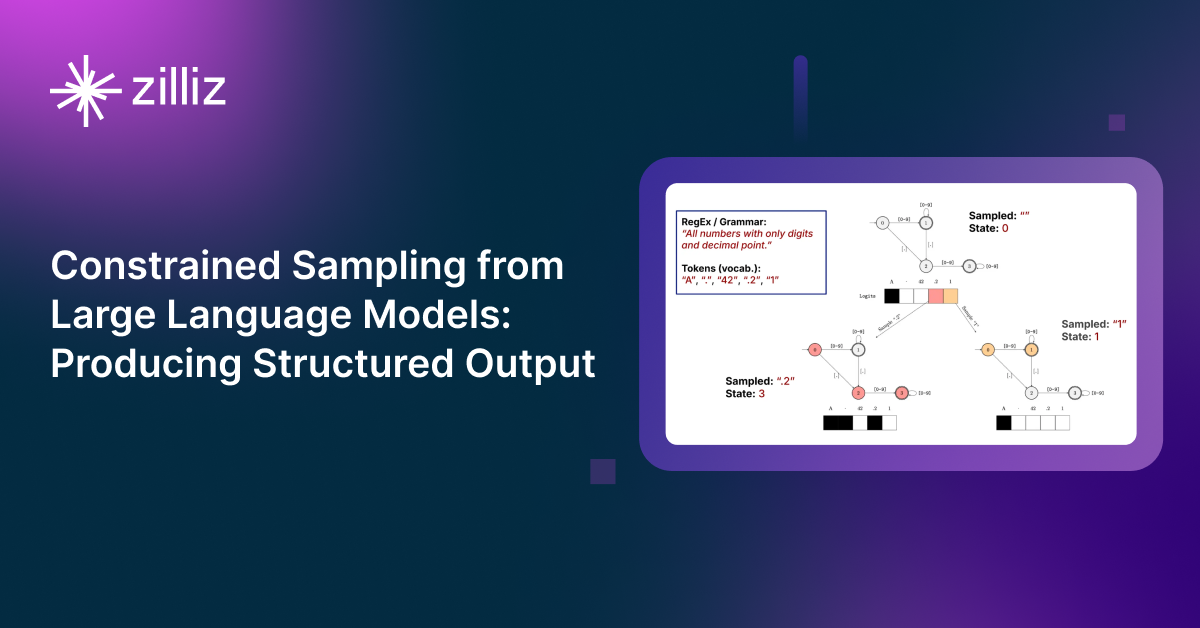

Producing Structured Outputs from LLMs with Constrained Sampling

Discuss the role of semantic search in processing unstructured data, how finite state machines enable reliable generation, and practical implementations using modern tools for structured outputs from LLMs.