Cosmos World Foundation Model Platform for Physical AI

When talking about GenAI, we mostly discuss its applications in non-physical fields, such as text summarization, Retrieval-Augmented Generation (RAG), internal chatbots, etc. This focus is understandable since the data required to train GenAI models for these tasks is relatively easy to obtain. There are abundant sources on the internet where we can access text, images, or other modalities of data to train GenAI applications.

On the other hand, GenAI applications in physical fields are far less mature. This is a missed opportunity, because applying GenAI in the physical world could be highly beneficial for performing dangerous, tedious, and repetitive tasks across various industries. For example, an advanced GenAI system could be used for autonomous driving or robotic manipulation.

In this article, we’ll discuss a platform developed by the NVIDIA team called Cosmos. This platform serves as a foundation for fine-tuning GenAI models for physical-world use cases. So, without further ado, let’s get started!

What is Cosmos and Why It’s Necessary

There are a couple of reasons why the development of GenAI applications in the physical field is not as rapid as in the non-physical field.

The first reason is related to the scaling of training data. To be useful in the physical world, an AI model needs to be trained to predict possible next actions based on given instructions and its current situation. This means that training data should include not only images but also videos. Moreover, these videos cannot be random. The input videos must showcase relevant observations and actions that help train the model to respond appropriately in specific situations. This type of data is not only difficult to find but also complex to process.

The second reason is related to safety. As we know, training an AI model in the physical world can pose serious risks to people and the environment. A single misprediction by an AI system trained for autonomous driving, for example, could lead to a major traffic accident. Therefore, it is preferable to train the model in a digital twin of the physical world, where it can safely interact with its surroundings.

Cosmos is a platform developed by NVIDIA to address these challenges. It enables us to train and fine-tune physical AI (i.e., AI models designed for physical-world applications) in a digital twin environment. This allows us to observe the real-world behavior of physical AI models digitally, without the need to train or deploy them in the actual physical world.

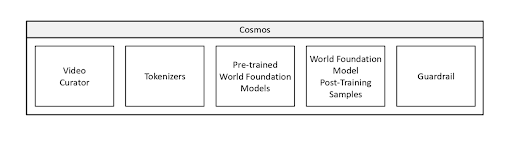

Figure 1. Components of Cosmos platform (Source)

The Cosmos platform consists of several components, each responsible for a specific stage in the training process of a physical AI model. These components include video curation, tokenization, pre-training, post-training, and guardrails.

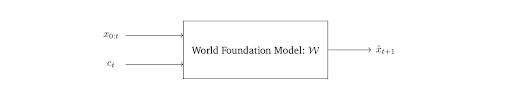

The outcome of running these components is a trained physical AI model. This model takes a sequence of visual observations (i.e., video) and a perturbation at a given time as inputs, producing a predicted future observation as the output. The input perturbation can take various forms, such as an action performed by the model or a text-based instruction.

By predicting future observations entirely within a digital environment, the model can be useful for various applications, including mimicking real-world behavior, policy observation and training, and synthetic video generation.

Figure 2. Inputs-output of Physical AI models trained with Cosmos platform (Source)
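The input/output contract described above can be written down as a small interface. The sketch below is a hypothetical Python rendering, not the Cosmos API: `WorldModel`, `Action`, `rollout`, and the toy `RepeatLastFrameModel` are illustrative names, and frames are tiny grayscale grids so the example runs on its own.

```python
from dataclasses import dataclass
from typing import List, Protocol, Union

Frame = List[List[float]]  # toy grayscale frame: rows of pixel intensities


@dataclass
class Action:
    """Hypothetical action perturbation, e.g. a camera or robot command."""
    name: str


Perturbation = Union[str, Action]  # either a text instruction or an action


class WorldModel(Protocol):
    def predict(self, past_frames: List[Frame], perturbation: Perturbation,
                num_future_frames: int) -> List[Frame]:
        ...


class RepeatLastFrameModel:
    """Trivial stand-in that ignores the perturbation and repeats the last frame."""

    def predict(self, past_frames: List[Frame], perturbation: Perturbation,
                num_future_frames: int) -> List[Frame]:
        if not past_frames:
            raise ValueError("at least one observed frame is required")
        last = past_frames[-1]
        return [[row[:] for row in last] for _ in range(num_future_frames)]


def rollout(model: WorldModel, frames: List[Frame], perturbation: Perturbation,
            steps: int) -> List[Frame]:
    """Append the model's predicted frames to the observed history."""
    return frames + model.predict(frames, perturbation, steps)
```

A real world model would replace `RepeatLastFrameModel.predict` with network inference. Keeping the perturbation as an explicit argument mirrors the idea that the same observation can lead to different futures depending on the action or instruction.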

Components of Cosmos Platform and How They Work

As mentioned previously, Cosmos contains several components: video curation, tokenizer, pre-training, post-training, and guardrails. In this section, we will discuss each of these components, starting with the video curation component.

Video Curation

The primary goal of this component is to curate and filter raw video data obtained from both proprietary and open-source datasets. As we all know, video data often contains a lot of noise, so it must be filtered to ensure the quality of the final dataset used to train the physical AI.

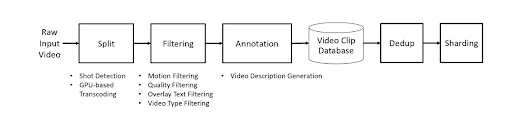

Figure 3. Workflow of video curation in Cosmos platform (Source)

To filter the data, the Cosmos platform follows several steps. The first step is the splitting process.

Cosmos uses a shot detection algorithm for video splitting: each raw video is cut at detected shot boundaries into clips of varying length, none of which contains a shot change. This ensures that each clip shows a single coherent scene that the model can learn from.

Based on the performance of different methods across several benchmarks, Cosmos uses TransNetV2 for the splitting process.
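TransNetV2 is a learned model, so it is not reproduced here. To show what "splitting at shot changes" means, the sketch below swaps in a much simpler classic technique: comparing intensity histograms of consecutive frames and starting a new clip when they differ sharply. Frames are flat lists of intensities in [0, 1], and the bin count and threshold are hypothetical values.

```python
from typing import List, Sequence


def histogram(frame: Sequence[float], bins: int = 8) -> List[float]:
    """Normalized intensity histogram of a frame with values in [0, 1]."""
    counts = [0] * bins
    for v in frame:
        idx = min(int(v * bins), bins - 1)  # clamp 1.0 into the last bin
        counts[idx] += 1
    return [c / len(frame) for c in counts]


def split_into_shots(frames: List[Sequence[float]], threshold: float = 0.5) -> List[List[int]]:
    """Group frame indices into shots, cutting where consecutive histograms differ a lot."""
    if not frames:
        return []
    shots = [[0]]
    prev = histogram(frames[0])
    for i in range(1, len(frames)):
        cur = histogram(frames[i])
        distance = sum(abs(a - b) for a, b in zip(prev, cur))  # L1 distance in [0, 2]
        if distance > threshold:
            shots.append([i])  # shot boundary: start a new clip
        else:
            shots[-1].append(i)
        prev = cur
    return shots
```

For example, three dark frames followed by two bright frames yield two clips, `[[0, 1, 2], [3, 4]]`. A learned detector like TransNetV2 is preferred in practice because histogram differences miss gradual transitions and can fire on fast lighting changes.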

After splitting, Cosmos applies several video filtering techniques to further refine the clips, including:

Motion filtering: Removes clips that are static or contain abrupt camera motion.

Visual quality filtering: Eliminates clips with low resolution or poor quality.

Text overlay filtering: Removes clips that contain text overlaid during post-processing.

Video type filtering: Filters out unwanted video types.
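Motion filtering can be illustrated with a crude proxy: the mean absolute pixel change between consecutive frames. The sketch below rejects clips whose average change is tiny (static) or whose largest single-step change is large (abrupt motion). Using raw pixel differences instead of a real motion estimator, and both threshold values, are assumptions for illustration only.

```python
from typing import List, Sequence


def frame_motion(a: Sequence[float], b: Sequence[float]) -> float:
    """Mean absolute pixel change between two frames (a crude motion proxy)."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def passes_motion_filter(frames: List[Sequence[float]],
                         min_mean_motion: float = 0.01,
                         max_step_motion: float = 0.3) -> bool:
    """Keep clips that move at least a little but never jump abruptly."""
    if len(frames) < 2:
        return False  # a single frame carries no motion information
    steps = [frame_motion(frames[i - 1], frames[i]) for i in range(1, len(frames))]
    mean_motion = sum(steps) / len(steps)
    if mean_motion < min_mean_motion:
        return False  # essentially static clip
    if max(steps) > max_step_motion:
        return False  # abrupt motion between two consecutive frames
    return True
```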

Next, the refined collection of clips is annotated using a Visual Language Model (VLM). The annotation result is a text description of each clip’s content. Based on benchmark results, the NVIDIA team selected VILA as the VLM for generating annotations.

Once annotation is complete, the next step is deduplication. Each video is transformed into an embedding using a video embedding model called InternVideo. The collection of embeddings is then clustered, and the pairwise distance within each cluster is computed to identify highly similar videos. If duplicates are found, the video with the higher resolution is selected for the training dataset.
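The deduplication step can be sketched as follows. The clustering itself (for example, k-means) is assumed to have already run, so each clip arrives with a cluster id; the embeddings stand in for InternVideo outputs, and the cosine-similarity threshold is a hypothetical value. Within each cluster, clips are visited from highest to lowest resolution, so whenever two clips are near-duplicates, the higher-resolution one is the one kept.

```python
import math
from collections import defaultdict
from typing import Dict, List, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def deduplicate(embeddings: List[Sequence[float]], cluster_ids: List[int],
                resolutions: List[int], threshold: float = 0.95) -> List[int]:
    """Return indices of clips to keep; among near-duplicates, keep the highest resolution."""
    clusters: Dict[int, List[int]] = defaultdict(list)
    for idx, cid in enumerate(cluster_ids):
        clusters[cid].append(idx)

    kept: List[int] = []
    for members in clusters.values():
        # Pairwise comparisons only happen inside a cluster, which keeps the cost manageable.
        members.sort(key=lambda i: resolutions[i], reverse=True)
        kept_here: List[int] = []
        for i in members:
            if not any(cosine_similarity(embeddings[i], embeddings[j]) >= threshold
                       for j in kept_here):
                kept_here.append(i)
        kept.extend(kept_here)
    return sorted(kept)
```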

Tokenizer

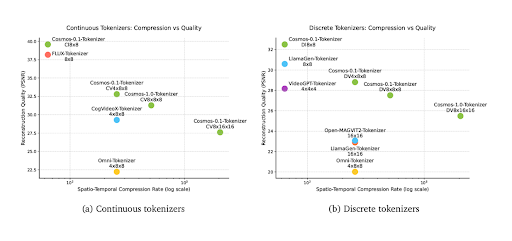

The tokenizer is a crucial component for transforming each video into its numerical representation, as AI models cannot process raw video directly. The tokenizer used in the Cosmos platform is a specialized tokenizer developed by NVIDIA called Cosmos-Tokenizer, which can produce both continuous and discrete tokenization for each video.

The ability to generate both continuous and discrete tokens is essential because, in the next stage, Cosmos offers two different model pre-training approaches: diffusion and autoregressive strategies. The diffusion strategy requires continuous tokens for training, while the autoregressive strategy relies on discrete tokens.
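To see how the two token types differ, consider how a continuous latent vector can be turned into a discrete token id. The sketch below uses finite scalar quantization (FSQ), chosen here as one simple scheme: each channel is squashed and rounded to a small number of levels, and the per-channel codes are combined into one integer. The level counts are illustrative assumptions. A diffusion model would skip this step and consume the continuous vector directly.

```python
import math
from typing import List, Sequence, Tuple

LEVELS: Tuple[int, ...] = (8, 5, 5)  # illustrative; vocabulary size = 8 * 5 * 5 = 200


def quantize(z: Sequence[float], levels: Sequence[int] = LEVELS) -> List[int]:
    """Map each continuous latent channel to one of levels[i] integer codes."""
    codes = []
    for value, n in zip(z, levels):
        bounded = (math.tanh(value) + 1) / 2    # squash to (0, 1)
        codes.append(round(bounded * (n - 1)))  # nearest of n evenly spaced levels
    return codes


def codes_to_token(codes: Sequence[int], levels: Sequence[int] = LEVELS) -> int:
    """Combine per-channel codes into a single token id (a mixed-radix number)."""
    token = 0
    for code, n in zip(codes, levels):
        token = token * n + code
    return token


def token_to_codes(token: int, levels: Sequence[int] = LEVELS) -> List[int]:
    """Invert codes_to_token."""
    codes = []
    for n in reversed(levels):
        codes.append(token % n)
        token //= n
    return codes[::-1]
```

For instance, `quantize([10.0, 10.0, -10.0])` gives `[7, 4, 0]`, which maps to token id 195. An autoregressive Transformer can then predict such ids with an ordinary softmax over the vocabulary.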

One key aspect of an effective tokenizer is its ability to compress high-dimensional data, such as video, into a lower-dimensional representation while minimizing quality loss. In this regard, Cosmos-Tokenizer outperforms other tokenizers such as CogVideoX-Tokenizer, FLUX-Tokenizer, and VideoGPT-Tokenizer.

Figure 4. Spatio-temporal compression rate - reconstruction quality performance between Cosmos-Tokenizer and other video tokenizers (Source)
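The compression factors in tokenizer names such as Cosmos-1.0-Tokenizer-CV8x8x8 and Cosmos-1.0-Tokenizer-DV8x16x16 (both used later in this article) translate directly into token counts. The sketch below reads the suffix as temporal x height x width compression and assumes that, as in many causal video tokenizers, the first frame is encoded on its own. The 121-frame, 704x1280 input is a hypothetical example.

```python
from typing import Tuple


def latent_shape(frames: int, height: int, width: int,
                 t_factor: int, s_factor: int) -> Tuple[int, int, int]:
    """Latent (time, height, width) grid for a causal tokenizer.

    Assumes the first frame is encoded on its own and the remaining
    frames are compressed in groups of t_factor.
    """
    if (frames - 1) % t_factor or height % s_factor or width % s_factor:
        raise ValueError("input size not divisible by the compression factors")
    return (1 + (frames - 1) // t_factor, height // s_factor, width // s_factor)


def num_tokens(shape: Tuple[int, int, int]) -> int:
    t, h, w = shape
    return t * h * w


continuous = latent_shape(121, 704, 1280, t_factor=8, s_factor=8)   # (16, 88, 160)
discrete = latent_shape(121, 704, 1280, t_factor=8, s_factor=16)    # (16, 44, 80)
```

Under these assumptions the continuous tokenizer yields 225,280 latent positions and the discrete one 56,320, four times fewer, because its spatial compression is 16x16 instead of 8x8.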

The secret behind Cosmos-Tokenizer’s superior performance lies in its design. It employs a causal temporal design, meaning that each frame in a video is tokenized using only itself and its preceding frames. In other words, the tokenization of a frame is not influenced by any future frames.
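Causality along time can be illustrated with a one-dimensional convolution that only looks backward. In this sketch each frame is reduced to a single scalar feature, and the sequence is padded on the left by repeating the first frame; both simplifications are assumptions made for illustration, not details of Cosmos-Tokenizer.

```python
from typing import List, Sequence


def causal_temporal_conv(signal: Sequence[float], kernel: Sequence[float]) -> List[float]:
    """Weighted sum over time where output[t] depends only on inputs t-k+1 .. t.

    The sequence is padded on the left (the past) only, by repeating the first
    value, so no future frame ever leaks into an earlier output.
    """
    k = len(kernel)
    padded = [signal[0]] * (k - 1) + list(signal)
    out = []
    for t in range(len(signal)):
        window = padded[t:t + k]  # covers original frames t-k+1 .. t
        out.append(sum(w * x for w, x in zip(kernel, window)))
    return out
```

Changing the last frame, for example from `4.0` to `100.0`, leaves every earlier output unchanged. This property lets the first frame be tokenized without any future context, which is what allows a single image to be treated as a one-frame video.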

For an input video, the frames are first grouped and downsampled by a factor of four using a two-level wavelet transform. For example, the first group consists of frames 1 to 4, the second group consists of frames 5 to 8, and so on. Next, a series of encoder blocks within the Cosmos-Tokenizer architecture processes the downsampled output in a causal manner to further reduce the video's dimensionality.
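A two-level wavelet transform halves the length twice, which is where the factor of four comes from. The sketch below applies a Haar wavelet along the time axis only, with each frame reduced to one scalar. Cosmos-Tokenizer's actual transform may also operate over the spatial dimensions, so treat this as an illustration of the downsampling arithmetic rather than the tokenizer's implementation.

```python
import math
from typing import List, Sequence, Tuple


def haar_step(x: Sequence[float]) -> Tuple[List[float], List[float]]:
    """One Haar level: split a sequence into half-length low-pass and high-pass parts."""
    if len(x) % 2:
        raise ValueError("length must be even")
    s = 1 / math.sqrt(2)
    low = [(x[i] + x[i + 1]) * s for i in range(0, len(x), 2)]
    high = [(x[i] - x[i + 1]) * s for i in range(0, len(x), 2)]
    return low, high


def two_level_haar(x: Sequence[float]) -> Tuple[List[float], List[float], List[float]]:
    """Two Haar levels: the coarse band is 4x shorter than the input."""
    low1, high1 = haar_step(x)
    low2, high2 = haar_step(low1)
    return low2, high2, high1
```

For eight frames, the coarse band has two entries, one per group of four frames. The Haar transform is orthogonal, so the detail bands retain the remaining information and the total energy is preserved; the actual compression happens in the encoder blocks that follow.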

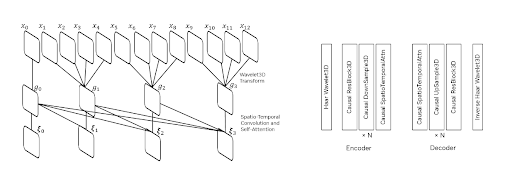

Figure 5. Temporal causality design of Cosmos-Tokenizer (Source)

Each encoder block in Cosmos-Tokenizer consists of a combination of residual layers, downsampling layers, and spatio-temporal attention layers, as shown in the visualization above. The output of the downsampling layer is passed to the spatio-temporal attention layer, which captures both spatial and temporal features of the video. The convolutions in these blocks are factorized: the input first goes through a 2D convolution layer that captures spatial information, followed by a temporal convolution layer that extracts temporal information.
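One reason to factorize a 3D convolution into a spatial 2D convolution followed by a temporal convolution is parameter count. The arithmetic below compares the two for a hypothetical layer with 256 input and output channels and kernel size 3. The channel counts are illustrative, not Cosmos-Tokenizer's configuration.

```python
def conv3d_weights(c_in: int, c_out: int, k: int) -> int:
    """Weights in a full k x k x k 3D convolution (bias ignored)."""
    return c_in * c_out * k ** 3


def factorized_conv_weights(c_in: int, c_out: int, k: int) -> int:
    """Weights in a 1 x k x k spatial conv followed by a k x 1 x 1 temporal conv."""
    spatial = c_in * c_out * k * k
    temporal = c_out * c_out * k
    return spatial + temporal


full = conv3d_weights(256, 256, 3)                 # 1,769,472
factorized = factorized_conv_weights(256, 256, 3)  # 786,432
```

When the input and output channel counts match, the factorized version needs 12 rather than 27 weights per channel pair for k = 3 (about 2.25x fewer) while still covering a 3 x 3 x 3 receptive field.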

To reconstruct the video from compressed tokens, the tokens are upsampled using a series of decoder blocks from Cosmos-Tokenizer. Each decoder block has a similar structure to the encoder block, as shown in the image above. The only difference is that instead of downsampling the input, the decoder upscales it to reconstruct the video.

Model Pre-Training I: Diffusion-based Model

The primary goal of model pre-training is to develop a base model that understands how to generate a future video that makes sense, given the current observation and previous video as inputs. There are two different pre-trained models in Cosmos: a diffusion-based model and an autoregressive-based model. In this section, we will discuss the diffusion-based model in detail.

There are two diffusion-based models in total—one with 7B parameters and another with 14B parameters. Both models are trained in a two-stage process.

In the first stage, the models receive a text prompt as input and generate a corresponding video based on that prompt. The model trained after this stage is called Text2World. In the second stage, the Text2World model is further fine-tuned using an additional input video representing the current observation. In other words, given both a text prompt and an input video, the model is trained to predict the most probable next video. The model trained after this second stage is called Video2World.

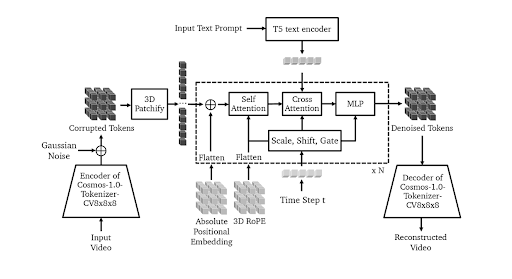

Figure 6. Pre-training architecture of Video2World diffusion-based model (Source)

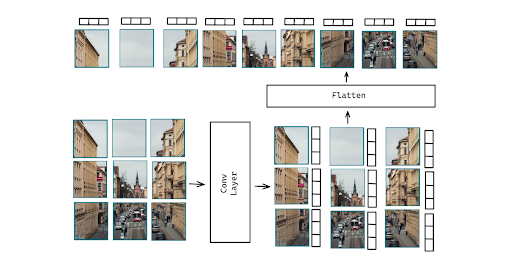

In the visualization above, we can see the overall setup for training Video2World. First, the input video representing the current observation is tokenized using the encoder of Cosmos-1.0-Tokenizer-CV8x8x8, producing continuous tokens of the input video. These tokens are then corrupted by adding Gaussian noise. Since the corrupted tokens are still high-dimensional, they need to be patchified and flattened so they can be processed by the model.

Figure 7. Example of image patchification process
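The corruption and patchification steps can be sketched on a single latent frame stored as a height x width grid of channel vectors. The simple additive noise x + sigma * epsilon, the patch size of 2, and the nested-list layout are assumptions for illustration; the exact noise schedule and patch sizes used by Cosmos are not covered here.

```python
import random
from typing import List

Latent = List[List[List[float]]]  # [height][width][channels] for one latent frame


def add_noise(latent: Latent, sigma: float, rng: random.Random) -> Latent:
    """Corrupt continuous tokens with Gaussian noise of standard deviation sigma."""
    return [[[v + sigma * rng.gauss(0.0, 1.0) for v in pixel] for pixel in row]
            for row in latent]


def patchify(latent: Latent, p: int) -> List[List[float]]:
    """Split an H x W x C grid into non-overlapping p x p patches, each flattened to a vector."""
    h, w = len(latent), len(latent[0])
    if h % p or w % p:
        raise ValueError("height and width must be divisible by the patch size")
    patches = []
    for i in range(0, h, p):
        for j in range(0, w, p):
            vec: List[float] = []
            for di in range(p):
                for dj in range(p):
                    vec.extend(latent[i + di][j + dj])
            patches.append(vec)
    return patches


noisy_patches = patchify(add_noise([[[0.0, 0.0]] * 4] * 4, 0.5, random.Random(0)), 2)
```

A 4 x 4 grid with 2 channels becomes 4 patches of length 8. Each flattened patch then acts as one input vector to the Transformer, which shortens the sequence the attention layers must process.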

The tensors resulting from the flattening process pass through a series of Transformer blocks. In each Transformer block, a flattened positional embedding is added to the input to inject absolute positional information into each token and to help reduce training loss. Next, the output is processed by a series of attention layers, including self-attention and cross-attention layers.

In the attention layers, relative spatio-temporal position information is injected into the input using 3D RoPE, and the model is also conditioned on the diffusion time step (t), which indicates how much noise the input contains. To enable the model to generate videos conditioned on a text prompt, a text embedding generated by the T5 text encoder is incorporated into the input within the cross-attention layer. Finally, the output of the cross-attention layer is passed through a linear layer, and this process repeats through multiple Transformer blocks.
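3D RoPE extends rotary position embeddings to three axes. A common way to do this, assumed here, is to split each query or key vector into three slices and apply ordinary 1D RoPE to each slice using the token's time, height, and width index respectively. The even split, the base of 10000, and the function names are illustrative assumptions, not Cosmos's exact configuration.

```python
import math
from typing import List, Sequence, Tuple


def rope_1d(x: Sequence[float], pos: int, base: float = 10000.0) -> List[float]:
    """Rotate consecutive pairs of x by angles proportional to pos (standard RoPE)."""
    d = len(x)
    if d % 2:
        raise ValueError("dimension must be even")
    out = list(x)
    for i in range(0, d, 2):
        theta = pos * base ** (-i / d)  # lower frequencies for later pairs
        c, s = math.cos(theta), math.sin(theta)
        out[i] = x[i] * c - x[i + 1] * s
        out[i + 1] = x[i] * s + x[i + 1] * c
    return out


def rope_3d(x: Sequence[float], pos: Tuple[int, int, int],
            split: Tuple[int, int, int]) -> List[float]:
    """Apply RoPE separately to the time, height, and width slices of x."""
    if sum(split) != len(x):
        raise ValueError("split must cover the whole vector")
    out: List[float] = []
    start = 0
    for p, size in zip(pos, split):
        out.extend(rope_1d(x[start:start + size], p))
        start += size
    return out
```

Because each slice is only rotated, the dot product between a rotated query and a rotated key depends on the offset between their (t, h, w) positions rather than on the absolute positions, which is why RoPE is described as encoding relative position.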

The last Transformer block produces the final denoised tokens. Next, these denoised tokens need to be upsampled and decoded, which is done by the decoder of Cosmos-1.0-Tokenizer-CV8x8x8. As the final result, we obtain the predicted future video.

Model Pre-Training II: Autoregressive-based Model

The second pre-trained model is the autoregressive-based model. There are two variants of this model: one with 5B parameters and another with 13B parameters. The pre-training process also consists of two stages. In the first stage, the model is trained solely to predict future video frames based on the current video observation. In the second stage, the model is further pre-trained by incorporating text as an additional input. This means that the final model takes both an input video and a text prompt as inputs and produces a future video as output.

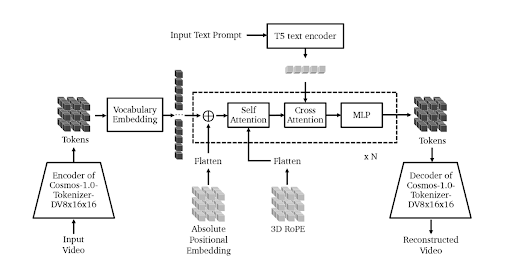

Figure 8. Pre-training architecture of Video2World autoregressive-based model (Source)

The pre-training process is quite similar to that of the diffusion-based model discussed in the previous section. First, the input video is transformed into tokens. However, unlike the diffusion-based approach, the autoregressive model requires discrete tokens instead of continuous ones. Therefore, the pre-training process uses Cosmos-1.0-Tokenizer-DV8x16x16 to convert the input video into discrete tokens. These discrete tokens are then fed into an embedding layer, which transforms them into embeddings that are ready to be processed by the next component.

Next, the input goes through a series of Transformer blocks, where each block consists of a self-attention layer, a cross-attention layer, and a linear layer. In each Transformer block, an absolute positional embedding is first added to the input to provide absolute positional information for each token.

Within the self-attention layer, 3D RoPE is used to inject information about the relative position of tokens. Meanwhile, textual information is integrated into the input within the cross-attention layer. Finally, the input passes through a linear layer at the end. This process repeats through multiple Transformer blocks until the final block is reached.
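Cross-attention is how textual information enters the video tokens: each video token acts as a query, and the text embeddings supply the keys and values. The sketch below is plain scaled dot-product attention; it omits the learned query, key, and value projections and multiple heads that a real Transformer layer would have, and it is not taken from the Cosmos code.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def softmax(scores: List[float]) -> List[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries: List[Vector], keys: List[Vector],
                    values: List[Vector]) -> List[List[float]]:
    """Scaled dot-product attention: video queries attend over text keys and values."""
    d = len(queries[0])
    outputs = []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        out = [0.0] * len(values[0])
        for w, v in zip(weights, values):
            for i, x in enumerate(v):
                out[i] += w * x  # weighted average of text value vectors
        outputs.append(out)
    return outputs
```

Each output is a weighted average of the text value vectors, with more weight on the text tokens whose keys best match the video token's query.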

The last Transformer block produces the final token representation. To convert these tokens into a predicted video, they must be upsampled and decoded using the decoder of Cosmos-1.0-Tokenizer-DV8x16x16.
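What makes this model autoregressive is that the discrete tokens are produced one at a time, each conditioned on all tokens before it. The loop below shows greedy decoding with a hypothetical `toy_model` that returns scores over a 10-token vocabulary. A real model would also condition on the text prompt and may sample from the scores instead of always taking the highest one.

```python
from typing import Callable, List, Sequence

# Any function mapping the token history to one score per vocabulary entry.
NextTokenScores = Callable[[Sequence[int]], List[float]]


def generate(model: NextTokenScores, prompt_tokens: Sequence[int],
             num_new_tokens: int) -> List[int]:
    """Greedy autoregressive decoding: append the highest-scoring token each step."""
    tokens = list(prompt_tokens)
    for _ in range(num_new_tokens):
        scores = model(tokens)
        next_token = max(range(len(scores)), key=scores.__getitem__)
        tokens.append(next_token)
    return tokens[len(prompt_tokens):]


def toy_model(history: Sequence[int], vocab_size: int = 10) -> List[float]:
    """Hypothetical stand-in that always favors (last token + 1) mod vocab_size."""
    favored = (history[-1] + 1) % vocab_size
    return [1.0 if t == favored else 0.0 for t in range(vocab_size)]
```

Here the prompt tokens play the role of the tokenized input video, and the generated ids would be passed to the tokenizer's decoder to obtain the predicted frames.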

Model Post-Training

As mentioned in the previous section, the primary goal of model pre-training is to develop a base or foundation model capable of generating coherent future videos based on previous video frames and the current observation. To specialize this model in a specific area, post-training techniques, such as fine-tuning, can be applied.

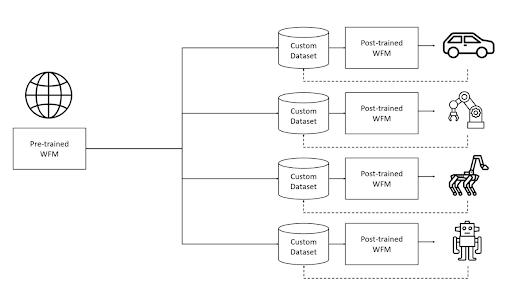

Figure 9. Illustration of how pre-trained physical AI models can be used for specific tasks with post-training (Source)

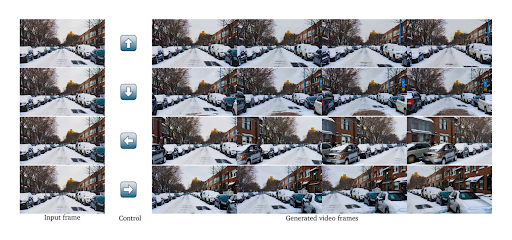

Both diffusion-based and autoregressive-based models can be fine-tuned to excel in predicting videos for specific tasks, such as camera control, robotic manipulation, and autonomous driving. Let’s take camera control as an example. Below is an example of frame-by-frame results after the model has been fine-tuned on a camera control task.

Figure 10. Example of video generated from diffusion-based model fine-tuned on a camera control task (Source)

The model fine-tuned for camera control is able to generate realistic videos based on an initial video and a control prompt, such as turning left or right or moving forward or backward. The predicted videos can be used to simulate and evaluate the behavior of an AI system before it is actually deployed in the real physical world.

Guardrails

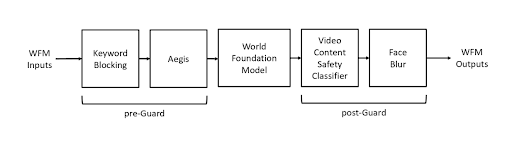

Since the model is capable of generating video content, it is crucial to implement safety measures to regulate its usage. The NVIDIA team has introduced two safety stages: pre-guard and post-guard.

Figure 11. Guardrail workflow implemented in Cosmos platform (Source)

In the pre-guard stage, an LLM-based guardrail is used to detect harmful text prompts that users might submit. If a prompt is deemed unsafe or harmful, the system prevents video generation and displays an error message instead.

Meanwhile, the post-guard stage monitors both the input video submitted to the model and the video generated by the model. To determine whether the video content is safe, the NVIDIA team uses the SigLIP model to extract embeddings from each frame, followed by classification using a simple MLP classifier.
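The post-guard classifier can be pictured as a small MLP on top of per-frame embeddings. In the sketch below the embeddings are plain lists standing in for SigLIP features, the weights are hand-set placeholders rather than trained values, and the rule that one unsafe frame rejects the whole video is an assumption chosen for illustration.

```python
import math
from typing import List, Sequence, Tuple

# (w1, b1, w2, b2): weight matrices as one row per output unit, plus bias vectors.
Params = Tuple[List[List[float]], List[float], List[List[float]], List[float]]


def linear(x: Sequence[float], weights: List[List[float]], bias: List[float]) -> List[float]:
    """Compute W x + b."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def unsafe_probability(embedding: Sequence[float], w1, b1, w2, b2) -> float:
    """Two-layer MLP: frame embedding -> probability that the frame is unsafe."""
    hidden = [max(0.0, h) for h in linear(embedding, w1, b1)]  # ReLU
    return sigmoid(linear(hidden, w2, b2)[0])


def video_is_safe(frame_embeddings: List[Sequence[float]], params: Params,
                  threshold: float = 0.5) -> bool:
    """Treat the video as unsafe if any single frame crosses the threshold."""
    return all(unsafe_probability(e, *params) < threshold for e in frame_embeddings)
```

Keeping the classifier this small is practical because the heavy lifting is done by the frozen embedding model; only the MLP needs to be trained on labeled safe and unsafe frames.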

Another aspect of the post-guard stage is the face blur filter. In this step, a face detection model called RetinaFace locates human faces in each frame, and those regions are blurred to protect privacy.
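Once a detector such as RetinaFace has returned face bounding boxes, the blurring step itself is simple. This sketch takes the boxes as given (the detector is not shown) and replaces each box with its mean intensity, a crude stand-in for a proper blur. The `(top, left, bottom, right)` box format is an assumption.

```python
from typing import List, Sequence, Tuple

Box = Tuple[int, int, int, int]  # (top, left, bottom, right); bottom and right are exclusive


def blur_regions(frame: List[List[float]], boxes: Sequence[Box]) -> List[List[float]]:
    """Return a copy of the frame with each box replaced by its mean intensity."""
    out = [row[:] for row in frame]
    height, width = len(frame), len(frame[0])
    for top, left, bottom, right in boxes:
        # Clip the box to the frame so detections at the border are handled safely.
        top, left = max(0, top), max(0, left)
        bottom, right = min(height, bottom), min(width, right)
        if top >= bottom or left >= right:
            continue  # box lies entirely outside the frame
        pixels = [frame[r][c] for r in range(top, bottom) for c in range(left, right)]
        mean = sum(pixels) / len(pixels)
        for r in range(top, bottom):
            for c in range(left, right):
                out[r][c] = mean
    return out
```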

Experimentation Result of Diffusion and Autoregressive-based Models

The results of both diffusion-based and autoregressive-based models after the pre-training stage have been qualitatively assessed. In this section, we’ll review some of the outputs generated by both models, starting with the diffusion-based model.

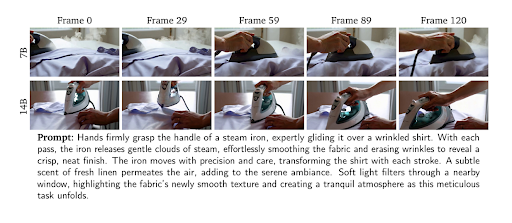

The diffusion-based model comes in two variants: 7B and 14B parameters. Overall, both models produce high-quality videos that align well with text prompt conditioning.

Figure 12. Example of generated videos from Video2World diffusion-based model with given instruction prompt (Source)

As shown in the visualization above, although both variants generate high-quality videos, the 14B model captures finer motion details within each frame more effectively. Additionally, it excels at producing videos with complex scenes and stable motion. You can view actual videos generated by the diffusion-based model on the Cosmos website.

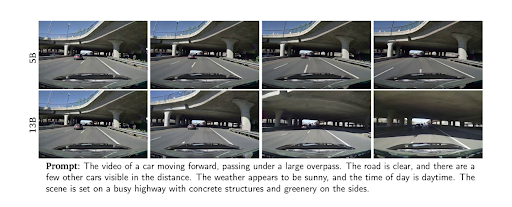

The same trend is observed in the autoregressive-based model, which also has two variants: 5B and 13B parameters. Overall, the 13B model produces sharper videos and smoother motion compared to the 5B model.

Figure 13. Example of generated videos from Video2World diffusion-based model with given instruction prompt (Source)

Although the autoregressive-based model performs well overall, it can still generate incoherent scenes during video generation. One notable failure case is the sudden appearance of random objects in the frame, as illustrated below. However, the failure rate of the 13B model across 100 test inputs is observed to be less than 2%, indicating that these errors are rare occurrences.

Figure 14. Example of a failure case from Video2World autoregressive-based model (Source)

To determine the models’ suitability for representing physical AI in the real world, the NVIDIA team conducted quantitative evaluations based on two key aspects: 3D consistency and alignment with the laws of physics.

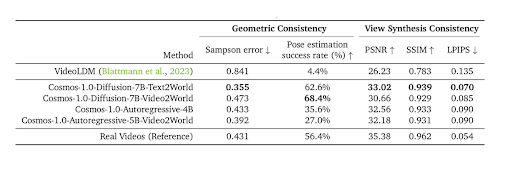

3D Consistency

Maintaining strong 3D consistency is crucial since the primary goal of the model is to simulate the behavior of physical AI in a real 3D environment. To assess 3D consistency, two sets of metrics were observed: geometric consistency (Sampson error, Pose estimation) and view synthesis consistency (PSNR, SSIM, LPIPS)

Figure 15. Evaluation of 3D consistency on Cosmos models (Source)
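PSNR, one of the view synthesis metrics listed above, is computed from the mean squared error (MSE) between a reference image and a generated or rendered one: PSNR = 10 log10(MAX^2 / MSE), where MAX is the largest possible pixel value. A minimal sketch over flattened grayscale pixels with values in [0, 1] (an assumption made for simplicity):

```python
import math
from typing import Sequence


def psnr(reference: Sequence[float], generated: Sequence[float], max_value: float = 1.0) -> float:
    """Peak signal-to-noise ratio in dB between two equally sized images."""
    if len(reference) != len(generated):
        raise ValueError("images must have the same number of pixels")
    mse = sum((a - b) ** 2 for a, b in zip(reference, generated)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10 * math.log10(max_value ** 2 / mse)
```

Higher is better: every 10 dB increase corresponds to a tenfold reduction in mean squared error. An error of 0.1 on every pixel, for example, gives 20 dB.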

The results for both sets of metrics indicate that both diffusion- and autoregressive-based models achieve significant improvements in 3D consistency compared to NVIDIA’s baseline model. This suggests that the models can generate videos whose scenes remain geometrically consistent in 3D, which makes them valuable for real-world simulations.

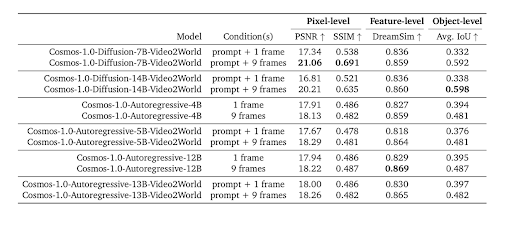

Alignment with the Laws of Physics

In addition to 3D consistency, the generated videos must also adhere to the laws of physics to ensure that the behavior of the physical AI is realistic. To evaluate this, the NVIDIA team compared simulated real videos with videos of the same scenarios generated by the model. Several metrics were used at different levels: pixel-level (PSNR, SSIM), feature-level (DreamSim), and object-level (IoU)

Figure 16. Evaluation of physical alignments on Cosmos models (Source)
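At the object level, IoU measures how well an object's region in a generated frame overlaps the same object's region in the reference video. Assuming each frame has already been reduced to a binary mask of the object (the segmentation step is not shown), the computation looks like this. Returning 1.0 when both masks are empty is a convention chosen here.

```python
from typing import Sequence


def mask_iou(pred: Sequence[Sequence[bool]], target: Sequence[Sequence[bool]]) -> float:
    """Intersection over union of two binary object masks of the same shape."""
    inter = union = 0
    for pred_row, target_row in zip(pred, target):
        for p, t in zip(pred_row, target_row):
            inter += int(p and t)
            union += int(p or t)
    return inter / union if union else 1.0
```

Unlike PSNR, IoU ignores appearance and only checks whether the object ends up in the right place, which makes it a direct test of whether simulated motion, such as a falling or rolling object, follows the expected trajectory.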

Based on the pixel-level results, the diffusion-based model generally produces higher-quality videos compared to the autoregressive-based model. However, all models still face challenges in fully adhering to the laws of physics. As a result, improvements in data curation and model design are already on NVIDIA’s roadmap to enhance future model capabilities.

Conclusion

The Cosmos platform developed by NVIDIA represents a significant advancement in the integration of GenAI into the physical world. By addressing key challenges such as data scalability and safety concerns, Cosmos enables the training and fine-tuning of physical AI models in a controlled digital twin environment. With a pipeline spanning video curation, tokenization, model pre-training, post-training, and guardrails, Cosmos facilitates the development of AI models that can predict future observations and generate realistic video outputs for a range of physical applications, including robotics, autonomous driving, and camera control.

While the models are able to generate high-quality videos with good 3D consistency, a major challenge remains: further improvements in data curation and model design are needed to improve the models’ adherence to the laws of physics in the generated videos.
