Model Context Protocol (MCP): The Universal Interface for AI Tools


Introduction

Are you tired of building custom integrations every time you want your AI assistant to interact with a new application? Do you wish there was a standardized way for AI models to communicate with various software tools? The fragmentation of AI tool integrations has been a significant roadblock to creating truly capable AI assistants that can seamlessly work across multiple applications. This is where the Model Context Protocol (MCP) comes in – revolutionizing how AI interacts with software.

What is Model Context Protocol?

Model Context Protocol (MCP) is an open standard that functions as a universal interface allowing AI models to connect to various applications and data sources consistently. Think of MCP as "USB-C for AI integrations" – a common language that enables AI assistants to communicate with different software tools without requiring custom code for each integration.

Before MCP, integrating an AI assistant with external tools was like having appliances with different plugs and no universal outlet. Each integration required its own custom implementation, creating a fragmented ecosystem where scaling was difficult and maintenance was a nightmare. MCP solves this by offering one common protocol for all these interactions, dramatically simplifying the integration landscape.

How It Works

The Architecture of MCP

MCP follows a client-server architecture specifically designed for AI-to-software communication:

MCP Clients: Components within AI assistants (like Claude or Cursor) that maintain connections to MCP servers. The client handles communication and presents server responses to the AI model.

MCP Servers: Lightweight adapters that run alongside specific applications or services. An MCP server exposes an application's functionality in a standardized way. It translates the structured requests the AI issues (a tool name plus JSON arguments) into specific actions in the application.

The MCP Protocol: The language and rules that clients and servers use to communicate. It defines message formats, how servers advertise available commands, how AI issues commands, and how results are returned.

Services (Applications/Data Sources): The actual apps, databases, or systems that MCP servers interface with. These can be local (e.g., file system, running applications) or remote (e.g., cloud services like GitHub or Slack).
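
To make the client-server exchange concrete, here is a minimal Python sketch of the messages an MCP client might write to a local server over the stdio transport. It assumes the JSON-RPC 2.0 envelope and the initialize, notifications/initialized, tools/list and tools/call methods from the MCP specification. The protocol version string, the client name, and the search_issues tool are hypothetical placeholders.

```python
import json

def make_request(request_id, method, params=None):
    """Build a JSON-RPC 2.0 request; the id lets the client match the server's response."""
    message = {"jsonrpc": "2.0", "id": request_id, "method": method}
    if params is not None:
        message["params"] = params
    return message

def make_notification(method):
    """Build a JSON-RPC 2.0 notification; notifications carry no id and get no reply."""
    return {"jsonrpc": "2.0", "method": method}

def frame_for_stdio(message):
    """Serialize one message as a single line (json.dumps without indent emits no raw newlines)."""
    return json.dumps(message) + "\n"

# Illustrative session: handshake, tool discovery, then one tool call.
session = [
    make_request(1, "initialize", {
        "protocolVersion": "2025-03-26",  # example version string
        "capabilities": {},
        "clientInfo": {"name": "example-client", "version": "0.1.0"},
    }),
    make_notification("notifications/initialized"),
    make_request(2, "tools/list"),
    make_request(3, "tools/call", {
        "name": "search_issues",  # hypothetical tool exposed by the server
        "arguments": {"query": "login bug"},
    }),
]

wire = "".join(frame_for_stdio(m) for m in session)
print(wire, end="")
```

In a real deployment the host launches the server as a subprocess, writes these lines to its stdin, and reads responses from its stdout; the official SDKs handle this framing for you.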

Key Components of MCP Servers

MCP servers perform several critical functions that enable seamless AI-application interactions:

Tool Discovery: MCP servers describe what actions or capabilities the application offers, so the AI knows what it can request.

Command Parsing: The AI model turns the user's natural-language request into a structured tool call (a tool name plus arguments); the server parses that call and translates it into precise application commands or API calls.

Response Formatting: Servers take output from the application and format it in a way the AI model can understand (usually as text or structured data).

Error Handling: Servers catch exceptions or invalid requests and return useful error messages for the AI to adjust its approach.
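
These four responsibilities map naturally onto a single request handler. The following Python sketch is a hypothetical, dependency-free server core, not code from any SDK. It answers tools/list (discovery), dispatches tools/call to a handler (command parsing), wraps the output as text content (response formatting), and reports failures (error handling). The get_weather tool and its stub data are invented for illustration; the result shape (content, isError) and the error codes follow the MCP specification and JSON-RPC 2.0.

```python
import json

# Hypothetical tool registry: the metadata MCP advertises plus a Python handler.
TOOLS = {
    "get_weather": {
        "description": "Return the current temperature for a city.",
        "inputSchema": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
        # Stub handler with made-up data; a real server would call the application's API.
        "handler": lambda args: {"city": args["city"], "temp_c": 21},
    },
}

def ok(request_id, result):
    return {"jsonrpc": "2.0", "id": request_id, "result": result}

def error(request_id, code, message):
    return {"jsonrpc": "2.0", "id": request_id, "error": {"code": code, "message": message}}

def handle(request):
    """Dispatch one JSON-RPC request to the matching MCP method and build the response."""
    request_id = request.get("id")
    method = request.get("method")
    params = request.get("params", {})

    if method == "tools/list":
        # Tool discovery: advertise name, description, and schema, never the handler.
        tools = [
            {"name": name, "description": t["description"], "inputSchema": t["inputSchema"]}
            for name, t in TOOLS.items()
        ]
        return ok(request_id, {"tools": tools})

    if method == "tools/call":
        name = params.get("name")
        tool = TOOLS.get(name)
        if tool is None:
            # Unknown tool: a protocol-level error (JSON-RPC "invalid params").
            return error(request_id, -32602, f"Unknown tool: {name}")
        try:
            output = tool["handler"](params.get("arguments", {}))
        except Exception as exc:
            # Failure inside the tool: returned as a result so the model can read it and adapt.
            return ok(request_id, {"content": [{"type": "text", "text": f"Error: {exc!r}"}], "isError": True})
        # Response formatting: serialize the application's output as text content.
        return ok(request_id, {"content": [{"type": "text", "text": json.dumps(output)}], "isError": False})

    return error(request_id, -32601, f"Method not found: {method}")
```

The two error paths are deliberately different. An unknown tool is a protocol error, while a failure inside the tool comes back as a normal result with isError set, so the model can read the message and retry with different arguments.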

Technical Implementation

At a technical level, MCP leverages several important components:

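Transport Layer: MCP is transport-agnostic. The specification defines standard IO streams (stdin/stdout) for local integrations and an HTTP-based transport, which can stream messages via Server-Sent Events, for remote connections. Custom transports such as WebSockets are also possible.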

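JSON-RPC and JSON Schema: MCP messages are JSON-RPC 2.0 requests, responses, and notifications, and each tool describes its parameters with a JSON Schema (its inputSchema). Together they give the AI a structured description of what it can call and with which arguments.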

APIs: MCP servers typically leverage existing application APIs to execute commands requested by the AI.
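
Because each tool's parameters are described with JSON Schema, a server can reject malformed calls before they reach the application's API. The sketch below checks only a small, assumed subset of JSON Schema (required keys, primitive types, and additionalProperties set to false); the create-collection style schema is a hypothetical example. A production server would more likely use a complete validator such as the jsonschema Python package.

```python
# Maps the JSON Schema type names this sketch understands to Python types.
_TYPES = {
    "string": str, "integer": int, "number": (int, float),
    "boolean": bool, "object": dict, "array": list,
}

def validate_arguments(arguments, schema):
    """Check tool arguments against a small subset of JSON Schema; returns a list of problems."""
    if not isinstance(arguments, dict):
        return ["arguments must be a JSON object"]
    problems = []
    for key in schema.get("required", []):
        if key not in arguments:
            problems.append(f"missing required argument '{key}'")
    properties = schema.get("properties", {})
    for key, value in arguments.items():
        if key not in properties:
            if schema.get("additionalProperties") is False:
                problems.append(f"unexpected argument '{key}'")
            continue
        type_name = properties[key].get("type")
        expected = _TYPES.get(type_name)
        if expected is None:
            continue  # types outside this subset are not checked
        wrong_type = not isinstance(value, expected)
        # bool is a subclass of int in Python, so True/False must not pass as numbers.
        if isinstance(value, bool) and type_name in ("integer", "number"):
            wrong_type = True
        if wrong_type:
            problems.append(f"argument '{key}' should be of type {type_name}")
    return problems

# Hypothetical schema for a tool that creates a vector collection.
schema = {
    "type": "object",
    "properties": {"name": {"type": "string"}, "dimension": {"type": "integer"}},
    "required": ["name", "dimension"],
    "additionalProperties": False,
}
print(validate_arguments({"name": "images", "dimension": "512"}, schema))
```

Returning a list of readable problems, rather than raising on the first one, lets the server report everything wrong with a call in a single error message the model can act on.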

Comparison

MCP vs. Function Calling

While both MCP and function calling (like OpenAI's function calling) enable AI to use tools, they differ significantly:

| Feature | MCP | Function Calling |
|---|---|---|
| Standardization | Open standard usable by any AI model | Often specific to a particular AI provider |
| Scope | Universal protocol for connecting to any app | More limited, typically for predefined functions |
| Discovery | Dynamic tool discovery | Functions typically predefined in prompt |
| Integration | One protocol for all tools | Custom integration for each tool |
| Ecosystem | Growing ecosystem of shared servers | Less standardized sharing of implementations |
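
The two are not mutually exclusive: a host application can take the tool list an MCP server advertises, hand it to a model through that provider's function-calling interface, and route the model's chosen call back to the server. This Python sketch assumes OpenAI's Chat Completions tool format (a {"type": "function", "function": {...}} wrapper, with call arguments returned as a JSON string); the create_collection tool is hypothetical.

```python
import json

def mcp_tool_to_openai_function(tool):
    """Convert one tool from an MCP tools/list result into an OpenAI-style tool definition."""
    return {
        "type": "function",
        "function": {
            "name": tool["name"],
            "description": tool.get("description", ""),
            # Both sides describe parameters with JSON Schema, so the schema carries over unchanged.
            "parameters": tool.get("inputSchema", {"type": "object", "properties": {}}),
        },
    }

def openai_call_to_mcp_request(tool_call, request_id):
    """Turn a model's function call back into an MCP tools/call request."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {
            "name": tool_call["function"]["name"],
            # The provider returns arguments as a JSON-encoded string.
            "arguments": json.loads(tool_call["function"]["arguments"]),
        },
    }

mcp_tools = [{
    "name": "create_collection",  # hypothetical tool
    "description": "Create a vector collection.",
    "inputSchema": {
        "type": "object",
        "properties": {"name": {"type": "string"}, "dimension": {"type": "integer"}},
        "required": ["name", "dimension"],
    },
}]
openai_tools = [mcp_tool_to_openai_function(t) for t in mcp_tools]
```

Because both formats use JSON Schema for parameters, the translation is mostly renaming fields; the MCP side adds the discovery step and a shared way to execute the call.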

MCP vs. Plugins/Extensions

Traditional plugin systems differ from MCP in several key ways:

| Feature | MCP | Traditional Plugins |
|---|---|---|
| Focus | Designed specifically for AI interaction | Designed for direct human interaction |
| Language | Natural language as the interface | Often requires learning plugin-specific commands |
| Flexibility | One AI can use any MCP-compatible tool | Plugins often model-specific or app-specific |
| Implementation | Standardized protocol | Varied implementation approaches |

Benefits and Challenges

Benefits of MCP

Reduced Integration Complexity: Instead of building N×M custom integrations (N tools times M AI models), you build one MCP server per tool and one MCP client per AI application, so the effort grows as N+M.

Future-Proof Investments: Building an MCP server for your application ensures compatibility with any AI that speaks MCP, not just today's models.

Dynamic Tool Discovery: AI can discover at runtime which operations a tool supports, rather than relying on hardcoded capabilities.

Composable Workflows: MCP enables AI to chain actions across multiple tools, creating sophisticated workflows that span applications.

Vendor-Agnostic Development: You're not locking into one AI provider's ecosystem or toolchain.
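
The integration arithmetic behind the first benefit can be checked with made-up counts: point-to-point connectors grow with the product of tools and AI applications, while MCP components grow with their sum. The numbers in this Python snippet are purely illustrative.

```python
def integrations_needed(num_tools, num_ai_apps):
    """Return (point-to-point connectors, MCP components) for the given counts."""
    point_to_point = num_tools * num_ai_apps  # one bespoke connector per (tool, AI app) pair
    with_mcp = num_tools + num_ai_apps        # one MCP server per tool + one MCP client per AI app
    return point_to_point, with_mcp

print(integrations_needed(10, 5))  # (50, 15)
```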

Challenges and Limitations

Security Concerns: MCP gives AI capabilities within your system, requiring careful management of permissions and authentication.

Fragmented Adoption: Not all AI platforms or models currently support MCP out-of-the-box.

Reliability Issues: AI might misuse tools or get confused if the task is complex, requiring careful prompt engineering.

Performance Overhead: Each MCP call is an external operation that might be slower than the AI's internal inference.

Lack of Multi-step Transactionality: Current MCP implementations don't support atomic operations across multiple actions.

Four Powerful MCP Tools for Your Agents

At Zilliz, we've been building MCP tools that strengthen the memory side of agent infrastructure—projects that help models understand codebases, interact with data, and ground their reasoning in actual context.

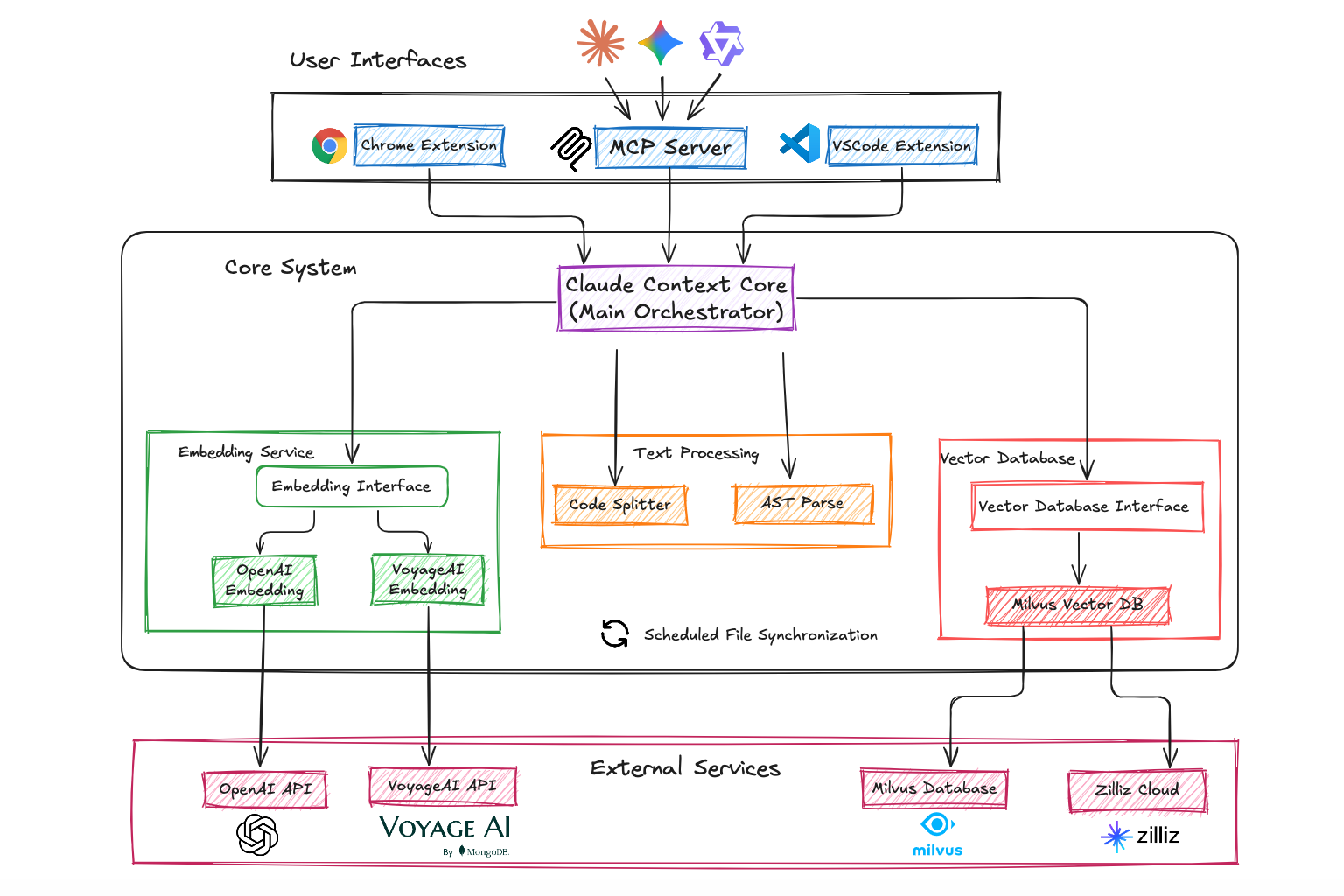

1. Claude Context: Adds Semantic Code Search to Claude Code

Most AI coding tools, such as Claude Code and Gemini CLI, have a context problem and struggle with real-world codebases because they don’t actually see your code. Claude Context changes that. Claude Context (previously known as Code Context) is an open-source MCP plugin that adds semantic code search to Claude Code and many other AI coding agents, turning your entire repo into a searchable, navigable memory space.

![mcp1](mcp1.png)

GitHub repo: https://github.com/zilliztech/code-context

Tutorial & Blog:

2. Zilliz MCP Server: Natural Language Access to VectorDB Operations

Instead of forcing developers to write vector database queries manually, this Zilliz MCP server lets you interact with Zilliz Cloud conversationally, directly inside AI-native environments like Claude, Cursor, and Windsurf. No switching between interfaces or tools, and no hand-written queries. You can ask questions like “show me where this function is used” or “Create a vector collection with 512 dimensions for image embeddings,” and the server handles the rest. It becomes a memory interface over your file system, shell, and dev environment, safely exposed through MCP.

![mcp2](mcp2.png)

GitHub repo: https://github.com/zilliztech/zilliz-mcp-server

Blog & Demos: https://zilliz.com/blog/introducing-zilliz-mcp-server

Step-by-step guide: https://github.com/zilliztech/zilliz-mcp-server/blob/master/docs/USERGUIDE.md

3. Milvus MCP Server: Open-Source Vector Memory

Milvus MCP Server brings open-source Milvus into the MCP ecosystem. It exposes collection management, vector search, and data ingestion as structured tools that LLMs can discover and use. It turns Milvus into a first-class tool in the model’s action space, enabling natural-language RAG pipelines, semantic search over embeddings, and conversational data interaction—all without needing to write raw code or manage SDKs manually.

Whether you’re building internal agents or embedding Milvus access into IDEs, this server adds a robust vector memory layer to your agent architecture.

👉GitHub repo: github.com/zilliztech/mcp-server-milvus
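
Under the hood, a vector search tool ranks stored embeddings by their similarity to a query embedding. The Python sketch below is a brute-force stand-in for what Milvus does with approximate indexes at scale. The vector_search tool name, its schema, and the toy three-dimensional vectors are hypothetical and do not reflect mcp-server-milvus's actual tool definitions.

```python
import math

# Hypothetical metadata a server might advertise for this tool via tools/list.
SEARCH_TOOL = {
    "name": "vector_search",
    "description": "Return the stored items most similar to a query vector.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "query_vector": {"type": "array", "items": {"type": "number"}},
            "limit": {"type": "integer"},
        },
        "required": ["query_vector"],
    },
}

def cosine(a, b):
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def vector_search(collection, query_vector, limit=3):
    """Brute-force top-k by cosine similarity over an in-memory {id: vector} dict."""
    scored = [(cosine(vec, query_vector), item_id) for item_id, vec in collection.items()]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [{"id": item_id, "score": round(score, 4)} for score, item_id in scored[:limit]]

# Toy embeddings; real ones would come from an embedding model.
docs = {"intro.md": [0.9, 0.1, 0.0], "install.md": [0.1, 0.9, 0.1], "faq.md": [0.7, 0.3, 0.1]}
print(vector_search(docs, [1.0, 0.0, 0.0], limit=2))
```

Scanning every vector is fine for a toy example but grows linearly with the collection, which is why a vector database with dedicated indexes sits behind the tool in practice.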

4. Milvus SDK Code Helper: Always-Current Code Generation

AI coding assistants often generate outdated code because they're trained on old documentation. Milvus SDK Code Helper is an MCP server that uses RAG with MCP to ensure code suggestions are always grounded in the latest official guidance. When your AI suggests Milvus code, it's using real-time context from current documentation, not stale training data.

Read this blog for more details or get started with this Code Helper following this user guide.

FAQs

1. What's required to implement MCP in my application?

Implementing MCP requires creating an MCP server for your application that exposes its functionality through the protocol. This typically involves identifying your application's control points (API, scripting interface, etc.), using an MCP SDK to scaffold the server, defining available tools, implementing command parsing and execution, and setting up communication channels. Anthropic and others provide SDKs in multiple languages (TypeScript, Python, Java, etc.) to simplify this process.
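
The "defining available tools" step is largely bookkeeping: each tool needs a name, a description, and a JSON Schema for its inputs. SDKs such as the official Python SDK provide a decorator that derives this from type hints; the stdlib-only sketch below imitates the idea without using any SDK. REGISTRY, tool, and create_collection are hypothetical names, and only a few primitive types are mapped.

```python
import inspect

# Python annotation -> JSON Schema type name (a deliberately small subset).
_JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}
REGISTRY = {}

def tool(func):
    """Register a function as a tool, deriving its inputSchema from the signature."""
    properties, required = {}, []
    for name, param in inspect.signature(func).parameters.items():
        # Unannotated or unmapped parameters fall back to "string" in this sketch.
        properties[name] = {"type": _JSON_TYPES.get(param.annotation, "string")}
        if param.default is inspect.Parameter.empty:
            required.append(name)  # no default value means the caller must supply it
    REGISTRY[func.__name__] = {
        "name": func.__name__,
        "description": inspect.getdoc(func) or "",
        "inputSchema": {"type": "object", "properties": properties, "required": required},
        "handler": func,
    }
    return func

@tool
def create_collection(name: str, dimension: int, metric: str = "COSINE"):
    """Create a vector collection (hypothetical example tool)."""
    return f"created {name} with dim={dimension}, metric={metric}"
```

Keeping the schema derived from the function signature means the advertised tool definition cannot drift out of sync with the code that executes it.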

2. How does MCP handle security and permissions?

Currently, MCP security is primarily implemented at the server level rather than in the protocol itself. Server developers must build in authentication, authorization, and permission checks. Many current implementations are designed for local, trusted environments and may use API keys or tokens for remote scenarios. The community recognizes the need for standardized security mechanisms in future versions of the protocol.
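
Since authorization lives in the server, one simple pattern is to check the caller's credentials against the requested tool before executing anything. The Python sketch below assumes a hypothetical static mapping from API tokens to permitted tool names. A real server would load secrets from configuration and decide how the token arrives (for example, an HTTP Authorization header on a remote transport).

```python
import hmac

# Hypothetical configuration: which tools each API token may call.
TOKEN_PERMISSIONS = {
    "token-readonly": {"search"},
    "token-admin": {"search", "delete_collection"},
}

def authorize(token, tool_name):
    """Return None if the call is allowed, otherwise an error message for the caller."""
    if not token:
        return "invalid or missing token"
    for known_token, allowed_tools in TOKEN_PERMISSIONS.items():
        # compare_digest avoids short-circuit comparison, reducing timing leaks on the secret.
        if hmac.compare_digest(token.encode(), known_token.encode()):
            if tool_name in allowed_tools:
                return None
            return f"token not permitted to call '{tool_name}'"
    return "invalid or missing token"
```

A tools/call handler would run this check first and refuse the call whenever it returns a message, so destructive tools stay out of reach of read-only credentials.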

3. Can any AI model use MCP, or is it limited to specific ones?

MCP is designed as an open standard that any AI model can implement. Claude (by Anthropic) has native support, and tools like Cursor and Windsurf have added support. For other models, there are adapters being developed (such as LangChain's MCP integration). As adoption grows, we can expect more AI platforms to support MCP directly.

4. How does MCP compare to OpenAI's function calling?

While both enable AI to use tools, MCP is an open, universal standard designed to connect any AI to any application through a consistent protocol. Function calling is typically provider-specific and less standardized across the ecosystem. MCP also provides richer capabilities for tool discovery and more flexibility in integration patterns.

5. What's on the horizon for future MCP development?

The future of MCP will likely include formalized security mechanisms (standardized authentication/authorization), MCP gateways (unified endpoints for multiple services), optimized AI agents specifically designed for MCP interactions, more applications with native MCP support, and enhanced agent reasoning for complex multi-tool tasks. As the ecosystem matures, we can expect MCP to become a fundamental layer in AI-software interactions.
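
A gateway of the kind described above could, in its simplest form, merge the tool lists of several MCP servers and route each call to the server that owns the tool. The Python sketch below is a hypothetical design, not an existing product: it prefixes each tool name with its server name (the double-underscore separator is an arbitrary choice) so identically named tools do not collide.

```python
def aggregate_tools(servers):
    """Merge per-server tools/list results into one namespaced list plus a routing table."""
    merged, routes = [], {}
    for server_name, tools in servers.items():
        for tool in tools:
            public_name = f"{server_name}__{tool['name']}"
            merged.append({**tool, "name": public_name})
            routes[public_name] = (server_name, tool["name"])
    return merged, routes

def route_call(routes, request):
    """Map a gateway-level tools/call request to (backend server, request to forward).

    Raises KeyError for unknown tools; a real gateway would return a JSON-RPC error instead.
    """
    server_name, original_name = routes[request["params"]["name"]]
    forwarded = {**request, "params": {**request["params"], "name": original_name}}
    return server_name, forwarded
```

The AI sees one endpoint with one tool list, while each backend server still receives the tool name it originally advertised.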

