Supercharging AI Agents: How Rexera Transforms Real Estate Closings with Zilliz Cloud

40% increase in retrieval accuracy through hybrid search

50% lower cost by eliminating Elasticsearch and switching from self-hosted vector databases to Zilliz Cloud’s fully managed platform

Real-time transaction processing enabled by Zilliz Cloud's exceptional latency performance

Reduced complexity and management overhead by eliminating the need for a separate Elasticsearch implementation

Working with Zilliz Cloud has been transformative for our AI agent architecture. The hybrid search capability alone delivered a 40% accuracy improvement, and the scalability means we never worry about performance, even during the highest traffic periods. It's been essential to our continued growth.

Sasidhar Janaki

About Rexera

Rexera isn’t just using AI agents—they’ve operationalized them at scale to handle one of the most complex, document-heavy workflows in real estate: the closing process. With AI agents like Iris managing over 10,000 tasks daily and processing millions of pages per month, Rexera has turned what was once a manually intensive and delay-prone operation into an intelligent, near-real-time system.

But scaling AI agents in production isn’t just about LLM prompts—it’s about architecture. Real-time document intelligence requires fast, accurate retrieval and autonomous coordination across agents that extract, validate, and communicate—all powered by constantly updated data and infrastructure that can spike to millions of simultaneous requests without falling over. That’s why Rexera turned to Zilliz Cloud, a vector database purpose-built for AI workloads, to power the core of its agent ecosystem.

From Iris, which extracts and validates critical data, to Mia and Ria, which automate communications, Rexera’s agents rely on Zilliz Cloud to deliver the most relevant context—instantly—through hybrid search. The result? A 40% boost in retrieval accuracy, elimination of brittle infrastructure, and a major leap forward in how real estate transactions are closed.

Trusted by 350+ real estate firms, Rexera is redefining how deals get done—with AI that actually works at scale.

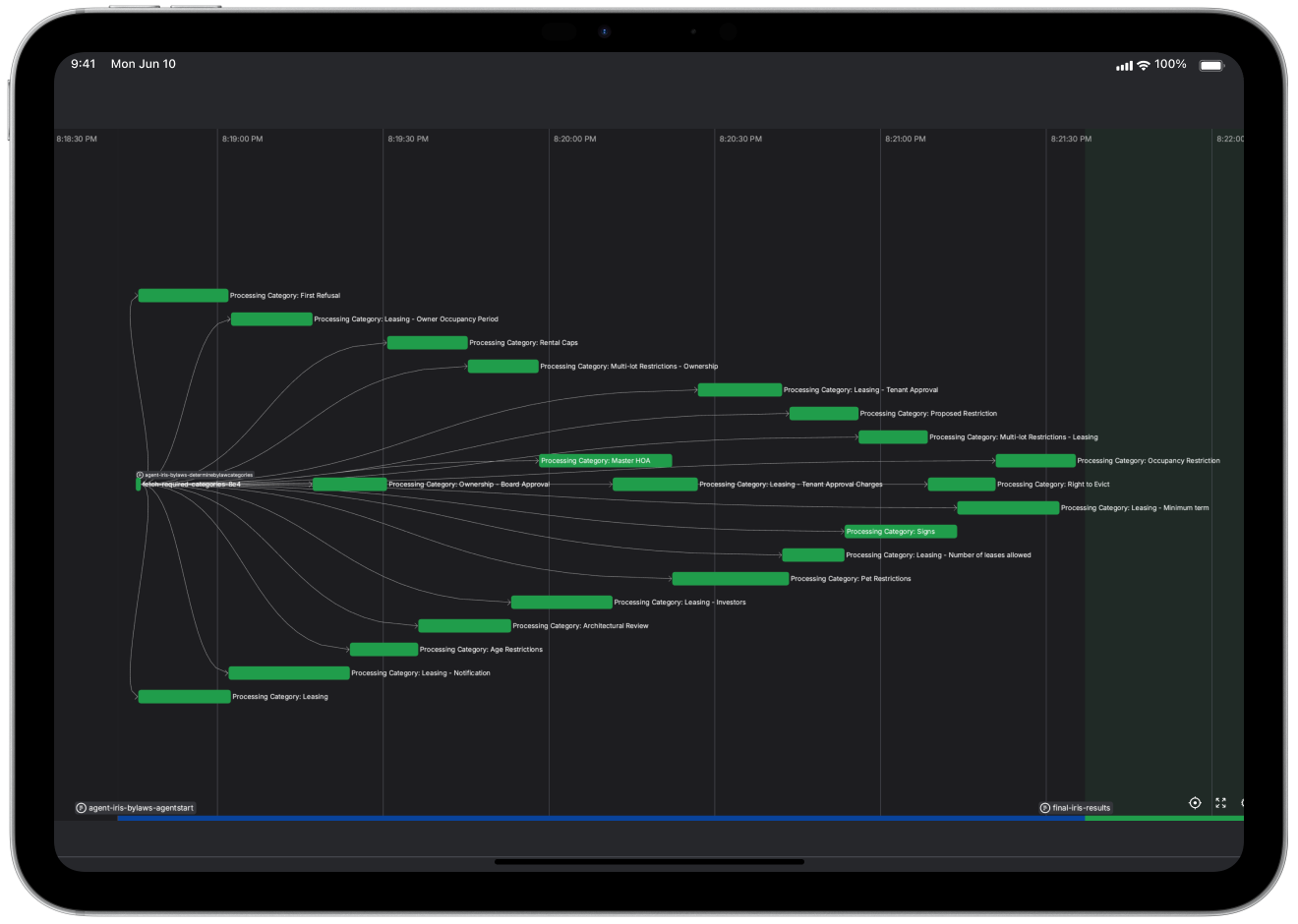

Figure: Rexera's AI agents

The Challenge: Scaling Real-Estate Document Intelligence

As Rexera's business expanded, they encountered several critical challenges in their document processing pipeline.

When Rexera first launched, they processed relatively simple documents—invoices and certificates typically under 10 pages, which could be fed directly into large language models (LLMs). However, business growth soon required them to analyze comprehensive real estate documentation spanning thousands of pages, far exceeding the context window limitations of available LLMs.

Their initial vector database solution, Deep Lake, stored embeddings in S3 buckets but suffered from significant performance bottlenecks. The system downloaded entire vector sets to the server before performing similarity calculations, which added unacceptable latency for a growing business. Rexera urgently needed a new vector search solution.

Rexera next explored self-hosting Milvus on their Kubernetes cluster, and it worked very well. However, they recognized the operational complexity of maintaining a production vector database environment. Managing the infrastructure required specialized engineering resources, particularly for elastic scaling during traffic spikes when millions of requests arrived simultaneously. Their data retention policies, which required deleting embeddings for completed transactions, added further maintenance work. The team realized they needed a solution that would eliminate these infrastructure management burdens while maintaining high performance.

"We were facing latency issues and scaling challenges with our previous solutions," explains Sasidhar Janaki, a Senior Software Engineer at Rexera who has been with the company since its inception. "When traffic spiked with millions of customer requests, our self-hosted infrastructure couldn't keep up, and document retrieval was taking too long."

The Solution: Powering AI Agents with Zilliz Cloud Vector Database

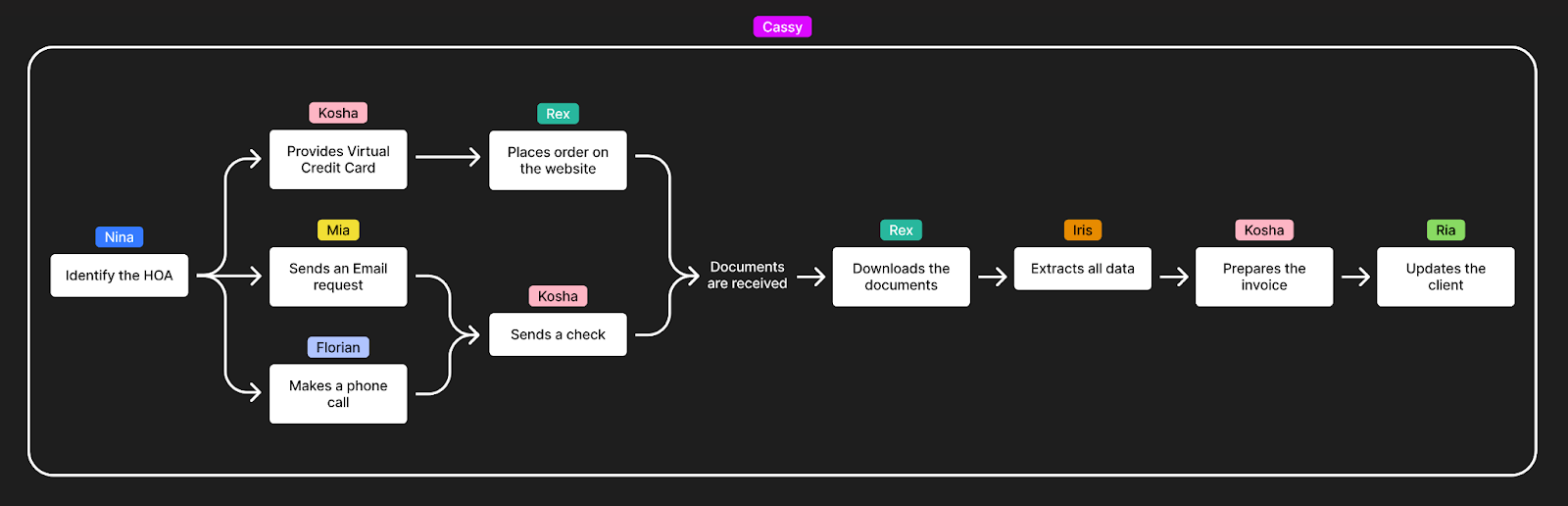

After a comprehensive evaluation of multiple vector database options, including Weaviate and Chroma, Rexera selected Zilliz Cloud as the foundation for their document intelligence system primarily for its superior latency, scalability, and hybrid search capabilities. Zilliz Cloud became the central knowledge repository powering multiple AI agents in their ecosystem, such as:

Iris – Extracts and validates data from complex real estate documentation

Mia – Generates intelligent email auto-replies based on communication history

Ria – Provides automated SMS responses to keep all parties informed

Figure: An example of how Rexera's AI agents work together to revolutionize HOA document acquisition

For each transaction, Rexera generates embeddings for thousands of pages using OpenAI's text-embedding-3-large model and stores them in Zilliz Cloud. These embeddings are continuously updated as document content changes, ensuring the system always works with the most current information.
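One way to keep embeddings current as pages change is to give every page a deterministic primary key and upsert on it, so a re-embedded page overwrites its old vector instead of creating a duplicate. The sketch below illustrates that idea under stated assumptions: `PageRecord`, `page_key`, `fake_embed`, and the in-memory `store` are hypothetical stand-ins (a real pipeline would call the embedding model and the vector database's upsert operation), not Rexera's actual code.

```python
import hashlib
from dataclasses import dataclass


@dataclass
class PageRecord:
    pk: str              # deterministic primary key
    order_id: str        # transaction the page belongs to
    page_no: int
    text: str
    vector: list[float]  # embedding of `text`


def page_key(order_id: str, doc_name: str, page_no: int) -> str:
    """Stable key derived from the page's identity, not its content."""
    raw = f"{order_id}/{doc_name}/{page_no}".encode("utf-8")
    return hashlib.sha256(raw).hexdigest()[:32]


def fake_embed(text: str, dim: int = 8) -> list[float]:
    """Placeholder for a real embedding call; returns a toy vector."""
    digest = hashlib.sha256(text.encode("utf-8")).digest()
    return [b / 255.0 for b in digest[:dim]]


def upsert_page(store: dict[str, PageRecord], order_id: str,
                doc_name: str, page_no: int, text: str) -> PageRecord:
    """Insert the page, or overwrite the previous version with the same key."""
    record = PageRecord(
        pk=page_key(order_id, doc_name, page_no),
        order_id=order_id,
        page_no=page_no,
        text=text,
        vector=fake_embed(text),
    )
    store[record.pk] = record
    return record


store: dict[str, PageRecord] = {}
upsert_page(store, "order-1", "hoa_statement.pdf", 1, "Balance due: $1,200")
upsert_page(store, "order-1", "hoa_statement.pdf", 1, "Balance due: $0")
print(len(store))  # the revised page replaced the earlier version
```

Because the key depends only on the order, document, and page number, a content change produces a new vector under the same key, which is what keeps retrieval results aligned with the latest document state.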

When an agent needs to retrieve information, it uses Zilliz Cloud's hybrid search capability to find the most relevant content in Rexera's vast document repository. This retrieved context is then processed by multiple LLMs, including OpenAI GPT models and Claude, with answers ranked to determine whether human intervention is required.

The introduction of hybrid search within Zilliz Cloud was particularly transformative, as it allowed Rexera to combine vector similarity with traditional keyword search in a single platform, eliminating their previous need to maintain two separate search infrastructures.
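To make the idea of combining vector and keyword results concrete, here is a minimal sketch of reciprocal rank fusion (RRF), a common way to merge two ranked lists; Milvus offers an RRF-based reranker among its hybrid search options. The source does not say which fusion strategy Rexera uses, so the function name, the sample page IDs, and the constant `k = 60` are illustrative assumptions.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Merge ranked ID lists; each hit scores 1 / (k + rank), with rank starting at 1."""
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties broken by ID for deterministic output.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


# Hypothetical results for one query from the two retrieval paths.
vector_hits = ["page-12", "page-40", "page-7"]    # semantic similarity
keyword_hits = ["page-40", "page-3", "page-12"]   # exact term matches

fused = reciprocal_rank_fusion([vector_hits, keyword_hits])
print(fused)
```

Pages that appear in both lists (here `page-40` and `page-12`) accumulate score from each and rise above pages found by only one retrieval path, which is the intuition behind hybrid search improving on either method alone.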

Implementation and Architecture

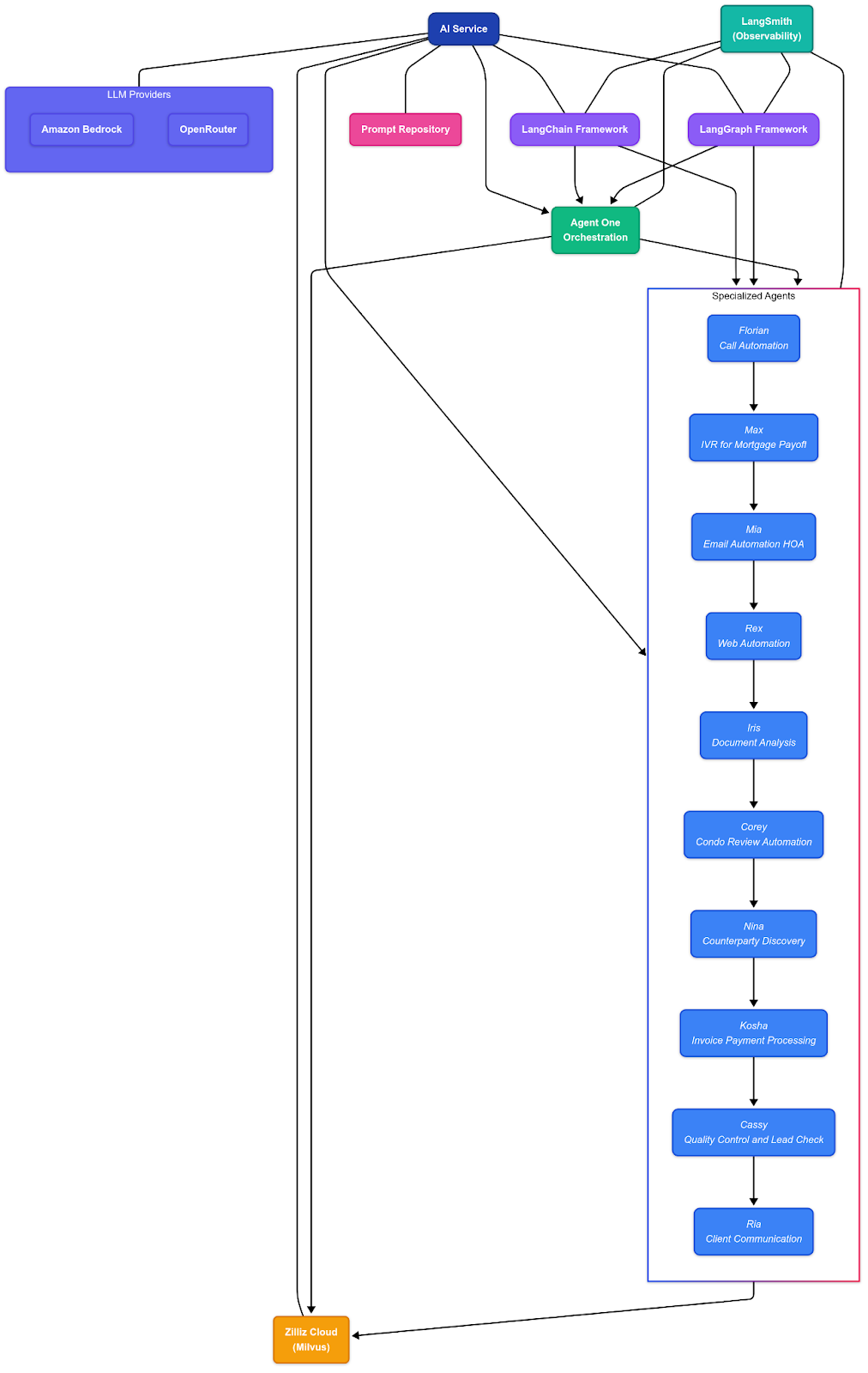

Rexera’s AI agent architecture is a modular, production-grade system built for transaction automation at scale. At its core is Agent One, a centralized orchestrator powered by LangChain and LangGraph frameworks, with observability provided by LangSmith. Zilliz Cloud plays a critical role in this ecosystem, serving as the high-performance vector database that enables retrieval-augmented reasoning across all agents.

Rexera's integration with Zilliz Cloud and other AI tools follows a streamlined workflow:

Figure: How Zilliz Cloud supports Rexera’s AI Agents system

Document Ingestion + Embedding: As new transaction documents arrive—from mortgage payoffs to HOA emails—Rexera uses OpenAI or AWS Bedrock models (via OpenRouter) to encode documents into vector embeddings. These embeddings, along with metadata like order ID, document type, and organization ID, are stored in Zilliz Cloud.

Agent Orchestration: Specialized agents are composed and orchestrated using LangChain’s composable tooling and LangGraph's dynamic workflows. LangSmith provides observability across the chain. This layered structure allows fine-tuned, explainable agent behavior across tasks like call automation, web automation, document analysis, and more.

Contextual Retrieval for AI Agents via Hybrid Search: When an agent (e.g. Max, Mia, Iris) needs context to make a decision or generate output, Agent One leverages Zilliz Cloud’s hybrid search—combining vector similarity search, full-text search, and structured metadata filtering—to retrieve the most relevant content across thousands of pages in milliseconds.

Multi-Model Verification: For critical transactions, the retrieved context is passed through multiple LLMs, such as Claude or OpenAI GPT models, to verify accuracy and ensure robust, multi-perspective understanding.

Streaming Updates to Zilliz Cloud: As documents evolve through the transaction lifecycle, updated embeddings are continuously streamed into Zilliz Cloud, ensuring retrieval results reflect the latest status.

This robust, Zilliz Cloud-powered system enables Rexera to run a fleet of AI agents that automate high-stakes concurrent transactions with speed, accuracy, and explainability.
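The multi-model verification step can be sketched as a simple agreement check. The source says answers are ranked to decide whether human intervention is needed but does not describe the rule, so the version below assumes the simplest possible one: normalize each model's answer, take the most common, and escalate when agreement falls below a threshold. The function names and the `min_agreement` parameter are hypothetical.

```python
from collections import Counter


def normalize(answer: str) -> str:
    """Lowercase and collapse whitespace so trivial differences do not count as disagreement."""
    return " ".join(answer.lower().split())


def needs_human_review(answers: list[str], min_agreement: float = 1.0) -> tuple[str, bool]:
    """Return the most common answer and whether agreement falls below the threshold."""
    if not answers:
        raise ValueError("at least one model answer is required")
    counts = Counter(normalize(a) for a in answers)
    top_answer, top_count = counts.most_common(1)[0]
    agreement = top_count / len(answers)
    return top_answer, agreement < min_agreement


# Hypothetical answers from three models to "What is the HOA transfer fee?"
print(needs_human_review(["$250", "$250", " $250 "]))   # all agree
print(needs_human_review(["$250", "$275", "$250"]))     # one model disagrees
```

Exact-match comparison only suits short, structured answers such as amounts or dates; free-text answers would need a softer similarity measure.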

Why Rexera Chose Zilliz Cloud

Rexera's evaluation process included Deep Lake, Weaviate, Chroma, self-hosted Milvus, and many other vector database options. Several decisive factors led them to select Zilliz Cloud:

Superior latency performance was the primary consideration. Zilliz Cloud delivered the exceptional response times required for both document retrieval and uploads, enabling near real-time transaction processing.

Seamless scalability proved critical for Rexera's business. Zilliz Cloud handles traffic spikes when millions of customer requests arrive simultaneously without the scaling issues they experienced with self-hosted solutions.

Hybrid search capability became a game-changer for Rexera's document processing. This feature increased their retrieval accuracy by 40% compared to traditional embedding-based search and eliminated the need to maintain separate databases for different search types.

Developer-friendly workflow enhances productivity. Engineers can quickly spin up local Milvus Docker containers for testing, then seamlessly connect to Zilliz Cloud in production environments.
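The local-to-production workflow described above usually comes down to swapping connection settings. The sketch below assumes two hypothetical environment variables, `VECTOR_DB_URI` and `VECTOR_DB_TOKEN`: when they are absent, it falls back to a local Milvus container on the default port 19530. It is an illustration of the pattern, not Rexera's configuration.

```python
import os
from typing import Mapping, Optional


def vector_db_settings(env: Optional[Mapping[str, str]] = None) -> dict:
    """Pick connection settings: local Milvus by default, managed cluster when configured."""
    env = os.environ if env is None else env
    uri = env.get("VECTOR_DB_URI")      # hypothetical variable name
    token = env.get("VECTOR_DB_TOKEN")  # hypothetical variable name
    if uri:
        if not token:
            raise ValueError("VECTOR_DB_TOKEN is required when VECTOR_DB_URI is set")
        return {"uri": uri, "token": token}
    # Default Milvus port when running the Docker container locally.
    return {"uri": "http://localhost:19530"}


settings = vector_db_settings({})
print(settings)
# With pymilvus installed, the same dict could be passed on as:
#   from pymilvus import MilvusClient
#   client = MilvusClient(**settings)
```

Keeping the choice in one function means application code is identical in both environments; only the environment variables differ.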

"When Zilliz announced hybrid search would be included in the platform, we were incredibly excited," recalls Sasidhar. "We got early access about two months before the public release, and it was transformative. We eliminated Elasticsearch entirely and now use Zilliz Cloud for both vector and full-text search."

Results and Benefits of Switching to Zilliz Cloud

Rexera’s move to Zilliz Cloud delivered immediate performance wins and long-term architectural advantages:

40% Increase in Retrieval Accuracy: Hybrid search offered by Zilliz Cloud ensures AI agents always get the right context, even across thousands of pages, boosting precision across transactions.

50% Lower Total Cost: By eliminating Elasticsearch and switching from self-hosted vector databases to Zilliz Cloud’s fully managed platform, Rexera cut infrastructure costs by roughly half.

30% Lower Latency Than Alternatives: Compared to the other vector databases Rexera evaluated and tried, Zilliz Cloud consistently delivers faster query responses, even under production loads.

Single Search Stack: By replacing Elasticsearch, Rexera reduced operational overhead and simplified their infrastructure.

Faster Development Cycles: Engineers now spin up Milvus containers locally and push directly to Zilliz Cloud in production—no bottlenecks, no surprises.

Real-Time Response at Scale: Zilliz Cloud’s sub-second latency enables real-time decision-making for clients, even during peak request volumes.

Zero Infrastructure Management: No more scrambling to scale or maintain vector infrastructure—Zilliz Cloud handles it, so Rexera can focus on innovation.

Future Plans

Rexera is just getting started. As demand from enterprise customers grows, the team plans to:

Enable Full Multi-Tenancy: While metadata-based separation works today, Rexera will adopt Zilliz Cloud’s multi-tenancy features to support strict data isolation for large clients.

Continuously Evaluate New Models: Embedding models and LLMs are continuously benchmarked against real-world workloads to ensure top-tier performance.

Expand Agent Capabilities: With scalable vector search as the foundation, Rexera is exploring new agent behaviors—from dynamic summarization to predictive compliance workflows.
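The metadata-based separation mentioned in the multi-tenancy plan can be sketched as a filter expression attached to every search. The example below builds a boolean expression in the style Milvus accepts for scalar filtering; the field names `organization_id` and `order_id` mirror the metadata the article lists but are assumptions about the actual schema, and `scoped_filter` is a hypothetical helper.

```python
import re
from typing import Optional

# Allow only characters that cannot break out of a quoted string literal.
_SAFE_ID = re.compile(r"[A-Za-z0-9_-]+")


def scoped_filter(organization_id: str, order_id: Optional[str] = None) -> str:
    """Build a boolean filter expression that restricts a search to one tenant."""
    for value in (organization_id, order_id):
        if value is not None and not _SAFE_ID.fullmatch(value):
            raise ValueError(f"unsafe identifier: {value!r}")
    clauses = [f'organization_id == "{organization_id}"']
    if order_id is not None:
        clauses.append(f'order_id == "{order_id}"')
    return " and ".join(clauses)


print(scoped_filter("org_42", "order-1001"))
```

Filtering of this kind relies on every query carrying the right expression, which is why stricter isolation mechanisms become attractive for large clients.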

Conclusion

Rexera’s AI agent ecosystem shows what’s possible when vector infrastructure is built for scale—not stitched together. By standardizing on Zilliz Cloud, they’ve unlocked real-time intelligence across the closing process, eliminated brittle infrastructure, and gained a reliable path to expand.

“Hybrid search gave us a 40% accuracy lift. We eliminated Elasticsearch. And we don’t think twice about scaling. Zilliz Cloud made all of that possible.”

—Sasidhar Janaki, Senior Software Engineer, Rexera
