Credal AI Unlocks Secure, Governable GenAI with Milvus

Flexible scaling with separated compute and storage

Enhanced query precision with hybrid search

Accelerated feature deployment with the leading open-source community

When you're engineering a solution as complex as ours, you're not just ticking boxes—you're looking for that sweet spot where all your must-haves intersect. Think of it as an eight-circle Venn diagram; while many databases met one or two of our criteria, Milvus was the only one sitting right at the intersection of all eight. It checked every single box for us—something no other solution managed to do.

Jack Fischer

About Credal AI

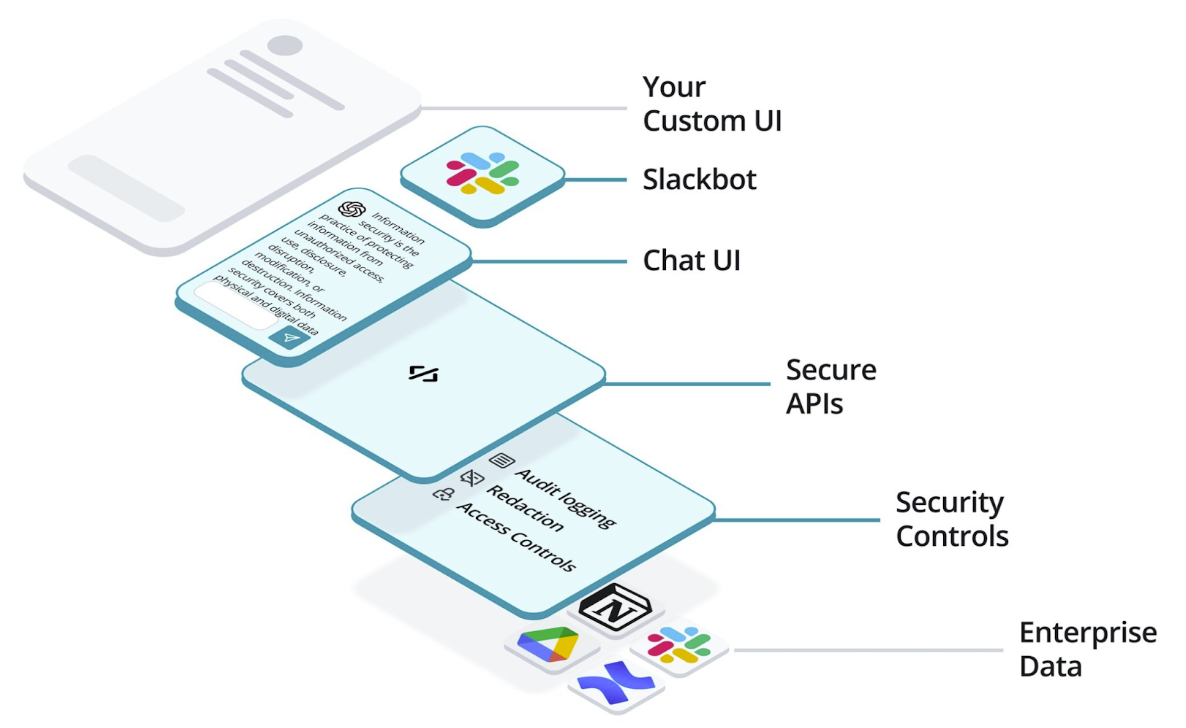

Credal AI aims to make GenAI safe and accessible for enterprises. They provide robust data integration and governance features, pulling data from various platforms such as Microsoft Office, Google Workspace, and Slack. They offer an "Okta for AI," where access and permissions are rigorously controlled, aiming to give developers and end-users a streamlined and secure interface. Credal AI thus serves as an end-to-end solution for secure, enterprise-grade GenAI deployment.

Navigating Scale and Complexity: The Challenges

Credal AI faced several challenges as it began product development. A critical feature was semantic search, which proved difficult to implement at scale using basic vector search plugins. These challenges were exacerbated when semantic search was embedded into custom workflows that required high database performance. Traditional search mechanisms fell short for constructing these GenAI-driven workflows, which needed to process large datasets in real time based on intricate, user-defined criteria.

Moreover, their diverse hosting environments, including cloud-based and on-prem setups, necessitated a vector database that could be self-hosted and supported by an active open-source community. They needed a fast, scalable, and versatile database to handle complex data pipelines and varied hosting conditions.

Choosing Milvus: A Developer-Centric Vector Database That Checks All the Boxes

After a comprehensive evaluation, Credal AI concluded that Milvus running on Amazon EKS was the best fit for their vector database needs. What stood out immediately was the active open-source community around the project. This wasn't a 'nice-to-have' but a critical factor for fast issue resolution and ongoing feature development. Additionally, the high GitHub star count was not a vanity metric but a tangible sign of an engaged and vibrant developer community, adding a layer of trust in the solution's longevity.

Another critical consideration was Milvus' official Helm Chart for Kubernetes. While creating a custom Helm Chart wasn't a deal-breaker, an officially supported chart was a testament to Milvus' commitment to developer success. It suggested that the Milvus team wasn't just after short-term revenue but was genuinely invested in solving real-world user challenges. This support was invaluable for a startup like Credal AI, especially since it allowed them to streamline their deployment process, saving time and engineering resources.

The features of Milvus also caught their attention, particularly the availability of hybrid search. The ability to perform vector searches while filtering on other metadata was a critical need. Many solutions offered fast vector search but were limited in their ability to combine it with structured data. Milvus' hybrid search capability was the missing puzzle piece that fulfilled their technical requirements and solved actual business problems.
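To make the idea of vector search combined with metadata filtering concrete, here is a minimal brute-force sketch in plain Python. It does not use Milvus or its API, and it is not Credal AI's implementation; the `Doc` fields (`source`, `allowed_groups`), the function names, and the sample data are all hypothetical, chosen only to illustrate restricting candidates by structured attributes before ranking them by embedding similarity.

```python
from dataclasses import dataclass
from math import sqrt


@dataclass
class Doc:
    doc_id: str
    embedding: list[float]
    source: str               # hypothetical metadata field, e.g. "slack"
    allowed_groups: set[str]  # hypothetical permission metadata


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = sqrt(sum(x * x for x in a))
    norm_b = sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def filtered_search(docs, query, source, group, top_k=2):
    """Keep only docs matching the metadata filter, then rank by similarity."""
    candidates = [
        d for d in docs
        if d.source == source and group in d.allowed_groups
    ]
    ranked = sorted(
        candidates,
        key=lambda d: cosine(query, d.embedding),
        reverse=True,
    )
    return [d.doc_id for d in ranked[:top_k]]


# Illustrative data only.
docs = [
    Doc("a", [1.0, 0.0], "slack", {"eng"}),
    Doc("b", [0.9, 0.1], "slack", {"sales"}),
    Doc("c", [0.0, 1.0], "slack", {"eng"}),
    Doc("d", [1.0, 0.05], "gdrive", {"eng"}),
]

result = filtered_search(docs, [1.0, 0.0], source="slack", group="eng")
print(result)
```

Documents "b" and "d" are close to the query vector but are excluded by the filter, which is the behavior that matters when search results must respect source and permission boundaries. A production vector database would apply the filter inside an indexed search rather than scanning every document as this sketch does.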

Last but not least, the architecture of Milvus, which separates storage and compute, provided the scalability and flexibility Credal AI was seeking. This design choice was not just some architectural notion; it was fundamental to their confidence in scaling the product in the future. It allowed them to evolve their access patterns as they fine-tuned their application. When it came to the 'must-haves' for their vector database—the performance, the features, the community, and the architecture—Milvus checked all the boxes.

Result: Real-world Scalability and Flexibility with Milvus

Implementing Milvus on Amazon EKS has been a game-changer for Credal AI, providing a robust backbone for their complex vector search requirements. Milvus' technical capabilities quickly assuaged their initial uncertainties about how the database would perform. In a landscape where startups often deal with evolving needs, Milvus proved to be an immediate solution and a long-term asset.

The decision to choose Milvus was validated by its seamless integration into Credal AI's existing systems, fulfilling both technical prerequisites and broader business objectives. The platform's capacity for high scalability, thanks to its decoupled storage and compute architecture, gave Credal AI the confidence to anticipate and adapt to emerging customer needs. The company can now focus on what it does best, iterating on its core product and driving user engagement, assured that its backend can handle whatever is thrown at it.

Forging Ahead: Future Plans with Milvus

Credal AI is considering a future collaboration with Zilliz, the maintainer of Milvus, to use Zilliz Cloud for its cloud-based clients. This aligns well with the team's ongoing mission to bring GenAI functionality into real-world enterprise applications, a complex task requiring robust security measures and comprehensive data governance. Using Zilliz Cloud, a fully managed version of Milvus, on AWS fits neatly into this broader strategy, promising to streamline operations and enhance service offerings for their cloud clients.

Meanwhile, Milvus remains a central piece in Credal AI's strategy. As they push full throttle on all fronts to make GenAI operational for enterprises, the scalability and flexibility offered by Milvus are more valuable than ever. They view their relationship with Milvus not as a one-off solution but as a long-term partnership geared toward meeting evolving challenges and scaling new heights.