OpenAI RAG vs. Your Customized RAG: Which One Is Better?

This post was originally released on The New Stack and is reposted here with permission.

The recent introduction of the OpenAI Assistants retrieval feature has sparked significant discussion in the AI community. This built-in feature brings Retrieval Augmented Generation (RAG) capabilities to question answering, allowing GPT language models to draw on additional knowledge to generate more accurate and relevant answers. In a previous post, we explored the constraints of OpenAI's built-in RAG retrieval and discussed situations where building a customized retrieval solution might be beneficial.

In this post, we'll dive deeper into this topic by comparing the performance of OpenAI's RAG with a customized RAG built on a vector database like Milvus. We'll assess each approach's strengths, weaknesses, and nuances, ultimately answering the crucial question: which is better?

What is Retrieval Augmented Generation (RAG)?

Before we evaluate OpenAI's built-in RAG vs. Customized RAG systems, let's start by understanding what RAG is.

RAG, or Retrieval Augmented Generation, is an AI framework that retrieves facts from an external knowledge base to ensure that large language models (LLMs) are grounded in the most accurate and up-to-date information. A typical RAG system comprises an LLM, a vector database like Milvus, and some prompts as code.
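To make the retrieve-then-generate flow concrete, here is a minimal, dependency-free sketch. It is only an illustration under loud assumptions: the bag-of-words `embed` function stands in for a real embedding model, the in-memory list stands in for a vector database such as Milvus, the three documents are made up, and the final call to an LLM is left out, so the sketch stops at building the prompt. All function names are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy bag-of-words 'embedding' (assumption: a stand-in for a real model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question: str, documents: list[str], top_k: int = 2) -> list[str]:
    """Return the top_k documents most similar to the question."""
    query_vec = embed(question)
    ranked = sorted(documents, key=lambda d: cosine(query_vec, embed(d)), reverse=True)
    return ranked[:top_k]


def build_prompt(question: str, contexts: list[str]) -> str:
    """Ground the LLM by placing the retrieved snippets in the prompt."""
    context_block = "\n".join(f"- {c}" for c in contexts)
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context_block}\n"
        f"Question: {question}\nAnswer:"
    )


# Made-up knowledge base for illustration only.
documents = [
    "A Roth IRA is funded with after-tax contributions.",
    "Milvus is an open-source vector database.",
    "Municipal bond interest is often exempt from federal income tax.",
]
question = "What is a Roth IRA funded with?"
contexts = retrieve(question, documents, top_k=1)
prompt = build_prompt(question, contexts)
print(prompt)  # this prompt would then be sent to the LLM
```

In a real system, the embedding function and the similarity search would be handled by an embedding model and the vector database, while the prompt template stays in application code.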

RAG evaluation tool: Ragas

Evaluating RAG performance is a complex task. We need a fair and objective evaluation tool that assesses multiple metrics on a suitable dataset and produces reproducible results.

Ragas is an open-source framework dedicated to evaluating the performance of RAG systems. It provides a range of scoring metrics that measure different aspects of a RAG system, offering a comprehensive, multi-angle evaluation of the quality of RAG applications.

This post will use faithfulness, answer relevancy, context precision, answer similarity, and answer correctness as the primary metrics to assess OpenAI RAG and our customized RAG systems.

Faithfulness: Assessing the factual accuracy of the generated answer in the given context.

Answer Relevancy: Evaluating the relevance of the generated answer to the question.

Context Precision: Measuring the signal-to-noise ratio of the retrieved context.

Answer Correctness: Assessing the accuracy of the generated answer compared to the ground truth.

Answer Similarity: Evaluating the semantic resemblance between the generated answer and the ground truth.

See the Ragas documentation for more detailed information about Ragas and all the metrics.

RAG evaluation dataset: FiQA

We've selected the Financial Opinion Mining and Question Answering (FiQA) dataset for our evaluation. This dataset has several characteristics that make it ideal for our assessment, including:

It contains highly specialized financial knowledge unlikely to be present in the training data of GPT models.

It was initially designed to assess Information Retrieval capabilities and thus provides well-annotated knowledge snippets that serve as standard answers (ground truth).

Ragas and its community have widely recognized FiQA as a standard introductory test dataset.

Setting up the RAG systems

Now, let's build the two RAG systems for comparison: an OpenAI RAG and a Customized RAG built on the Milvus vector database.

Setting up OpenAI RAG

We'll build a RAG system using OpenAI Assistants by following OpenAI's official documentation: creating the assistant, uploading the external knowledge, retrieving contextual information, and generating answers. All other settings remain at their defaults.

Setting up a customized RAG using Milvus

We must manually set up the custom RAG system using the Milvus vector database to store the external knowledge. We'll use `BAAI/bge-base-en` from HuggingFace as the embedding model, with various LangChain components for document import and agent construction.

For detailed information, see this step-by-step guide.

Configuration comparison

We summarize the configuration details of the two RAG systems in the table below. For more information, see our implementation code.

| | OpenAI RAG | Customized RAG |
|---|---|---|
| LLM | gpt-4-1106-preview | gpt-4-1106-preview |
| Vector DB | Not disclosed | Milvus |
| Embedding model | Not disclosed | BAAI/bge-base-en |
| Chunk size | Not disclosed | 1000 |
| Chunk overlap | Not disclosed | 40 |
| topk | Not disclosed | 5 |
| Use Agent | Yes | Yes |

Table: Configuration Comparison of OpenAI RAG and Customized RAG

As the table above shows, both RAG systems use `gpt-4-1106-preview` as the LLM. The customized RAG uses Milvus as the vector database, `BAAI/bge-base-en` as the embedding model, a chunk size of 1000 with an overlap of 40, and retrieves the top 5 chunks per query. OpenAI RAG doesn't disclose its built-in vector database or any of these configuration parameters.
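To illustrate what the chunk size and chunk overlap settings mean, here is a minimal sliding-window splitter. It is a sketch, not the splitter used in our pipeline (that one comes from LangChain): it assumes sizes are measured in characters and splits at fixed positions without looking for sentence or paragraph boundaries. The function name `split_with_overlap` is hypothetical.

```python
def split_with_overlap(text: str, chunk_size: int = 1000, chunk_overlap: int = 40) -> list[str]:
    """Split text into fixed-size chunks where neighbors share chunk_overlap characters.

    Assumption: sizes are counted in characters, and boundaries are not
    aligned to sentences or paragraphs as a production splitter would do.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be in [0, chunk_size)")

    step = chunk_size - chunk_overlap  # how far the window advances each time
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the current chunk already reaches the end of the text
    return chunks


# A 2,500-character dummy document yields three chunks.
sample = "".join(str(i % 10) for i in range(2500))
chunks = split_with_overlap(sample, chunk_size=1000, chunk_overlap=40)
print([len(c) for c in chunks])
```

The overlap means a sentence cut at a chunk boundary still appears partly in both neighboring chunks, which reduces the chance that retrieval returns a fragment with its context missing.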

Evaluation results and analysis

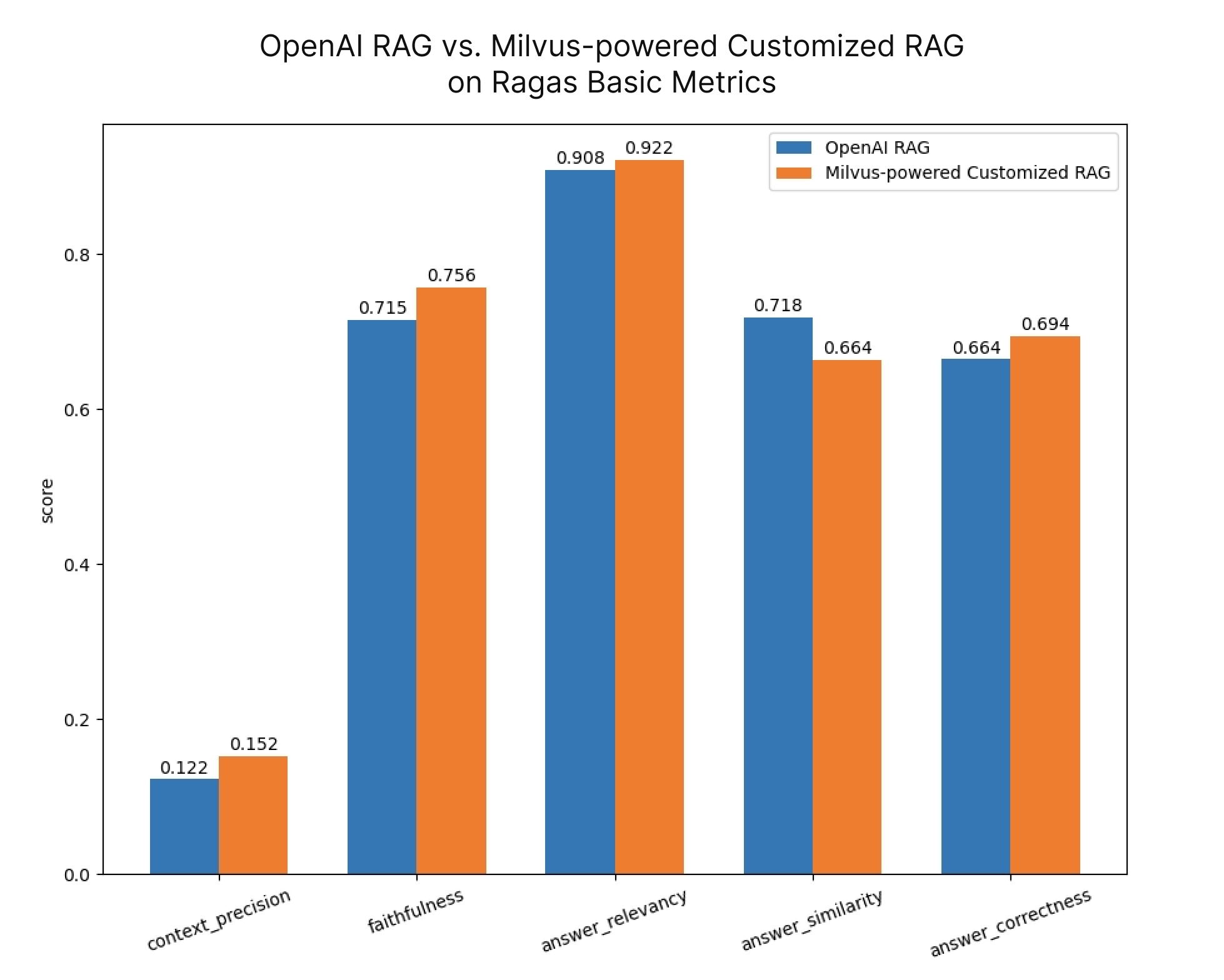

Using Ragas, we scored both RAG systems on multiple metrics, including context precision, faithfulness, answer relevancy, similarity, and correctness. The chart below shows the experimental scores we got from Ragas.

While the OpenAI RAG system slightly outperformed the Milvus-powered customized RAG system in answer similarity, it fell behind in other crucial metrics, including context precision, faithfulness, answer relevancy, and answer correctness.

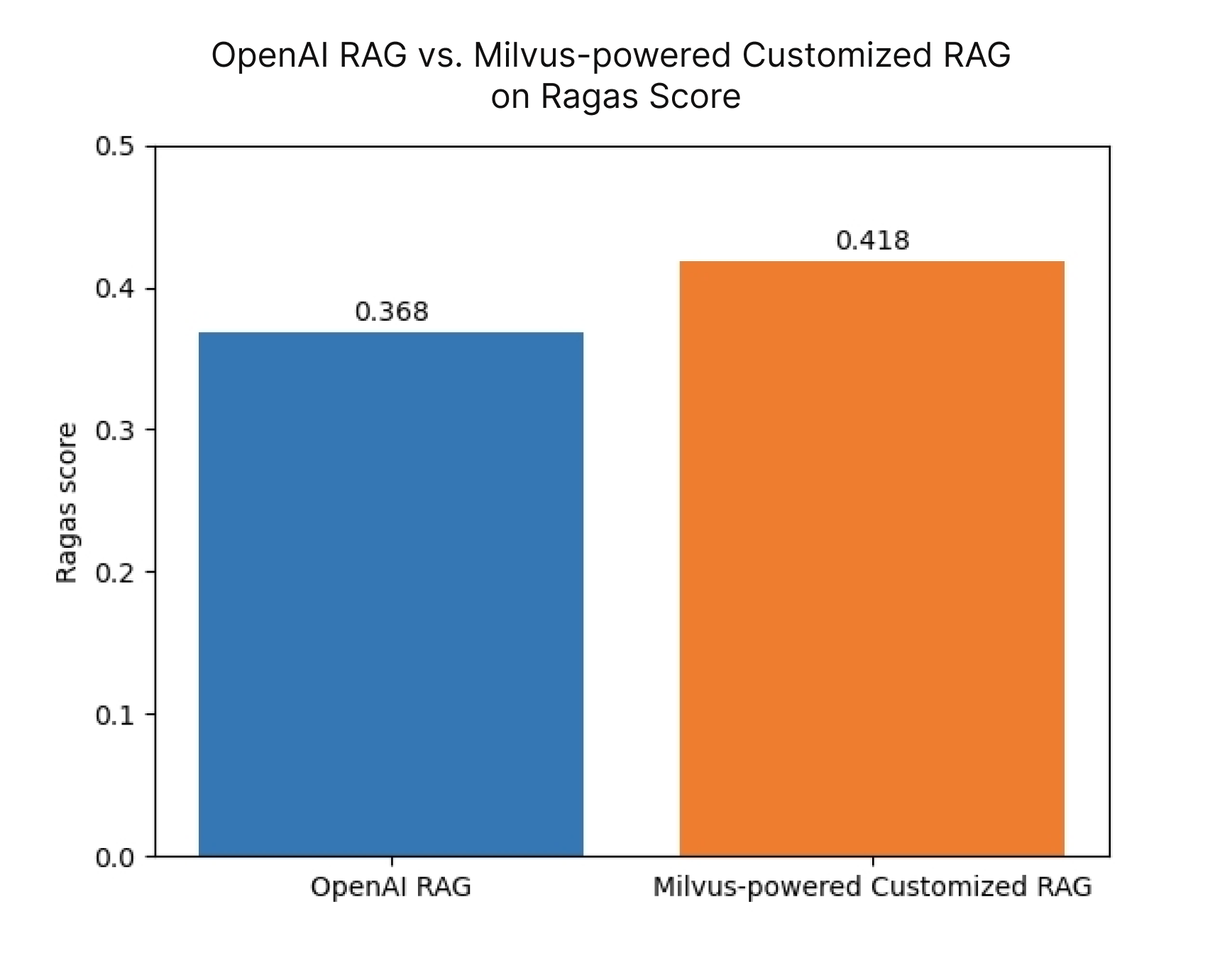

Ragas also enables us to compare two RAG systems using Ragas Scores, an average value generated by calculating the harmonic mean of various metrics. The harmonic mean is used to penalize low-scoring items. The higher the Ragas scores, the better the overall performance of an RAG system. The chart below shows the Ragas scores of two RAG systems.

As is shown in the chart, the Milvus-powered customized RAG system has higher Ragas Scores than the OpenAI RAG system.
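The effect of the harmonic mean is easy to see in a small worked example. The numbers below are hypothetical and are not the scores from our experiment, and the choice of three metrics is an assumption made only for illustration. Both systems have the same arithmetic mean, but the one with a single weak metric gets a clearly lower harmonic mean.

```python
from statistics import harmonic_mean, mean

# Hypothetical metric scores for illustration only; NOT the results reported in this post.
balanced = {"faithfulness": 0.80, "answer_relevancy": 0.80, "context_precision": 0.80}
uneven = {"faithfulness": 0.95, "answer_relevancy": 0.95, "context_precision": 0.50}


def combined_score(scores: dict[str, float]) -> float:
    """Harmonic mean of the metric scores, which pulls the result toward the lowest one."""
    return harmonic_mean(list(scores.values()))


for name, scores in (("balanced", balanced), ("uneven", uneven)):
    arithmetic = mean(scores.values())
    harmonic = combined_score(scores)
    print(f"{name}: arithmetic mean = {arithmetic:.3f}, harmonic mean = {harmonic:.3f}")
# Both arithmetic means are 0.800; the harmonic means are about 0.800 and 0.731.
```

A system therefore cannot compensate for poor retrieval with strong scores elsewhere, which is why a harmonic mean is a reasonable way to summarize overall RAG quality.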

In conclusion, the Milvus-powered customized RAG system has outperformed the OpenAI RAG system regarding Ragas Scores and other crucial metrics, including context precision, faithfulness, answer relevancy and correctness.

Why does the customized RAG outperform OpenAI's built-in RAG?

Through many individual comparisons, we found that the customized RAG pipeline based on a vector database performs better than the OpenAI Assistants-based RAG system. Why? Here are some of the most important reasons.

OpenAI Assistants rely on internal pre-training knowledge rather than external knowledge.

Due to their internal agent setup, OpenAI Assistants appear to rely more on pre-training knowledge than on the uploaded knowledge. For example, when asked about personal finance classes in high school, the OpenAI RAG system gave an answer that did not draw on the ground truth contained in the uploaded documents.

| Question | Are personal finance/money management classes taught in high school, anywhere? |

|---|---|

| OpenAI RAG system’s Answer | Personal finance and money management classes are indeed taught in some high schools, although the availability and requirement of such classes can vary greatly depending on the country and even within regions of a country. In the United States, for example, the Council for Economic Education (CEE) conducts surveys and reports on financial education in K-12 schools. Their findings suggest that as of my last update, a growing number of states have integrated personal finance into their educational standards, and some have mandated that students take a personal finance course to graduate. Although I don't have access to a live database or the most recent studies, you can often find information about financial education in schools by: 1. Checking government education websites. 2. Looking at reports from financial education nonprofits and councils. 3. Contacting local school boards or departments of education. If you are interested in specific locations or have a particular focus in mind, you could specify that so I could provide more direct information, if available. |

| Customized RAG system’s Answer | Personal finance and money management classes are indeed taught in some high schools, but the availability and requirements of such classes can vary greatly. For example, in Houston, Texas, a private high school offered an optional half-semester class in personal finance that did not provide credits toward graduation. Staples High School in Westport, Connecticut, introduced a personal financial management class focused on using mathematics to develop financial literacy skills, covering topics such as earnings, banking, credit cards, loans, taxes, insurance, investing, budgeting, and buying personal property. Public schools in Ann Arbor, Michigan, offered a 'civics' class that included basic money management topics. Additionally, in the UK, the 'Every Child Matters' framework includes 'achieving economic well-being' as an important element, and some schools use a School Rewards System to teach kids about finance and the economy. While these examples show that such classes exist, they are not universally required or standardized, and the depth and focus of the curriculum can vary. |

| Ground truth in the dataset | In Houston, Texas USA where I went to a private high school they had a half-semester class in personal finance, but it was optional and didn't give you any credits towards graduation. You are right though, it should be a standard class. After all, who doesn't need that information in their adult lives, and not everyone goes to college." ... We had a "civics" class when I was a freshman in high school. This was in the Ann Arbor, MI public schools. It covered the very basics (how to balance your checkbook, what are stocks, how do income taxes work, what is interest, etc.) of money management along with an overview of politics and the legal system. It was a really light class, though, and didn't go deeply into personal finance and money management. I agree that such a class would be very valuable, as would cooking, nutrition, and basic home and car repair. |

While the OpenAI RAG system's response is not wrong, it lacks specificity, and its statement that it cannot access the latest studies suggests that it did not fully use the knowledge in the uploaded documents. In contrast, the customized RAG system gives a more accurate answer by incorporating specific details from the ground truth, such as the Houston and Ann Arbor examples. This comparison shows that the customized RAG makes better use of external data.

Customized RAG is better in document segmentation and data retrieval than the OpenAI RAG

OpenAI's knowledge segmentation and recall strategy might need optimization. Sometimes the retrieved snippet is inadequate; for a question about IRA investments, for example, it returned only 'PROS: CONS'. In contrast, the customized RAG pipeline recalls relevant snippets, which supports a more comprehensive response.

| Question | Pros/cons of being more involved with IRA investments |

|---|---|

| Snippet retrieved by OpenAI RAG | ['PROS: CONS'] |

| Snippet retrieved by Customized RAG | ['in the tax rate, there's also a significant difference in the amount being taxed. Thus, withdrawing from IRA is generally not a good idea, and you will never be better off with withdrawing from IRA than with cashing out taxable investments (from tax perspective). That's by design."' "Sounds like a bad idea. The IRA is built on the power of compounding. Removing contributions will hurt your retirement savings, and you will never be able to make that up. Instead, consider tax-free investments. State bonds, Federal bonds, municipal bonds, etc. For example, I invest in California muni bonds fund which gives me ~3-4% annual dividend income - completely tax free. In addition - there's capital appreciation of your fund holdings. There are risks, of course, for example rate changes will affect yields and capital appreciation, so consult with someone knowledgeable in this area (or ask another question here, for the basics). This will give you the same result as you're expecting from your Roth IRA trick, without damaging your retirement savings potential." "In addition to George Marian's excellent advice, I'll add that if you're hitting the limits on IRA contributions, then you'd go back to your 401(k). So, put enough into your 401(k) to get the match, then max out IRA contributions to give you access to more and better investment options, then go back to your 401(k) until you top that out as well, assuming you have that much available to invest for retirement." "While tax deferral is a nice feature, the 401k is not the Holy Grail. I've seen plenty of 401k's where the investment options are horrible: sub-par performance, high fees, limited options. That's great that you've maxed out your Roth IRA. I commend you for that. As long as the investment options in your 401k are good, then I would stick with it." "retirement plans which offer them good cheap index funds. These people probably don't need to worry quite as much. 
Finally, having two accounts is more complicated. Please contact someone who knows more about taxes than I am to figure out what limitations apply for contributing to both IRAs and 401(k)s in the same year."] |

OpenAI Assistants retrieved a snippet that carries no useful information. In contrast, the customized RAG retrieved several relevant snippets, providing the context needed for a more comprehensive response that addresses the nuances of the question.

Other reasons

OpenAI Assistants does not allow users to adjust parameters in the RAG pipeline for customization or optimization. However, with customized RAG, users can have complete flexibility for adjustment and optimization.

OpenAI Assistants has file storage limitations, whereas the Milvus-powered customized RAG can scale out quickly without an upper limit, making it a better option for users who require greater storage capacity.

Conclusion

In conclusion, our comparison using the Ragas evaluation tool highlights the strengths and weaknesses of OpenAI RAG and a customized RAG based on a vector database like Milvus. While OpenAI RAG scored slightly higher on answer similarity, the customized RAG did better on context precision, faithfulness, answer relevancy, answer correctness, and the overall Ragas Score. Developers seeking powerful and effective RAG applications will find the flexibility and capabilities of a vector database-based RAG solution preferable for achieving better results.

