
Challenges in Structured Document Data Extraction at Scale with LLMs

Webinar


About this Webinar

All businesses have to deal with unstructured documents at some level. Some have to deal with them at scale. While an LLM-powered approach to this problem is head and shoulders above traditional machine learning-based approaches, it is not without its challenges. The top concerns are accuracy and cost, which can really begin to hurt at scale.

In this webinar, we will look at how Unstract, an open source platform purpose-built for structured document data extraction, solves these challenges. Dealing with 5M+ pages of structured content extraction per month, Unstract uses various techniques to attain accuracy and cost efficiency, chief among them the use of vector databases.

Topics Covered

- Introduction to Unstructured Data Processing

- Processing Document Data

- Extraction Difficulties

- Utilizing Milvus

- Demo

I'm very pleased to introduce today's session. Like I mentioned, it's about challenges in structured document data extraction. That is very specific and important because, despite us being in the world of unstructured data, a lot of it actually is structured and pretty complex. When you look at PDFs and a lot of documents that are forms, or legal, or have to be in a specific format, if you've ever tried to extract that, it is not easy. So we're very lucky to have our guest speaker here.

He is the co-founder and CEO of Unstract, an open source startup building a cool LLM-powered platform that extracts data from unstructured documents, and again, really structured documents, helping automate critical business processes, because this is really complex. Before he co-founded Unstract, he was the VP for platform engineering at Freshworks. He's also an electronics hobbyist. Oh, I should have showed you some new circuits I got today. They're sitting on my desk here.

And you no longer voluntarily correct spelling and grammar mistakes committed by others. Wow, that's a tough one. I applaud you for that. Welcome, let's get started. Share your slides and I will hide in the background.

Thank you, Tim, really appreciate it. Let me share my screen and we'll go from there. So, a quick background on what we'll be looking at today. I have a little agenda slide that we can quickly go through. When it comes to PDF documents, whether they are structured or unstructured doesn't really matter. There are businesses out there that deal with these kinds of unstructured documents almost on a daily basis. There is current technology available to structure those PDF documents, or even other formats. It doesn't really matter.

Could be a Word document, could be a PowerPoint, could be an Excel sheet, it doesn't really matter. But there are limitations with those current systems. The largest limitation out there is that you have to manually annotate the document before you can start extraction, which is a huge problem. Think of, for example, a business that gives out loans. Let's say that to figure out your eligibility, you need to submit a bank statement, and that financial organization needs to accept bank statements. What happens if they get 300 different applications, and those 300 applications submit bank statements from 300 different banks? Is it okay to go and manually annotate all those 300 bank statements? It's just not possible. So that's the limitation of the current systems. But here's the really cool thing: large language models can help overcome that need for manual annotation. Unstract is an open source platform that helps with that, and we will see how it works. But it would also be unfair to say that this is a panacea. Large language models do have their limitations, and we'll see what those limitations are. I will introduce the Unstract platform.

We'll spend maybe 10 minutes quickly going through what it is and how it works. And then I want to come to the meat of the talk, where we'll spend most of our time today. That's where the marriage between large language models and vector databases comes in. In this particular case, I'll be using Milvus as a managed instance on Zilliz Cloud, which is an awesome service. In fact, I signed up and I got a hundred dollars of credit, which is awesome. I'm hoping Tim will give me more, but I do have a hundred dollars to begin with, which makes me feel pretty rich. But we will get into the weeds here, because I want to show you what happens when you are trying to extract data from unstructured documents without a vector database.

Then we will introduce a vector database and we'll see how that goes. Then we'll go into a little bit more detail and differentiate what happens when you use a simple retrieval strategy. And then we'll look at a slightly more sophisticated strategy, which we call sub-question retrieval. If you don't fully understand all of this, I will take time to walk you through how it works. For some of you, these may be things that you've already heard, but for the rest of the audience, I hope you'll be patient as I explain some of this stuff. More importantly, I want to talk from an angle of practicality. We deal with around 5 million pages a month at this point in time as a platform. That's what we help extract. So we have a lot of data, we've seen a lot of corner cases, and we've seen large language models deployed at scale in production. So I would love to give you some of those insights as to what works well, what doesn't work well, and things like that.

This is a very interesting application, a very practical application of large language models that you can monetize today. This is about me. I don't want to spend time here. We have a very packed agenda today, so we will get going, but you know where to find me in case you want to chat even after this webinar. So, there are a lot of challenges in dealing with PDFs in general. You have tables, and sometimes you have PDF forms. These forms can have checkboxes and radio buttons, and they can have multi-column layouts, and then you can have scanned documents as well.

Sometimes scanned documents are properly scanned, meaning someone used a flatbed scanner: they put a document in there and they scanned it. Those scans are usually not a problem. You can deal with them pretty easily. But of course, now that people have smartphones, they just whip them out and click a photo of a receipt or what have you. They could pretty much do anything with their smartphone: click a photo of a form, a document, a receipt, or anything. The problem is that there could be skew, lighting problems, all sorts of things that we still have to deal with. And the worst of the worst are handwritten scanned forms, which are also super difficult to deal with.

These are some of the things that we deal with on a day-to-day basis. We need to pull structured information from all of these types of documents. Now, in the real world, there is variation within the same document type. A bank statement is a document type, a resume is a document type, and an insurance policy is a document type. Although we say a type, you will find a wide-ranging variety within each of these document types, and that's where the challenge comes in. That's where large language models come in. Large language models are way better than machine learning models when it comes to this.

And they can do a really, really good job, like how a human would. For example, if I gave you two bank statements from two different banks, you are not thinking, "Oh, at this location, x 50 and y 100, I will find the customer name, then I'll find the customer address at this other location." We're not thinking like that as humans. We just think in terms of language. When we read a bank statement, we just read a bank statement. We know what the customer name is, we know what her address is, we know where the transaction table starts. We just know it. Large language models pretty much follow this. They are very similar in the way they approach extracting structured data out of unstructured documents, which is awesome. Very human-like. The other problem is that when people manually extract data, it's super slow, super expensive, and very error-prone as well. Now, we have a very interesting opportunity here as people who work with large language models. Let's say this little oval here represents all the problems that are solved by current incumbent technology. There is a huge, huge unsolved market out there, which we can solve using large language models very, very easily. But there's also more that could be solved, which is not solved currently by large language models.

So this is what I was talking about: what are the limitations of large language models? We have customers ask us, "We have bar charts and graphs and pie charts and all sorts of stuff in financial statements. Can you also turn that into structured data?" The answer is that the state of the art is not there yet. Even if you take a very simple pie chart, a lot of times if you take a simple screenshot of it, paste it into, let's say, even ChatGPT, and ask it to turn that into structured data, you will find that it makes a lot of mistakes. And if you do this enough, you'll know that the success rate is just not there for you to put it into production. But still, what we can solve using large language models is a humongous market.

And this market is currently being served a hundred percent manually, and it's slow, expensive, and error-prone. So that's something that we can solve using LLMs. Now take a step back and think about how people are making money today with large language models. Let's think about that for just a moment. People talk about the insane valuations that startups are getting, and people talk about boiling the ocean with large language models. But if you really think about where large language models are being deployed practically and solving real problems, and where the companies behind those solutions are making money, those cases are few and far between. We'll walk through some of them because, as practitioners, this is still very interesting information, and I'm sure there are leaders here as well. I think that the number one use case for large language models that is monetizable today is enterprise search, where you use large language models and vector databases to get data in and then do search. There's security involved, all sorts of stuff, really. But this is a very interesting use case. The number two use case is customer service. Typically, there's a knowledge base, people are trained on a company's product information, and then other humans either call them or chat with them through some kind of a chat box, and those folks answer. This is super ripe for disruption by large language models.

And we see that this is already happening. Chatbots, after large language models, just became so good that it is hard to tell whether we are talking to a human or a chatbot. I think the number three largest horizontal use case is in software engineering, especially with code copilots. You have GitHub Copilot, Codeium, Tabnine, a bunch of them. They're doing a really, really good job. I use them on a daily basis. So do my colleagues and a lot of my friends in many different organizations who are into software development. This is real impact. A lot of boilerplate code, which honestly nobody really likes to write, gets written for them when they use this kind of tool. And we believe that the number four horizontally monetizable large language model use case is structuring unstructured data from these structured and unstructured documents.

Now, of course, there are other interesting companies that are doing the same for sales and marketing and things like that. But this is where I think, with LLMs, organizations have solutions that make money today and solve real problems. Talking about agents, I think they're out in the future because of compounding error rates. If you take the output from one LLM and feed it into another use case, and then feed that into another, errors tend to compound. This is a big problem for agents. People are trying to solve this, and I'm sure that they will solve it, but right now I don't see any monetization happening around that. Now, here's the thing: there's a difference between copilots and Unstract. There's nothing stopping you from uploading a document to ChatGPT and asking it to structure it. But there are a couple of problems there.

What is the structure it will come up with? Every time you ask, it'll give you a different structure. And then you have a human in the loop, meaning that the information is now on a screen that a human is viewing, but the data is probably required somewhere else, in a system. For example, if a bank is getting a bunch of documents from you so that they can give you a loan, they need that information in their backend system so that they can process your application. Of course, you can have a human copy and paste some data from a copilot, but first they have to verify whether the data is correct and that there is no hallucination. Then, while copying and pasting, they can copy the wrong field over and make a mistake there. The other problem is that they may have to transform some data, such as changing the format of a date or a currency, and mistakes can happen there too. So it's definitely a step up from doing it a hundred percent manually, but not the best possible use of this technology. With Unstract, what you are looking at is what we call machine-to-machine automation, where we take unstructured documents, structure them, and push them to where the data is actually required.

You can either use ETL pipelines or you can use APIs to get the structured data into the system where it's required. Now, of course, there's the problem of hallucination and all that, but we'll talk about how we deal with that. We feel this is more complete automation. Now, there are three different generations of the way you structure data from various unstructured documents. We have OCR, which is pure digitization. There is no intelligence there.

Then you have machine learning-based models, which use computer vision and a host of other techniques to read documents: NLP-based, NER, those kinds of things. They're definitely better than OCR, but the problem is they're pretty brittle. And the current systems that operate at scale need annotations for this kind of system to really work well. But like I said, the way humans handle documents is just using knowledge, just using language. We just read a document and we figure out what is what. And you can emulate the same using large language models. That is what systems like Unstract leverage: the ability of large language models to reason, and the ability they have to follow instructions, which are our prompts, using which we can structure unstructured documents.

Now, Unstract itself is an open source platform, and you can bring your own LLM stack. You choose your large language model, your vector database, your embedding model, and your text extraction system. It's really up to you, because we want you to be in control. For example, there could be some use cases where you want the best of the best model, and some use cases where there's a human in the loop anyway and you just want to speed things up. So you can use a cheaper model, a less capable model.

It really depends on the viability of the use case and what you have to choose. Of course, we've been supporting Milvus from very, very early on, I think, and Zilliz Cloud as well. The open source offering works, the cloud offering works, and it is pretty well tested with Milvus and Zilliz. Now, think of Unstract as two separate, distinct phases. Phase one is where you provide a representative sample to what we call Prompt Studio. So you go to Prompt Studio and you give it examples.

Let's take the bank statement example. Give it statements from different banks, 15 or 20 different statements. Then you do the prompt engineering. Prompt Studio will help you write generic prompts to extract key fields from those documents: the name of the customer, the address of the customer, the account number, the transaction information, all of that stuff. So you do the generic prompt engineering. That's phase one. Once you are happy with the prompt engineering, you come to the deployment phase, where you launch it as an ETL pipeline or an API endpoint. Let's say you launch it as an API endpoint: you send a bank statement and you get structured JSON data back. That's the general idea. You have to remember that these are two distinct phases.

We spoke about hallucinations, and we were a little bit worried about them. This is where LLM Challenge comes in. Unstract uses two large language models, and both of them have to come to a consensus. Otherwise, we will set the value of the particular field being extracted to null, because we believe that a null value is better than a wrong value. That's a philosophy, and we stick to that.
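The consensus rule described here can be sketched in a few lines. This is a minimal illustration, not Unstract's implementation: the function names `normalize` and `challenge` are hypothetical, and agreement is assumed to mean an exact match after whitespace and case normalization, whereas the talk later mentions that the platform scores each extraction.

```python
from typing import Optional


def normalize(value: Optional[str]) -> Optional[str]:
    """Collapse whitespace and ignore case so trivial differences do not block agreement."""
    if value is None:
        return None
    return " ".join(value.split()).casefold()


def challenge(extractor_value: Optional[str],
              challenger_value: Optional[str]) -> Optional[str]:
    """Return the extracted value only if both models agree; otherwise return None.

    Hypothetical helper: a null result is preferred over a possibly wrong one.
    """
    a = normalize(extractor_value)
    b = normalize(challenger_value)
    if a is None or b is None or a != b:
        return None
    return extractor_value.strip()


if __name__ == "__main__":
    print(challenge("One Apple Park Way", " one apple  park way "))
    print(challenge("Cupertino", "San Jose"))
```

The design choice is the one stated in the talk: when the two models disagree, the field is left empty instead of guessing.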

That's where LLM Challenge comes in. There is no hallucination possible, because two different large language models from two separate vendors cannot hallucinate the same value and agree on it. That never happens. So that's why you don't have to worry about hallucinations in Unstract. Now, as far as cost is concerned, we have two other technologies called Single Pass Extraction and Summarized Extraction, which again use a large language model to compress your prompt and compress the document, reducing both latency and cost. In general, these are some of the features that we have to help you practically deploy large language models in production. Typically you get up to 7x savings when you turn on these features. That's how these work. Now, like I said, let's move on to the meat of the discussion and how things work.

I will give you a quick overview of the Unstract platform. We will take one particular use case and see how that works, and then we'll have that deployed as an API, give it a document, and see what kind of response we get. We'll take it from there. And then we move on to the different strategies you can use with vector databases. In this case, we'll use Zilliz Cloud, and we'll see what the impact is on structuring these documents using Zilliz. So this is the platform. Here we have Prompt Studio.

We have a bunch of Prompt Studio projects here, if this will load. Let me refresh this. What I want to do is show you a quick tour of Prompt Studio, where the various components are and how things work. Then we will go through one particular use case. I especially want to show you the API and how it works, and then we will take it from there. It's taking a lot of time. Let me go, I have another instance.

Let me check this one out. Okay. Load demos, right? Okay, this is taking time. That's okay. Let's move on.

I'll show you some of the things that we'll do. Okay? What we want to do is basically extract structured information from some documents. In this particular case, I have two large language models configured. I have a 10-Q form from Apple. These are standard forms that public companies file with the SEC every quarter, and they have a huge bunch of information.

In general, I think we are trying to extract 10 different items. So, the name of the registrant; I have the JSON field here. Ultimately, I want to convert this document into this JSON format, where we have the registrant name, the commission file number, jurisdiction, state, employer ID, the address, telephone number, security details, the risks and risk factors, and the income details for this quarter and the same quarter last year. This is what we are trying to do. In this particular case, I have two large language models configured. One is GPT-4 Turbo, and the other one is Claude Instant 1.2. For each of the prompts, you can see the cost is actually pretty high. The reason is that this document is actually converted to this raw view. As you can see, we call this layout-preserving technology, which is our text extractor, LLMWhisperer.

When you preserve the layout, it turns out that large language models are really good at reading this kind of stuff. So rather than just a text dump, we preserve the layout, and we quite literally insert text to be able to do that. In this particular case, we are not using a vector database. So what is happening is that every time we try to extract something, this whole context is sent to a large language model. That's the reason why the cost is very high, and the token counts are very high as well. You can see the same for the file number, jurisdiction, and state. These are all text fields that we're extracting, and you can compare the different extractions by the two large language models we have. Then we are getting the address here. We are getting the address, the state, and the zip code separately, and the city as well.

And you can compare between these two large language model extractions. Now, when it comes to more complex types, we have a JSON field. There are various types of fields: text, number, email, boolean, date, and all that. When you set this to JSON, in the prompt you simply describe the JSON you want, and we do the extra prompt engineering required to ensure that you do get JSON output. Then we have the disclosures here. Then the income details. This is a slightly more complex prompt. We are saying that, for the current quarter, get me the name of the quarter, the net sales, and the net income, and then we have some extra instructions here as well.

It turns out that Milvus is really, really good at this. Even though you have a complex prompt, I can actually look at the chunks used here, and I can see what kind of data is being returned from the vector database. But in this particular example, we are not using a vector database. For some reason, Claude is failing, but GPT is giving a response, and I have verified that this is indeed the correct response. And then we have risk factors and things like that. Now, let's deploy this as an API. What you can do in Unstract is export this as a tool, and you can then deploy it as an API.

If you go to APIs here, I have one endpoint per Prompt Studio project. Let's take, for example, the project that we were just looking at, where we are not using a vector database. We are simply sending the whole document for every prompt, for every field extraction. So this is what we are doing. Now, I have this loaded in my Postman here. In fact, for every API, you can simply download a Postman collection to call this API. And of course, you can manage the keys. You can also see the code snippets to call this API.

To remind you again, this particular API endpoint does not use a vector database. So we'll see what the cost is for such an extraction. That's the general idea. If I go back to Postman, I've loaded this particular endpoint, the no-vector-DB endpoint. I quite literally send one document, the latest Apple 10-Q report for quarter two of this year, over to the API, and I should get structured JSON data back. Now, I've turned on metadata, meaning it'll return the chunks that were sent to the large language model for extraction as well. So you can see that the chunks are there. I'm going to skip this whole section and come down. So now we've sent the document over and got structured data back. We have the output here: we got the address, the file number, the disclosures, and the income details for the June-ending quarter this year, and then last year as well.

And the other details, like risk factors, security details, and all that. But very importantly, what I want to draw your attention to is that since we did not use Milvus in this particular example, our cost is through the roof. For a simple extraction like this, we have blown over a quarter million tokens, because we use the whole context without using a vector database. And then we have 799 output tokens as well. Like I said, we've blown close to $3 for this particular use case. Of course, we also spend some on embedding, but the embedding cost is not very high. But this is a lot. Luckily, we can use Zilliz Cloud in this particular case, and we can see how we can quite simply reduce the cost of this. Now, let me go back to Unstract and look at some of the projects I have. We'll look at the same example that we looked at earlier, but with Zilliz enabled. If you look at the settings for this particular project, I've configured GPT-4 as the default extractor.

And then I also have Claude Instant 1.2 as a secondary extractor, just to compare them side by side. I'm using Azure OpenAI Ada for the embedding, and I'm using Milvus on Zilliz Cloud for the vector database. And I'm using LLMWhisperer in text mode as the text extractor. The text extractor basically converts this document into this kind of view, where we are preserving the format, and tables are beautifully preserved, which is super important for large language models. And there we go.

We have the exact same things again: 10 different prompts, starting with registrant name, file ID, and all that. In this particular case, I think I've also enabled LLM Challenge, using Gemini Pro as the challenger. So it's challenging the extraction, and you can see that here. This is the input, and you can see that the input is very small because of the vector database, and you can see how the price can really drop now. It's a very small context now. So there's the extraction, and then there's a challenge. We generate a score for each of the extractions. City, state, zip code, full address: everything got five out of five, which is awesome. We all love perfect grades.

And then the result is finally set to this. So that is LLM Challenge in action. Now you can see that the cost has come down dramatically. Of course, you can also see that Claude is a lot cheaper than GPT-4, and you can see that from something like 87,000 tokens, the token count has dropped to mere thousands. How is this happening? It is happening because we have turned on the vector database in this particular case. Let me go through some of the details here.

Let me edit this. We have a chunk size of 1,024, we have an overlap of 128, and we are using the simple retrieval strategy in this particular example. I want to go through what simple retrieval is versus the other strategy in just a bit. I'll return to the presentation to walk you through this.
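To make the chunk size and overlap settings concrete, here is a minimal sketch of fixed-size chunking with overlap. It is an illustration only: the talk does not say whether the 1,024 and 128 values are measured in characters or tokens, so this sketch assumes characters, and `chunk_text` is a hypothetical helper, not a function from Unstract or Milvus.

```python
from typing import List


def chunk_text(text: str, chunk_size: int = 1024, overlap: int = 128) -> List[str]:
    """Split text into fixed-size chunks where consecutive chunks share `overlap` characters.

    Assumption: sizes are counted in characters (the source does not state the unit).
    """
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be non-negative and smaller than chunk_size")
    step = chunk_size - overlap  # how far the window advances each time
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the window already reached the end of the text
    return chunks


if __name__ == "__main__":
    sample = "".join(chr(97 + i % 26) for i in range(2000))
    pieces = chunk_text(sample)
    print(len(pieces), [len(p) for p in pieces])
```

The overlap means a sentence that straddles a chunk boundary still appears whole in at least one chunk, at the price of storing some text twice.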

If you look at this here, you can click on this and also look at the chunks used. In this particular case, if you look at the prompt, we have asked: what is the address of the registrant? Form a JSON object with the following fields: full address, city, state, zip code. This is first sent to the vector database, where the whole document's raw text lives. This is the full raw text, but the full raw text is not used in the extraction. The prompt is first sent to the vector database, in this case Zilliz Cloud, and then Zilliz Cloud responds with these chunks.

And if you look at this here, this is the exact chunk we need, because it has the address of the principal office and all that. So Zilliz has figured out that this is the chunk which is relevant. I'm sure there are other chunks where addresses are present and things like that, because this is how the system actually works: it is basically looking for addresses across the whole document and getting the relevant chunks. But you can see how tremendously the cost has now dropped. Now let's do one thing. I have this one, the simple retrieval project, also launched as an API.

You can see this one here, right? This 10-Q parser, simple retrieval. I'm going to go to Postman here. Let me close this now, or let me keep this open, because I want to compare and contrast with this cost here, right? So let me open this simple retrieval endpoint, and I will send over the same file, the Apple 10-Q, to this API, which is now using Milvus. And there you go. This time, if you see, the chunks are also very small, right? The whole document is not present.

Because I've enabled metadata, you see all this. Otherwise, generally, the API doesn't respond with all this. Now, coming to the cost, what a huge difference, right? The cost has gone down from $2.86 to just 36 cents. I think that's like an 8x reduction in cost, right? That's what you get by just turning on the vector database and using even something like simple retrieval. But let me go back and talk a little bit about retrieval, and especially simple retrieval. Let's go through how this whole thing works.
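As a quick check on the figures quoted in the demo, the arithmetic below uses only the two costs mentioned for the same document: $2.86 with the full document and $0.36 with simple retrieval. The function name is made up for this sketch.

```python
def cost_reduction(full_cost: float, retrieval_cost: float) -> tuple[float, float]:
    """Return (reduction factor, fraction of cost saved)."""
    factor = full_cost / retrieval_cost
    saved = (full_cost - retrieval_cost) / full_cost
    return factor, saved


if __name__ == "__main__":
    # Figures quoted in the demo for a single Apple 10-Q extraction run.
    factor, saved = cost_reduction(2.86, 0.36)
    print(f"{factor:.1f}x cheaper, {saved:.1%} saved")
```

The ratio comes out just under 8, which matches the roughly 8x reduction mentioned in the talk.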

Now, I know that some of you may be advanced users here, but you will have to bear with me for the rest of the audience who may not be familiar with this, who may be new to vector databases. In general, what happens is that we take a query or a prompt; in this case, let's say we are trying to extract the address. You saw that prompt, it was a pretty simple prompt. We send it to an embedding model, and we already have the document stored in the vector database. Then we ask the vector database: given this particular prompt, what are the relevant chunks? Those chunks are retrieved, combined with the user prompt, and sent to a large language model, and we get the response from the large language model.
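The flow just described can be sketched in a few lines. Everything here is a stand-in chosen for illustration: `embed` is a toy bag-of-words counter rather than a real embedding model, the chunks live in a plain Python list rather than in Milvus or Zilliz Cloud, the sample chunks are invented, and the final call to a large language model is left out, so the sketch stops at building the text that would be sent to it.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, chunks: list[str], top_k: int = 2) -> list[str]:
    """Simple retrieval: embed the whole prompt once and take the top_k closest chunks."""
    query_vec = embed(query)
    ranked = sorted(chunks, key=lambda c: cosine(query_vec, embed(c)), reverse=True)
    return ranked[:top_k]


def build_llm_input(prompt: str, chunks: list[str]) -> str:
    """Combine the retrieved chunks with the user prompt for the language model."""
    context = "\n---\n".join(chunks)
    return f"Context:\n{context}\n\nTask:\n{prompt}"


if __name__ == "__main__":
    # Invented sample chunks, not taken from any real filing.
    document_chunks = [
        "The address of the principal executive office is One Example Way, Springfield.",
        "Net sales increased during the quarter.",
        "Risk factors include supply chain disruption.",
    ]
    prompt = "What is the address of the registrant?"
    print(build_llm_input(prompt, retrieve(prompt, document_chunks, top_k=1)))
```

Only the selected chunks reach the language model, which is where the token savings come from.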

This is what RAG actually is, right? But this is very simplistic. This is what we call simple retrieval, meaning that we simply take the query, send it to the vector database, get the chunks, and then use them. Now, the weak point here: you have to understand that vector databases have strengths and weaknesses. If you send the vector database a very, very complex prompt and hope that it will figure out the right chunks to send you, that's a huge problem. We'll look at an example of a prompt that shouldn't be sent directly to a vector database. Vector databases are specialized in arranging information in a vector space and then retrieving the right information that you need. That's their strength. We shouldn't expect them to give us the relevant chunks by just giving them a very complicated prompt. That's not the right way to approach it. We'll look at how and why that is.

So if you look at the simple retrieval strategy that we just used, meaning that we take the prompt and, after embedding, send it directly to a vector database and ask for relevant chunks, there are some advantages and disadvantages. The simple retrieval strategy is pretty much plain RAG, as in the slide that we just went through. It works well when the prompt is simple and there's a direct correlation between your prompt and what you want to extract.

That is, the prompt doesn't carry a lot of information about how to format or what to avoid. Prompts can be really long, and what you need to extract can just be somewhere in the prompt, and that's usually a problem. For simple prompts, like the one we had, the simple retrieval strategy works really well. You should be able to get the right chunks. Your mileage may vary, but usually you should be able to get the right chunks. This will also be a cheaper approach, because what you're doing is taking the question, embedding it, sending it to the vector database, getting relevant chunks, sending them to the large language model, and you're pretty much done.

Now, what happens when the prompt itself is very complex? We'll look at some of those cases and then come back to the presentation. I have another project which has sub-question retrieval enabled. I'll show you where that is done, and I'll also show you what sub-question retrieval is and how it works. So you go to settings here, and this time you say edit.

Now you can see that I've changed the retrieval strategy from simple to sub-question. That's all you need to do in Unstract. With sub-question retrieval, what basically happens is that we use a large language model to take this prompt and convert it into a bunch of sub-questions as to what to retrieve. So let's say you send it a large prompt like this. We are not sending the vector database the single prompt. What we are doing instead is sending this prompt to a large language model and asking it: what should be extracted? Give that to me as separate questions. Then we have a loop in which we send all the sub-questions generated by the LLM from the prompt to the vector database, asking it to give us the relevant chunks. Once we have all the chunks, we look for duplicate chunks and remove them. Then we send those chunks to a large language model along with the question, and hopefully we'll have better results.
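The loop described above can be sketched as follows. This is only an illustration under stated assumptions: `fake_llm_sub_questions` returns canned questions instead of calling a real model, `keyword_search` is a crude word-overlap lookup standing in for the vector database, and the sample chunks are invented. None of these names come from Unstract or Milvus.

```python
import re
from typing import Callable

# Invented sample chunks standing in for an indexed document.
DOCUMENT_CHUNKS = [
    "Condensed consolidated statement of operations: net sales and cost of sales.",
    "Income statement summary: net income for the quarter.",
    "Risk factors: supply chain disruption and currency fluctuation.",
]


def words(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def keyword_search(question: str, top_k: int = 2) -> list[str]:
    """Hypothetical stand-in for a vector database query: rank by shared words."""
    q = words(question)
    scored = [(len(q & words(chunk)), chunk) for chunk in DOCUMENT_CHUNKS]
    scored = [pair for pair in scored if pair[0] > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [chunk for _, chunk in scored[:top_k]]


def fake_llm_sub_questions(prompt: str) -> list[str]:
    """Hypothetical stand-in for the LLM call that splits a prompt into sub-questions."""
    return [
        "What is the condensed consolidated statement of operations?",
        "What is the income statement?",
    ]


def sub_question_retrieve(
    prompt: str,
    generate_sub_questions: Callable[[str], list[str]],
    search: Callable[[str], list[str]],
) -> list[str]:
    """Query once per sub-question, then merge the hits and drop duplicate chunks."""
    seen: set[str] = set()
    merged: list[str] = []
    for question in generate_sub_questions(prompt):
        for chunk in search(question):
            if chunk not in seen:  # the same chunk often matches several sub-questions
                seen.add(chunk)
                merged.append(chunk)
    return merged


if __name__ == "__main__":
    long_prompt = "From the statements of operations or income statements, respond with a JSON object."
    for chunk in sub_question_retrieve(long_prompt, fake_llm_sub_questions, keyword_search):
        print(chunk)
```

The merged chunks would then be sent to the language model together with the original prompt. The extra model call and the larger merged context are why this strategy costs more than simple retrieval.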

Now take, for example, this particular prompt. It's slightly more complicated than a simple prompt like "what is the address of the registrant?" Here we have: from the condensed consolidated statements of operations or income statements, respond with the following JSON object. These are all the things that we're asking for, and we have further instructions here. Now, if you really think about this, if you send these instructions to a vector database, it's not going to give you anything relevant. What is relevant for us is the consolidated statements of operations or income statements; that's all we are interested in. We are not really interested in this portion here. So sub-question retrieval actually works better in this case, because we send this prompt to a large language model and ask it: hey, what is the user trying to extract? The large language model is probably going to respond with two questions: what is the condensed consolidated statement of operations, and what is the income statement? So probably we are going to get two, and then we go into a loop: we take these sub-questions and ask the vector database to give us chunks related to these sub-questions.

Then we take all those chunks, remove duplicates, send them along with this prompt to a large language model, and get the response. So very likely we'll get a good response. Now, in this particular case, we are getting a good response regardless, because it is simple enough; it's only like 90 pages, and we are still getting a good response. But I'm very interested to know the cost of this particular strategy. So let's go back to Postman here. If you look at it, without any vector database, it's at $2.80; using the simple retrieval strategy, it is at 36 cents.

Let's go to the sub-question parser here. I have this open. Let me send the same file over to this particular API endpoint, which uses the sub-question retrieval strategy. This time we have a larger context, because we have more chunks, which means that the cost is going to increase. As you can see, we have spent 50 cents this time, because, as you can quite easily see, it's a larger context compared to, let's say, this context, which is a lot shorter. So, 50 cents, but like I said, in this particular use case the results are actually looking pretty good. No problem here. We got everything we wanted: security details, risk factors, all the right details, and even the income from the current quarter and the same quarter last year. We did get good results, but now we will twist this. What we'll do is make the simple strategy fail.

How do we do that? We'll make the prompt very, very complex. Now, to tell you one thing: if you take a look at this particular project, there are 10 prompts, and this means that we'll have to call the large language model 10 times. Not a good idea, right? No professional Prompt Studio project will actually look like this. It's usually very, very compressed, because we want to save tokens and we want to save latency. So let's look at, for example, the same project, the same 10 fields we want to extract, or rather the core information we want to extract. We have something called the compact 10-Q parser with simple retrieval.

Let's go look at this. Now, this becomes very interesting. I have a single prompt here. What I've done is I've said: create a JSON object of the following structure with JSON objects under it. This time we have disclosures, income details, and risk factors, and I'm describing the individual JSON objects here in the same prompt.

I'm saying, disclosure: this is how the sub-object should look. Income details: this is how it should look. Risk factors: it's a fairly simple one. Now I have, again, two large language models configured. This time it's not using a lot of tokens, as you can see.

But then, if you look at the results, I am seeing null here. Let me expand this. Take a look at the responses from these two large language models side by side. First of all, we did not get the right values. We got null here. That is very sad. But then again, if you look at net income here, this is actually wrong. We know that from the previous prompts, right? So it was 6 54 and 1 57, something like that.

This is also wrong. Now, the problem is we are using the simple strategy. If you go to settings here and look at the strategy, we are using the simple retrieval strategy. The problem is that this whole large prompt is being sent to the vector database, and we are asking it to give us relevant chunks. The chunks, along with the prompt, are being sent to both these large language models, and it turns out that we did not get the right chunks. If you look at these chunks, we are not finding any of the financial data that we need.

We are probably finding some risk information, but we're not finding the right financial data, and that's why we are seeing null here. So this is to be expected. We have to understand how to use the vector database correctly, which is super important. Now, I have the very same project, no changes made, exactly the same prompt, but with sub-question retrieval. So, the same complex prompt. But this time, what I've done is that in the settings, I have switched from simple retrieval to sub-question retrieval. Now what happens is that, like I explained earlier, we use a large language model and ask it to generate sub-questions, which are then sent to the vector database.

And this time, if you look at it, we have the right values from both the large language models. Of course, there's a difference, because the way the large language models are summarizing the risks is different. We are asking them to highly summarize; we said "highly summarized value." So Claude is going overboard and really summarizing; it's generating a very concise summary, whereas GPT-4 is still keeping the summary a little bit more verbose. But hey, the great thing is that although the prompt is exactly the same, simply by switching from simple to sub-question retrieval, we got the right answers, which is awesome.

So now I'm hoping that you got a good idea about the Unstract platform, but also about Milvus and how you can use it to reduce cost. Let's quickly summarize, and then, since we are almost on time here, we'll take some questions. We had the simple retrieval strategy, but then we also have the sub-question retrieval strategy, where we use a large language model to generate retrieval-related sub-questions. And in a loop, we ask the vector database for relevant chunks.

We eliminate duplicates. Of course, you also realize that the reason the simple strategy failed is that these chunks are all over the document. So it was struggling to get the right chunks without sub-questions. If you really look at the summary of the whole discussion here: if you use the full document, you usually get very high accuracy, because the large language model has the full context. In this case, it was only 30 pages or so, so it would fit into the context of a large language model. The problem is the cost: although the accuracy is going to be really, really high, it's not going to be viable at scale. Then came simple retrieval. It has the lowest cost, because typically you just get a few chunks and then ask a large language model, and it answers. In our case, it was about eight times less than the full document. So this is where vector databases really shine for structuring use cases.

But in general, with simple retrieval, you can't expect great accuracy, especially when the prompts get more and more complex. Usually you should avoid using this strategy. It's okay for quick testing and things like that. It is usually faster, because a large language model is not involved in generating sub-questions, so you tend to save some time and some cost. But then again, it's not the best retrieval strategy out there.

The sub-question strategy, on the other hand, just makes things so much better. It understands the prompt, it generates the necessary sub-questions, it asks the large language model. You get pretty high accuracy, not as good as the full document, because nothing can beat having all the information, all the context. But then again, it comes a close second as far as accuracy is concerned, and you can really leverage the power of a vector database along with large language models to solve for this particular use case. So that's our discussion here. We have some time. I will hand it back over to Tim to see what we can do.

Yeah, we have some questions.

Fortunately your colleague Naren has been answering some of them, but let me see which ones we haven't answered. How about this one from Aaron Cohen: does your system report the confidence level for the extraction, or allow for manual review and fine-tuning?

That is correct, Aaron. LLMChallenge generates a score, using a second large language model, for the extraction of the first. And manual review is also something that is there in the system. You can set the destination of a Prompt Studio project as a manual review queue, where you get the source document and the extracted values side by side. If you click on an extracted value, it'll highlight it in the source document, irrespective of whether the value has changed or not. The format may have changed significantly; it doesn't matter. So yes, you can always do that.

Okay, we got another one. Is Unstract different from Unstructured.io and LlamaParse in that with those, you get generic extractions even if they're powered by LLMs, while Unstract allows you to specify prompts for fields of interest?

That is correct. Unstructured.io and LlamaParse are text extraction services. They prepare documents for consumption by large language models. Unstract is purpose-built for structured data extraction from unstructured documents. So LLMWhisperer is the equivalent of Unstructured.io and LlamaParse, but the open source Unstract platform is a lot more.

And then you have LLMChallenge and all the other stuff as well.

Very cool. That one was answered. Are we trying to extract exact values or summaries and analysis? Probably both are possible.

Yeah, both are possible. You want to extract exact values, which is super important. When you structure unstructured documents, you want to do that repeatably, and that's where Prompt Studio comes in.

Very cool. I think we've got time for one more here. Can you comment more on the reliability of getting the LLM to decompose a complex query into sub-questions? What is the accuracy and success rate?

That's a great question. Here's the thing: this is where LLMs and vector databases work in tandem. You have to tune your prompt and give it a lot of documents. That's why you can load a lot of sample documents in Prompt Studio. As I said, a representative sample.

So, number one, you have to give it a good representative sample. Usually we recommend 15 to 20 documents of the same document type with a wide variation, and then you tweak the prompts. Make sure that you're getting good chunks from the vector database. You can view them in the UI to make sure that you are indeed getting good chunks.

And then you tweak your prompts. Depending on the large language model, the quality of the sub-questions will change. Based on what you're trying to extract, you will have to fine-tune and go from there.

Pretty cool. This one's interesting. I don't know what they were referring to.

Maybe this will make sense to you. Oscar Monroe asked: interested in current model architectures that are being showcased for use in my own projects. Will this be available? The concepts were great.

Yeah. So I'm assuming that you have some kind of a fine-tuned model yourself.

So one of the connectors that Unstract has is the Ollama connector. You can host your model on Ollama and connect to it, so absolutely, that's something you can do.

Very cool. I think we're out of time. I think that was a pretty awesome webinar.

We got a bunch of good questions, and a decent number of people attended. We will provide the slides and the recording of this pretty soon. It was great having you here, and I can't wait until we have Unstract in person. I'm going to make you go through some of my documents maybe, and then we'll have a cool meetup there. But thanks, everybody, for coming.

Thanks for the great questions, and we will see you. I think we've got another webinar next week. We'll put that in there. There's a ton of great stuff going on, and thanks again, everyone.

Thank you for hosting us.

Thank you. Yeah, anytime. It was awesome. Bye. See you everyone.

Meet the Speaker

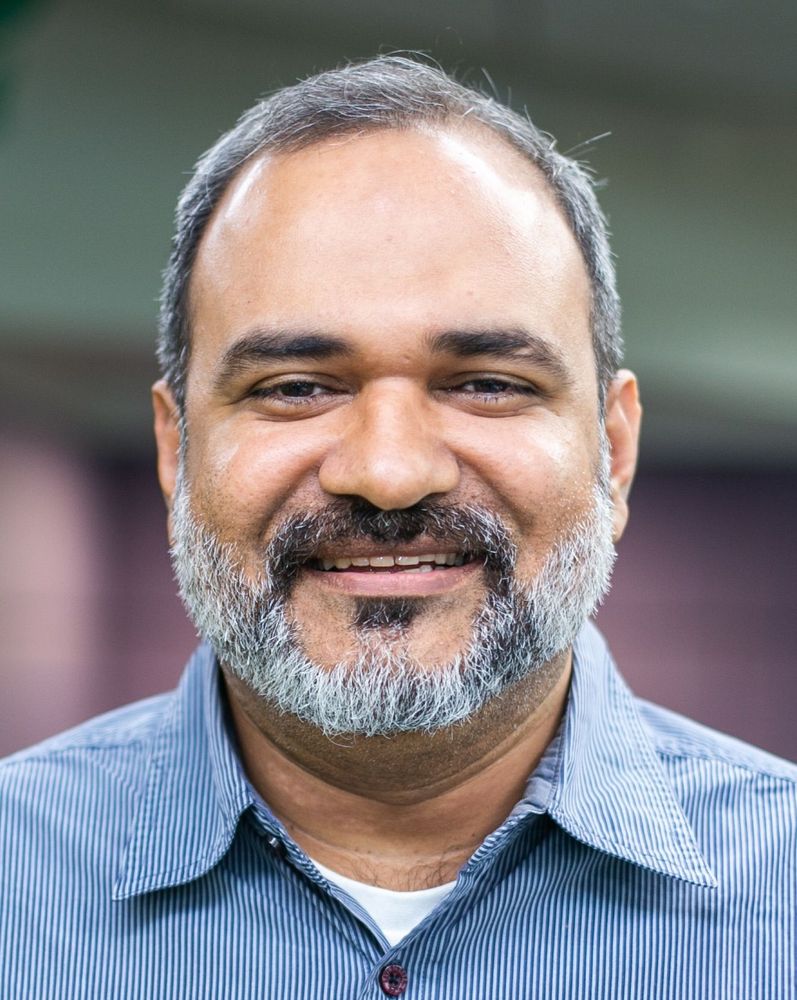

Shuveb Hussain

Co-Founder & CEO, Unstract

Shuveb Hussain is the co-founder and CEO at Unstract, an open source startup building an LLM-powered platform that extracts data from unstructured documents, helping automate critical business processes. Before co-founding Unstract, he was VP of Platforms Engineering at Freshworks (NASDAQ: FRSH). He’s also an electronics hobbyist. He no longer voluntarily corrects spelling and grammar mistakes committed by others.