OpenAI's ChatGPT

A guide to the new AI Stack - ChatGPT, your Vector Database, and Prompt as code

Background on OpenAI's ChatGPT

ChatGPT (Chat Generative Pre-trained Transformer) is a conversational AI tool built on OpenAI's GPT-3.5 and GPT-4 families of large language models (LLMs) and fine-tuned with supervised and reinforcement learning techniques. OpenAI designed ChatGPT to let users make natural-language inquiries. The underlying models were pre-trained on vast amounts of text data, including social media posts, news articles, and other sources of human communication. Thanks to this extensive pre-training, ChatGPT can generate natural and relevant responses to a wide range of text inputs. For example, if you ask ChatGPT a question about a particular topic, it will usually provide a response that is both relevant and informative. ChatGPT is also accessible to anyone with basic computer skills, which makes it a valuable tool for anyone who uses natural language processing in their work or research.

ChatGPT Basics

ChatGPT is designed to simulate human conversations and can be deployed in web applications, mobile applications, and social media platforms. To use ChatGPT, a user types a query into the chat window, and the chatbot analyzes the question using Natural Language Processing (NLP) algorithms. It then returns the response it judges most relevant to that query.

ChatGPT can be customized to suit different user needs. For example, users can choose the chatbot's tone, language, and style to make it more personalized.
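When ChatGPT models are accessed through OpenAI's chat API, one common way to set tone, language, and style is a system message placed before the user's query. The sketch below is illustrative only: the function names, the default persona values, and the wording of the system prompt are our own assumptions, and the `gpt-3.5-turbo` model name is just one possible choice.

```python
def build_messages(user_query, tone="friendly", language="English", style="concise"):
    """Build a chat message list whose system message sets tone, language, and style.

    The persona wording here is a hypothetical example, not an official template.
    """
    system_prompt = (
        f"You are a helpful assistant. Respond in {language}, "
        f"in a {tone} tone, and keep your answers {style}."
    )
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_query},
    ]


def ask_chatgpt(user_query, model="gpt-3.5-turbo", **persona):
    """Send the messages to the Chat Completions API.

    Requires the `openai` package (v1 or later) and an OPENAI_API_KEY
    environment variable; the model name is an assumption.
    """
    from openai import OpenAI  # imported lazily so build_messages works without it

    client = OpenAI()
    response = client.chat.completions.create(
        model=model,
        messages=build_messages(user_query, **persona),
    )
    return response.choices[0].message.content
```

Calling `ask_chatgpt("What is a vector database?", tone="formal", language="French")` would then request a formal answer in French. In the ChatGPT web interface, the same effect is usually achieved by stating these preferences directly in the conversation.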

OpenAI continues to improve ChatGPT's responses over time, in part by using user feedback. ChatGPT is itself built on machine learning and deep learning techniques, and it can be combined with other tools to enhance its accuracy and efficiency.

Can ChatGPT write software?

ChatGPT can generate text, including code, in various programming languages like Python, Java, JavaScript, C++, and more. However, the code generated by ChatGPT may not always be correct or optimal, and it should be reviewed and tested by a human programmer before being used in production.

ChatGPT's ability to write code is also limited to the programming paradigms, libraries, and syntax it has been trained on. It may therefore struggle to generate code for some specific or specialized domains, because it can only generate code within the scope of its training data.

Although ChatGPT can generate code, it is not a substitute for human programmers. A human programmer can provide context, insights, and experience that an AI model lacks. For instance, a programmer can ensure the code is optimized, efficient, and adheres to industry standards.

Limitations of ChatGPT

ChatGPT has several limitations to keep in mind:

Limited Knowledge Base

A limitation of ChatGPT is its limited knowledge base. While it has been trained on vast amounts of information, it may lack the specific information needed to answer certain questions. In those cases it can produce plausible-sounding but incorrect or nonsensical answers, sometimes referred to as "hallucinations," which then need to be corrected or completed. You can see a few examples of hallucinations compared to the correct answers in this blog post.

Lack of Context

One of the main limitations of ChatGPT is its inability to fully understand the context of a conversation. While it can generate responses based on the words and phrases used in a conversation, it may struggle to understand their underlying meaning. This lack of context can result in responses that are irrelevant or inappropriate to the discussion.

Bias

Like all language models, ChatGPT may exhibit bias in its responses. This bias arises because it learns from the data it is trained on, which may contain biases and stereotypes. This can result in responses that are discriminatory or offensive.

Lack of Emotional Intelligence

ChatGPT is not capable of understanding emotions or empathizing with users. This lack of emotional intelligence can result in responses that are tone-deaf or insensitive to the user's emotional state.

Limited Multilingual Support

ChatGPT is primarily trained on English data, so it may be less effective in other languages. While it can generate responses in different languages, the quality and accuracy of these responses may be lower than those generated in English.

While ChatGPT is a powerful technology, it is vital to be aware of its limitations and use it appropriately.

What are some ChatGPT use cases?

ChatGPT, a state-of-the-art language model, can be used in various applications to automate tasks and provide personalized assistance to users. Here are some of the use cases for ChatGPT:

Virtual Assistance: ChatGPT can be integrated into virtual assistants like Siri or Alexa to provide human-like responses to user queries. Virtual assistants can improve the overall user experience and reduce the workload of human assistants.

Support Chatbots: ChatGPT can be used to create support chatbots that can handle customer queries and provide relevant solutions. With ChatGPT, chatbots can understand user intent and provide personalized responses based on the context.

Content Creation: ChatGPT can generate text for content creation applications like article writing, email marketing, and social media posts. Given a topic or prompt, ChatGPT can generate draft content within seconds.

Language Translation: ChatGPT can translate content from one language to another. This feature can improve communication between people who speak different languages or provide localized content to users.

These are just a few examples of how ChatGPT can automate tasks and improve user experiences. As ChatGPT continues to evolve, more use cases will emerge, making it an essential tool for businesses and individuals.

Can ChatGPT be integrated with other applications?

OpenAI provides a set of APIs and libraries that allow developers to integrate their applications with ChatGPT. The officially supported libraries are for Python and Node.js, but the community has already provided impressive support for C#/.NET, Crystal, Go, Java, Kotlin, PHP, R, Ruby, Scala, Swift, and Unity.

Tools and plugins that work with ChatGPT

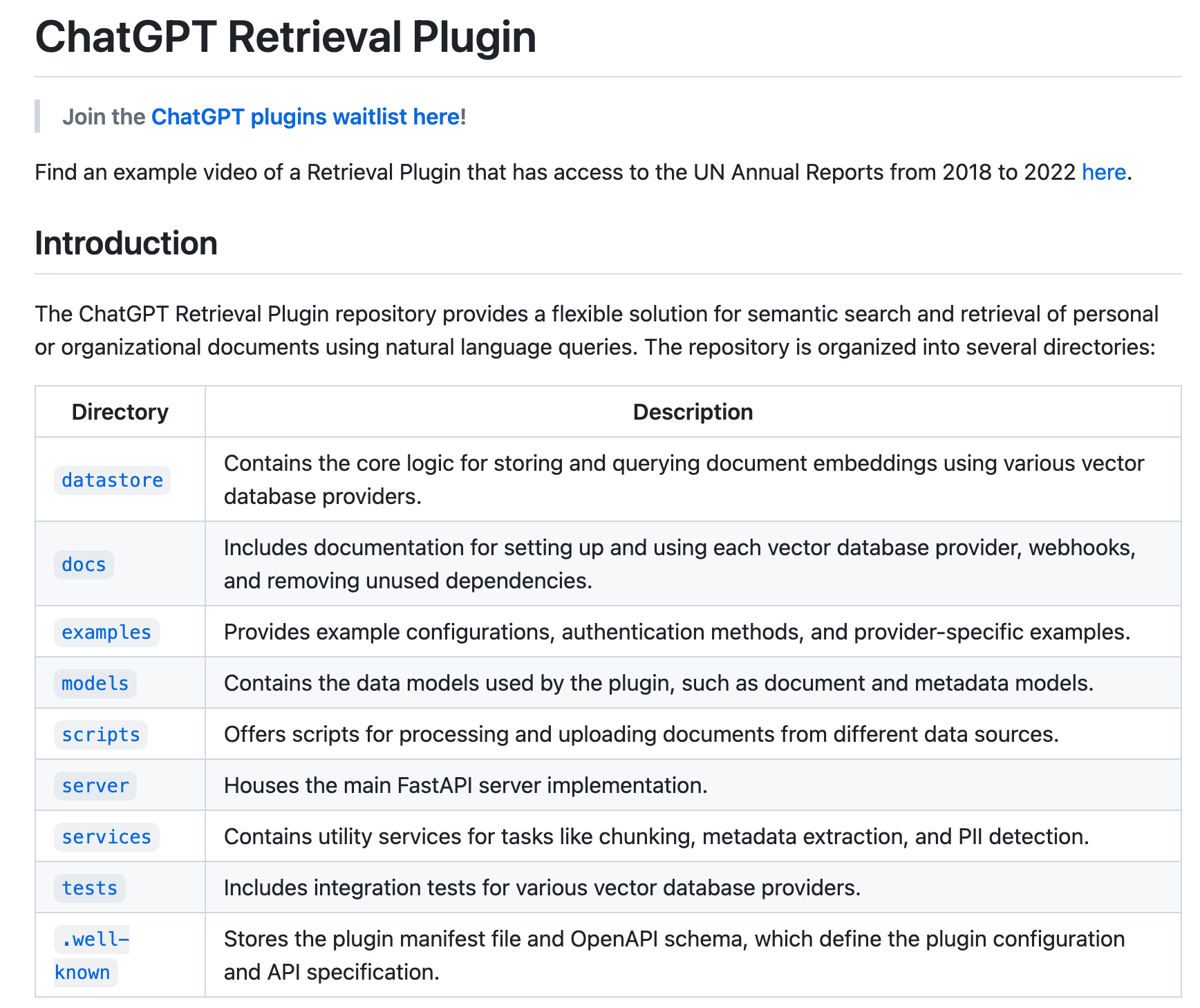

ChatGPT Retrieval Plugins

The Knowledge Base Retrieval Plugin is a tool that allows ChatGPT to extract important information from document snippets hosted by an external party. By expressing needs or asking questions in natural language, ChatGPT can retrieve necessary data from various sources such as files, notes, or emails that you have collected and stored. This feature helps ChatGPT to provide accurate and reliable information quickly and efficiently.

ChatGPT Retrieval Plugin GitHub Repository

There is a Retrieval Plugin for both Milvus and Zilliz, and enterprises can benefit from this plugin by streamlining internal document retrieval with ChatGPT Plugin. By using this plugin, employees can access accurate and up-to-date information from a single source. Additionally, developers can utilize the plugin to create and manage their own knowledge bases.

AutoGPT

Auto-GPT is a Python application recently shared by a developer named Significant Gravitas on GitHub. Utilizing GPT-4 as its foundation, the program empowers the AI to function independently without requiring prompts from the user for each action. The notion of “AI agents” arises from this feature, whereby the AI can use the internet and execute commands on a computer autonomously without any user intervention.

MiniGPT-4

MiniGPT-4 is a cutting-edge vision-language model that enhances the understanding of visual and textual information through advanced large language models. It includes a vision encoder featuring pretrained ViT and Q-Former, a single linear projection layer, and an advanced Vicuna large language model. To align the visual features with the Vicuna, MiniGPT-4 only requires training the linear projection layer. MiniGPT-4 has many capabilities similar to GPT-4, such as the ability to generate detailed image descriptions and to create websites from hand-written drafts. Additionally, it has other emerging capabilities, such as writing stories and poems inspired by given images, providing solutions to problems shown in images, and teaching users how to cook based on food photos.

Introducing the new AI stack - ChatGPT+Vector Database+Prompt-as-code (CVP)

ChatGPT is impressive, but its limited knowledge base sometimes leads it to hallucinate answers when asked about unfamiliar topics. A vector database can reduce this handicap. This is the idea behind the new AI stack: ChatGPT, a vector database, and prompt-as-code, or the CVP Stack. ChatGPT does a great job of answering natural language questions. When it is combined with a prompt that ties together the user's query and text passages retrieved from the vector database, it is less likely to hallucinate and more likely to generate a relevant and accurate response.

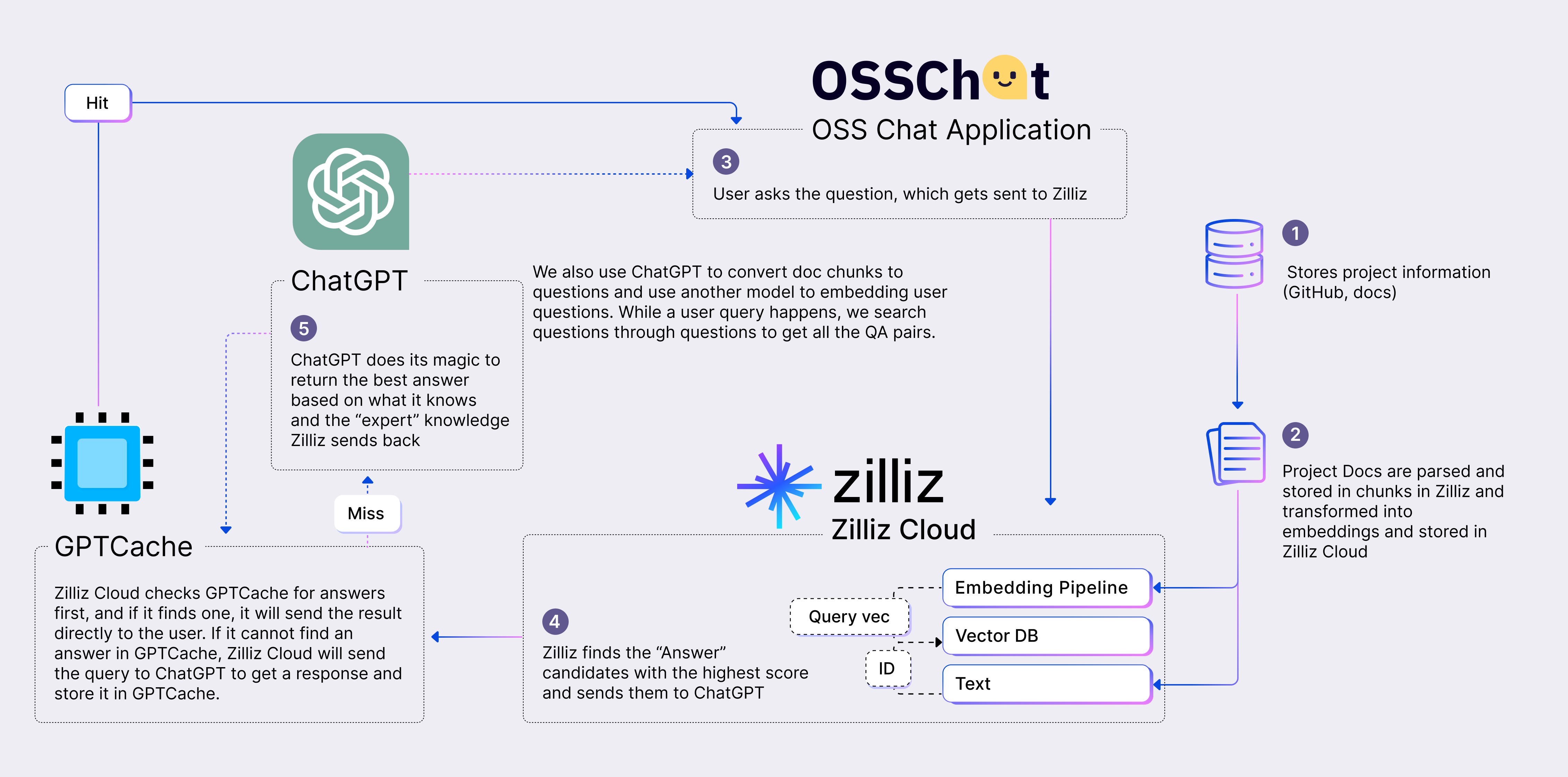

OSS Chat: A Chatbot for Technical Knowledge about Open-Source Projects

OSS Chat is a chatbot that provides technical knowledge about your favorite open-source projects. The current release of OSS Chat supports Hugging Face, PyTorch, Mini-GPT-4, Auto-GPT, OpenCV, LangChain, Next.js, Ray, React, Vue.js, React Native, and Milvus, and we plan to expand it to several other popular open-source projects. OSS Chat was built using OpenAI's ChatGPT and a vector database, Zilliz. The best part? We are offering it as a free service to anyone who wants to use it. If you want your open-source project to be listed, let us know.

OSS Chat diagram.png

ChatGPT is an excellent technology for answering natural language queries. However, it is constrained by its limited knowledge base, which sometimes results in hallucinated answers when it is asked about unfamiliar topics.

To overcome this constraint, we have introduced the new AI stack, ChatGPT + Vector database + prompt-as-code, which we call the CVP Stack.

When ChatGPT is given a prompt that links the user's query with the retrieved text, it can generate a relevant and accurate response. This approach reduces the chance that ChatGPT produces hallucinated answers.
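To make the "prompt-as-code" idea concrete, here is a minimal sketch of a prompt template that ties a user's query to retrieved passages. It is not the actual OSS Chat prompt: the template wording, the `build_prompt` name, and the `max_chars` budget are all assumptions made for illustration.

```python
# Hypothetical template; the real OSS Chat prompt is not shown in this article.
PROMPT_TEMPLATE = """Answer the question using only the context below.
If the context does not contain the answer, say "I don't know."

Context:
{context}

Question: {question}
Answer:"""


def build_prompt(question, passages, max_chars=4000):
    """Number the retrieved passages, stop before the character budget is
    exceeded, and fill the template with the context and the question."""
    numbered = []
    used = 0
    for i, passage in enumerate(passages, start=1):
        entry = f"[{i}] {passage.strip()}"
        if used + len(entry) > max_chars:
            break  # keep the prompt within a rough size limit
        numbered.append(entry)
        used += len(entry)
    context = "\n".join(numbered) if numbered else "(no context retrieved)"
    return PROMPT_TEMPLATE.format(context=context, question=question.strip())


if __name__ == "__main__":
    print(build_prompt(
        "What is Milvus?",
        ["Milvus is an open-source vector database."],
    ))
```

Keeping the template in code means it can be versioned, tested, and changed like any other part of the application. The explicit instruction to answer only from the supplied context is what steers the model away from guessing.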

We have built OSS Chat as a working demonstration of the CVP stack. It leverages various GitHub repositories of open-source projects and their associated doc pages as the source of truth. We convert this data into embeddings and store them in Zilliz, and the related content in a separate data store.

When users interact with OSS Chat by asking questions about any open-source project, we trigger a similarity search in Zilliz to find a relevant match. The retrieved data is fed into ChatGPT to generate a precise and accurate response.
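The retrieval step described above can be sketched in a few lines. This is a toy stand-in, not how OSS Chat or Zilliz is implemented: the word-count `embed` function replaces a real embedding model, and the hypothetical `TinyVectorStore` class replaces the vector database with a brute-force cosine similarity scan. It does mirror one detail from the text, keeping vectors and the related content in separate stores.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy embedding: lowercase word counts. A real system would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class TinyVectorStore:
    """In-memory stand-in for a vector database (assumption, for illustration only)."""

    def __init__(self):
        self._vectors = []  # (doc_id, embedding) pairs: the "vector database"
        self._texts = {}    # doc_id -> original text: the separate content store

    def add(self, doc_id, text):
        self._vectors.append((doc_id, embed(text)))
        self._texts[doc_id] = text

    def search(self, query, top_k=2):
        """Return the top_k (text, score) pairs most similar to the query."""
        q = embed(query)
        scored = sorted(
            ((cosine(q, vec), doc_id) for doc_id, vec in self._vectors),
            reverse=True,
        )
        return [(self._texts[doc_id], score) for score, doc_id in scored[:top_k]]


if __name__ == "__main__":
    store = TinyVectorStore()
    store.add("milvus-1", "Milvus stores vectors in a collection that you create with a schema.")
    store.add("react-1", "React components re-render when their state changes.")
    store.add("ray-1", "Ray schedules tasks across a cluster of workers.")
    for text, score in store.search("How do I create a collection in Milvus?", top_k=1):
        print(f"{score:.2f}  {text}")
```

The passages returned by `search` are what would be placed into the prompt sent to ChatGPT. A production system would swap the brute-force scan for an approximate nearest neighbor index, which is the job of the vector database.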

Building a Semantic Cache for Storing LLM Responses: GPTCache

A semantic cache can be a powerful tool that can bring several benefits to your application. Here are some of the benefits that you can expect when you build a semantic cache for storing LLM responses:

Improved Performance. Storing LLM responses in a cache can significantly reduce the time it takes to retrieve the response. This is especially true when the response has been previously requested and is already present in the cache. Storing responses in a cache can improve the overall performance of your application.

Reduced Expenses. Most LLM services charge fees based on a combination of the number of requests and token count. Caching LLM responses can help reduce the number of API calls made to the service, translating into cost savings. This is particularly relevant when dealing with high traffic levels, where API call expenses can be substantial.

Better Scalability. Caching LLM responses can improve the scalability of your application by reducing the load on the LLM service. Caching helps avoid bottlenecks and ensures that the application can handle a growing number of requests.

Customization. A semantic cache can be customized to store responses based on specific requirements, such as the type of input, the output format, or the length of the response. This can help optimize the cache and make it more efficient.

Reducing Network Latency. A semantic cache located closer to the user can reduce the time it takes to retrieve data from the LLM service. By reducing network latency, you can improve the overall user experience.

With these benefits in mind, we built and open-sourced GPTCache.

GPTCache is an open-source tool designed to improve the efficiency and speed of GPT-based applications by implementing a cache to store the responses generated by language models. GPTCache allows users to customize the cache according to their needs, including options for embedding functions, similarity evaluation functions, storage location and eviction. In addition, GPTCache currently supports the OpenAI ChatGPT interface and the LangChain interface.
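The core idea of a semantic cache can be sketched as follows. This is not GPTCache's API or implementation: the `SemanticCache` class, the word-count embedding, the 0.8 similarity threshold, and the least-recently-used eviction are all assumptions chosen to illustrate the customizable pieces named above (embedding function, similarity evaluation, storage, and eviction).

```python
import math
import re
from collections import Counter, OrderedDict


def embed(text):
    """Toy embedding function: lowercase word counts (stand-in for a real model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Similarity evaluation: cosine similarity between word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class SemanticCache:
    """Hypothetical cache: returns a stored response for sufficiently similar questions."""

    def __init__(self, threshold=0.8, max_size=100):
        self.threshold = threshold
        self.max_size = max_size
        self._entries = OrderedDict()  # question -> (embedding, response)

    def get(self, question):
        q = embed(question)
        best_key, best_score = None, 0.0
        for key, (vec, _) in self._entries.items():
            score = cosine(q, vec)
            if score > best_score:
                best_key, best_score = key, score
        if best_key is not None and best_score >= self.threshold:
            self._entries.move_to_end(best_key)  # mark as recently used
            return self._entries[best_key][1]
        return None

    def put(self, question, response):
        self._entries[question] = (embed(question), response)
        self._entries.move_to_end(question)
        if len(self._entries) > self.max_size:
            self._entries.popitem(last=False)  # evict the least recently used entry


def cached_answer(question, cache, llm):
    """Return a cached response on a hit; otherwise call the LLM and store the result."""
    hit = cache.get(question)
    if hit is not None:
        return hit
    response = llm(question)
    cache.put(question, response)
    return response
```

Here `llm` is any callable that takes a question and returns text, for example a thin wrapper around an LLM API call. Every cache hit skips that call entirely, which is where the latency and cost savings come from. The threshold controls the trade-off: set it too low and users receive answers to questions they did not ask.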
